Luxonis OAK cameras combine efficient edge AI processing with flexible deployment options. A powerful new feature of OAK4 cameras is the ability to tune the camera's performance using configurable power profiles.

Adjusting the power profile is especially useful when the camera would otherwise slow down due to thermal throttling after extended use. It lets users choose which component, the CPU or neural network (NN) inference, is prioritized and which one gives up performance. With adequate airflow and a cooler environment, the camera can also operate more consistently and maintain stable performance.

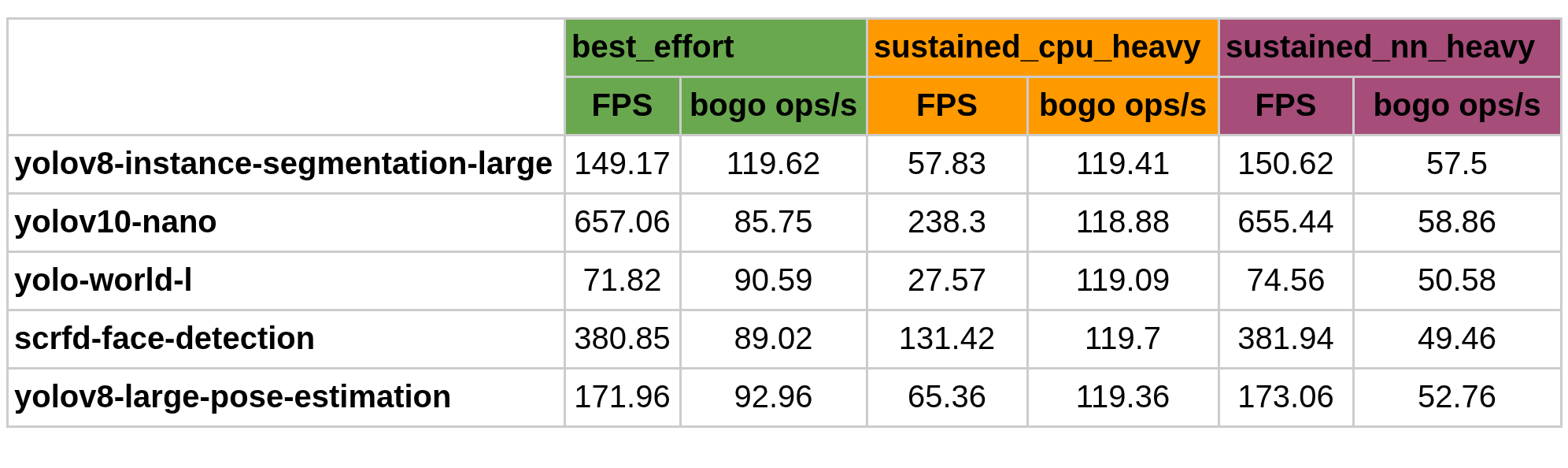

In this post, we benchmarked five neural networks across the three available power profiles to understand how each profile affects inference speed and CPU efficiency. To simulate a heavy-load scenario, the stress-ng tool kept the CPU at 100% load in the background.

⚠️ Power profile selection is only supported on OAK-4 series cameras, which is why all tests in this benchmark were run on an OAK-4D.

Power Profiles Available:

- best_effort
- sustained_cpu_heavy
- sustained_nn_heavy

Benchmark Results (Under Full CPU Load)

What Does “Bogo Ops/s (usr+sys)” Measure?

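It estimates how many CPU operations per second are executed, counted in stress-ng's "bogo ops" (bogus operations) and normalized by the CPU time the stressor actually consumed rather than by wall-clock time. It's calculated as: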

bogo ops/s = total bogo ops / (user time + system time)
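To make the formula concrete, here is a minimal sketch that applies it to the three raw values stress-ng reports. The helper name bogo_ops_per_sec is hypothetical and not part of stress-ng; it only restates the division above.

```bash
# bogo_ops_per_sec TOTAL_BOGO_OPS USR_SECS SYS_SECS  (hypothetical helper)
# Prints total bogo ops / (user time + system time) with two decimals,
# and fails if the combined CPU time is not positive.
bogo_ops_per_sec() {
  awk -v total="$1" -v usr="$2" -v sys="$3" 'BEGIN {
    cpu = usr + sys
    if (cpu <= 0) { print "error: usr+sys time must be > 0" > "/dev/stderr"; exit 1 }
    printf "%.2f\n", total / cpu
  }'
}

# Example with illustrative numbers (not benchmark data):
#   bogo_ops_per_sec 1000 2 3   -> 200.00
```

Dividing by usr+sys time instead of real time means a stressor that is starved of CPU does not look artificially slow, which is why this column is the one used for CPU efficiency here.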

Interpreting results

1. best_effort

- Balances NN and CPU workloads fairly well
- Yields high inference FPS
- Maintains higher CPU performance than sustained_nn_heavy in most cases

2. sustained_nn_heavy

- Prioritizes consistent NN inference throughput
- Often produces the highest FPS
- Comes at the cost of CPU efficiency, which drops notably under stress

3. sustained_cpu_heavy

- Yields the lowest FPS, especially for NN-heavy workloads
- In exchange, offers excellent CPU efficiency, making it useful in CPU-bound or multitasking applications
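The trade-offs above can be condensed into a small lookup, for example in a deployment script that picks a profile before starting a workload. This is only a sketch: suggest_profile and the nn/cpu/balanced labels are hypothetical, and the mapping simply restates the observations from this benchmark rather than an official recommendation.

```bash
# suggest_profile PRIORITY  (hypothetical helper and labels)
# Maps a workload priority to one of the three power profiles,
# following the trade-offs observed in this benchmark.
suggest_profile() {
  case "$1" in
    nn)       echo "sustained_nn_heavy" ;;   # steadiest NN throughput, less CPU headroom
    cpu)      echo "sustained_cpu_heavy" ;;  # most CPU headroom, lowest FPS
    balanced) echo "best_effort" ;;          # high FPS while keeping more CPU than nn
    *)        echo "unknown priority: $1 (use nn, cpu or balanced)" >&2; return 1 ;;
  esac
}
```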

Measurement accuracy

FPS (Frames Per Second) values were collected using the modelconverter benchmark tool and have an approximate ±10% margin of error.
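The ±10% margin matters when ranking profiles: if two FPS readings fall within each other's error band, the difference between them is not meaningful. A minimal sketch, assuming the margin applies relative to each reading; fps_distinguishable is a hypothetical helper name.

```bash
# fps_distinguishable FPS_A FPS_B [MARGIN]  (hypothetical helper)
# Succeeds (exit 0) only if the two readings stay apart after applying
# +/- MARGIN (default 0.10, i.e. 10%) to each; fails if the bands overlap.
fps_distinguishable() {
  awk -v a="$1" -v b="$2" -v m="${3:-0.10}" 'BEGIN {
    a += 0; b += 0; m += 0          # force numeric comparison
    lo = (a < b) ? a : b
    hi = (a < b) ? b : a
    # Bands [lo*(1-m), lo*(1+m)] and [hi*(1-m), hi*(1+m)] overlap when the
    # upper bound of the lower reading reaches the lower bound of the higher one.
    overlap = (lo * (1 + m) >= hi * (1 - m))
    exit overlap
  }'
}
```

For instance, with a 10% margin, 100 FPS vs 115 FPS overlaps (110 ≥ 103.5), while 100 FPS vs 130 FPS does not (110 < 117).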

CPU efficiency (bogo ops/s) was taken from the usr+sys time column of the stress-ng metrics output. However, these numbers should be treated as observational only, not as precise performance metrics.

⚠️ Disclaimer from stress-ng Documentation:

“Note that these are not a reliable metric of performance or throughput and have not been designed to be used for benchmarking whatsoever. The metrics are just a useful way to observe how a system behaves when under various kinds of load.”
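The benchmark script only filters the stress-ng output with grep, so the usr+sys bogo ops/s value still has to be read off the cpu row by hand. The awk-based sketch below extracts it and cross-checks it against the formula from earlier. It assumes the column order bogo ops, real time, usr time, sys time, bogo ops/s (real time), bogo ops/s (usr+sys time) after the stressor name; that matches the stress-ng metrics layout we are aware of but may differ between versions. parse_stress_ng_cpu_line is a hypothetical helper.

```bash
# parse_stress_ng_cpu_line  (hypothetical helper)
# Reads stress-ng --metrics output on stdin. For the "cpu" stressor row it
# prints the reported usr+sys bogo ops/s, then the value recomputed as
# bogo ops / (usr time + sys time). Header and other lines are ignored.
# ASSUMED column order after the stressor name (may vary by version):
#   bogo ops, real time, usr time, sys time, bogo ops/s (real), bogo ops/s (usr+sys)
parse_stress_ng_cpu_line() {
  awk '{
    for (k = 1; k <= NF - 6; k++) {
      if ($k == "cpu" && $(k + 1) ~ /^[0-9]+$/) {
        cpu_time = $(k + 3) + $(k + 4)
        if (cpu_time > 0)
          printf "%s %.2f\n", $(k + 6), $(k + 1) / cpu_time
        next
      }
    }
  }'
}
```

It can be fed the output of the docker run ... --metrics command from the benchmark script (adding 2>&1 to be safe); if the two printed numbers disagree, the assumed column order does not match your stress-ng version.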

Reproducing the Benchmark

These benchmarks were run on:

All results reflect system behavior under full camera CPU stress, ensuring realistic assessment for multitasking or high-load deployments.

1. Clone the Benchmarking Toolkit

```bash
git clone https://github.com/luxonis/modelconverter.git
cd modelconverter/
python3 -m venv venv
source venv/bin/activate
pip install -r requirements-bench.txt --extra-index-url https://artifacts.luxonis.com/artifactory/luxonis-python-release-local/
# Quote the extras so shells like zsh don't expand the brackets
pip install "modelconv[bench]"
```

2. Set Up SSH and API Key

Passwordless SSH access to the camera is required:

```bash
ssh-copy-id root@<INSERT_CAM_IP_HERE>
```

Replace `<INSERT_CAM_IP_HERE>` with the camera's IP address.

A Luxonis Hub API key is also needed. Create a free account at https://hub.luxonis.com/, go to Team Settings -> Create API Key, copy the key, and set it as an environment variable in the active terminal:

```bash
export HUBAI_API_KEY=<INSERT_API_KEY_HERE>
```
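Before starting a long benchmark run, it can help to confirm both prerequisites from this step. A minimal sketch, where preflight_check is a hypothetical helper; it relies on the standard OpenSSH options BatchMode=yes (fail instead of prompting for a password) and ConnectTimeout.

```bash
# preflight_check CAM_IP  (hypothetical helper)
# 1. HUBAI_API_KEY must be exported in the current shell.
# 2. Key-based (passwordless) SSH to root@CAM_IP must work.
preflight_check() {
  if [ -z "${HUBAI_API_KEY:-}" ]; then
    echo "HUBAI_API_KEY is not set; export it first" >&2
    return 1
  fi
  if [ -z "${1:-}" ]; then
    echo "usage: preflight_check <CAM_IP>" >&2
    return 1
  fi
  # BatchMode=yes makes ssh fail instead of asking for a password.
  if ! ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$1" true; then
    echo "passwordless SSH to root@$1 failed; rerun ssh-copy-id" >&2
    return 1
  fi
  echo "preflight OK"
}
```

For example, preflight_check <INSERT_CAM_IP_HERE> should print "preflight OK" before you launch the benchmark script.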

3. Create the Power Profile Benchmark Script

Save the following as power_profiles_benchmark.sh:

```bash
#!/bin/bash
# Usage: ./power_profiles_benchmark.sh <CAM_IP>

DEVICE_IP=$1
if [ -z "$DEVICE_IP" ]; then
    echo "Usage: $0 <CAM_IP>" >&2
    exit 1
fi

PROFILES=("best_effort" "sustained_cpu_heavy" "sustained_nn_heavy")

NN_MODELS=("luxonis/yolov8-instance-segmentation-large:coco-640x352"
           "luxonis/yolov10-nano:coco-512x288"
           "luxonis/yolo-world-l:640x640-host-decoding"
           "luxonis/scrfd-face-detection:10g-640x640"
           "luxonis/yolov8-large-pose-estimation:coco-640x352")

# Switch the camera to PROFILE, then benchmark NN_MODEL on it.
# CPU_STRING is only used to label the output.
run_benchmark() {
    local PROFILE=$1
    local NN_MODEL=$2
    local CPU_STRING=$3

    ssh root@"$DEVICE_IP" agentconfd power-switch --profile "$PROFILE" >/dev/null

    echo "#################### Benchmarking with $PROFILE $CPU_STRING #####################"
    # Keep 2 lines before and 3 lines after each FPS line
    modelconverter benchmark rvc4 --device-ip "$DEVICE_IP" --model-path "$NN_MODEL" | grep -A 3 -B 2 fps
}

for NN_MODEL in "${NN_MODELS[@]}"; do
    # Run benchmark per profile without CPU load
    for PROFILE in "${PROFILES[@]}"; do
        run_benchmark "$PROFILE" "$NN_MODEL" "without CPU load"
    done

    # Run benchmark per profile with CPU load
    for PROFILE in "${PROFILES[@]}"; do
        # Background stress-ng on all cores (-c 0) at 100% load (-l 100)
        ssh root@"$DEVICE_IP" docker run --rm jfleach/docker-arm-stress-ng -c 0 -l 100 --metrics | grep -E "cpu |stressor " &
        run_benchmark "$PROFILE" "$NN_MODEL" "with 100% CPU load"
        # Stop the load, then wait for its metrics output before the next run
        ssh root@"$DEVICE_IP" pkill stress-ng
        wait
    done
done
```

Make it executable:

```bash
chmod +x power_profiles_benchmark.sh
```

4. Run the Benchmark

```bash
./power_profiles_benchmark.sh <INSERT_CAM_IP_HERE>
```

⚙️ Pro Tip: Switching Power Profiles Manually

You can manually change the camera’s power profile via SSH using the following command:

```bash
agentconfd power-switch --profile best_effort
```

Replace best_effort with any of the supported profiles:

- best_effort
- sustained_cpu_heavy
- sustained_nn_heavy

Run this command from an SSH session on the camera. It is handy for quick experiments, diagnostics, or dynamic tuning in development workflows.
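When switching profiles from a development machine instead of a shell on the camera, a small wrapper can reject typos before anything is sent. This sketch reuses the ssh root@<IP> agentconfd power-switch --profile pattern from the benchmark script; set_power_profile and is_valid_profile are hypothetical helper names, and the accepted names are just the three profiles listed above.

```bash
# is_valid_profile PROFILE  (hypothetical helper)
# Succeeds only for the three supported power profile names.
is_valid_profile() {
  case "$1" in
    best_effort|sustained_cpu_heavy|sustained_nn_heavy) return 0 ;;
    *) return 1 ;;
  esac
}

# set_power_profile CAM_IP PROFILE  (hypothetical helper)
# Validates PROFILE locally, then switches it on the camera over SSH.
set_power_profile() {
  if [ "$#" -ne 2 ]; then
    echo "usage: set_power_profile <CAM_IP> <PROFILE>" >&2
    return 1
  fi
  if ! is_valid_profile "$2"; then
    echo "unsupported profile: $2" >&2
    return 1
  fi
  ssh "root@$1" agentconfd power-switch --profile "$2"
}
```

For example, set_power_profile <INSERT_CAM_IP_HERE> sustained_nn_heavy switches the camera to the NN-heavy profile, while a misspelled name fails locally with a non-zero exit status.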

Models Tested

- luxonis/yolov8-instance-segmentation-large:coco-640x352
- luxonis/yolov10-nano:coco-512x288
- luxonis/yolo-world-l:640x640-host-decoding
- luxonis/scrfd-face-detection:10g-640x640
- luxonis/yolov8-large-pose-estimation:coco-640x352

Conclusion

Power profiles offer valuable flexibility when deploying AI at the edge. This benchmark shows that selecting the right profile depends on whether you need raw inference throughput or CPU headroom, especially under heavy system load for prolonged periods, when thermal throttling becomes an issue.

All benchmarks were run under full CPU load, making these results relevant for real-world applications in robotics, surveillance, and multitasking systems.

We encourage you to test these profiles with your own workloads. Results may vary depending on model type, host resources, and system load.

Have feedback or results of your own? Join us on the Luxonis Forum and share your findings!