I am using a Luxonis OAK-FFC-4P module with OV9782 W sensors connected to ports B and C. My goal is to generate a depth map from this stereo pair. I am using the default undistortion provided by `enableUndistortion` to reduce the FOV and get an undistorted image feed, and I hope this extra processing step does not affect the depth calculation much.
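Undistorting and cropping to a fixed output width narrows the horizontal field of view. Under an ideal pinhole model the focal length in pixels follows directly from it, f = (W/2) / tan(HFOV/2), so a narrower FOV at the same 640 px width means a longer effective focal length and therefore larger disparities at a given depth. This is a minimal sketch under that pinhole assumption; the HFOV values are hypothetical placeholders, not measured OV9782 W figures.

```python
import math


def focal_length_px(width_px: float, hfov_deg: float) -> float:
    """Pinhole focal length in pixels for a given image width and horizontal FOV."""
    return (width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def hfov_deg(width_px: float, focal_px: float) -> float:
    """Inverse relation: horizontal FOV in degrees from width and focal length in pixels."""
    return math.degrees(2.0 * math.atan((width_px / 2.0) / focal_px))


if __name__ == "__main__":
    # Hypothetical HFOV values before and after undistortion/cropping (not sensor specs)
    for fov in (120.0, 90.0, 70.0):
        print(f"HFOV {fov:5.1f} deg -> f = {focal_length_px(640, fov):6.1f} px at 640 px width")
```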

I followed the official documentation on Configuring Stereo Depth and came up with the code below.

Additional information:

- Stereo baseline: 5 cm (same baseline as the Intel RealSense D435i)
- Distance from object: ~45 cm
- Use cases: VIO, obstacle avoidance, and other drone applications
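For a rectified pair, disparity and depth are related by d = f·B/Z, so at this baseline and distance the expected disparity, the depth change per disparity step (ΔZ ≈ Z²/(f·B)·Δd) and the closest measurable depth can be estimated directly. The sketch below assumes a pinhole model and a hypothetical focal length of 400 px at 640x400; the real value would come from the device calibration (for example `CalibrationHandler.getCameraIntrinsics()`). The 0 to 95 px standard and 0 to 190 px extended search ranges are taken from the DepthAI StereoDepth documentation, not read from the device.

```python
def disparity_px(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Ideal rectified-stereo disparity d = f * B / Z, in pixels."""
    return focal_px * baseline_m / depth_m


def depth_step_m(focal_px: float, baseline_m: float, depth_m: float,
                 disparity_step_px: float = 1.0) -> float:
    """Approximate depth change for one disparity step: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px


def min_depth_m(focal_px: float, baseline_m: float, max_disparity_px: float) -> float:
    """Closest measurable depth for a given disparity search range."""
    return focal_px * baseline_m / max_disparity_px


if __name__ == "__main__":
    F_PX = 400.0  # hypothetical focal length at 640x400; use the calibrated value in practice
    B_M = 0.05    # 5 cm baseline from the setup above
    Z_M = 0.45    # ~45 cm target distance
    print(f"disparity at {Z_M} m: {disparity_px(F_PX, B_M, Z_M):.1f} px")
    print(f"depth step per 1 px: {depth_step_m(F_PX, B_M, Z_M) * 1000:.1f} mm")
    print(f"depth step per 1/8 px: {depth_step_m(F_PX, B_M, Z_M, 1 / 8) * 1000:.2f} mm")
    for d_max in (95, 190):  # standard vs extended disparity search range (assumed)
        print(f"min depth with max disparity {d_max}: {min_depth_m(F_PX, B_M, d_max):.3f} m")
```

With the assumed 400 px focal length this gives roughly 44 px of disparity at 45 cm and about 1 cm of depth per whole-pixel disparity step, which suggests why subpixel precision tends to matter at a 5 cm baseline.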

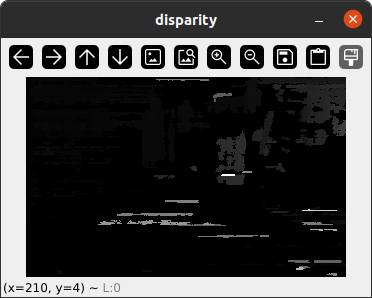

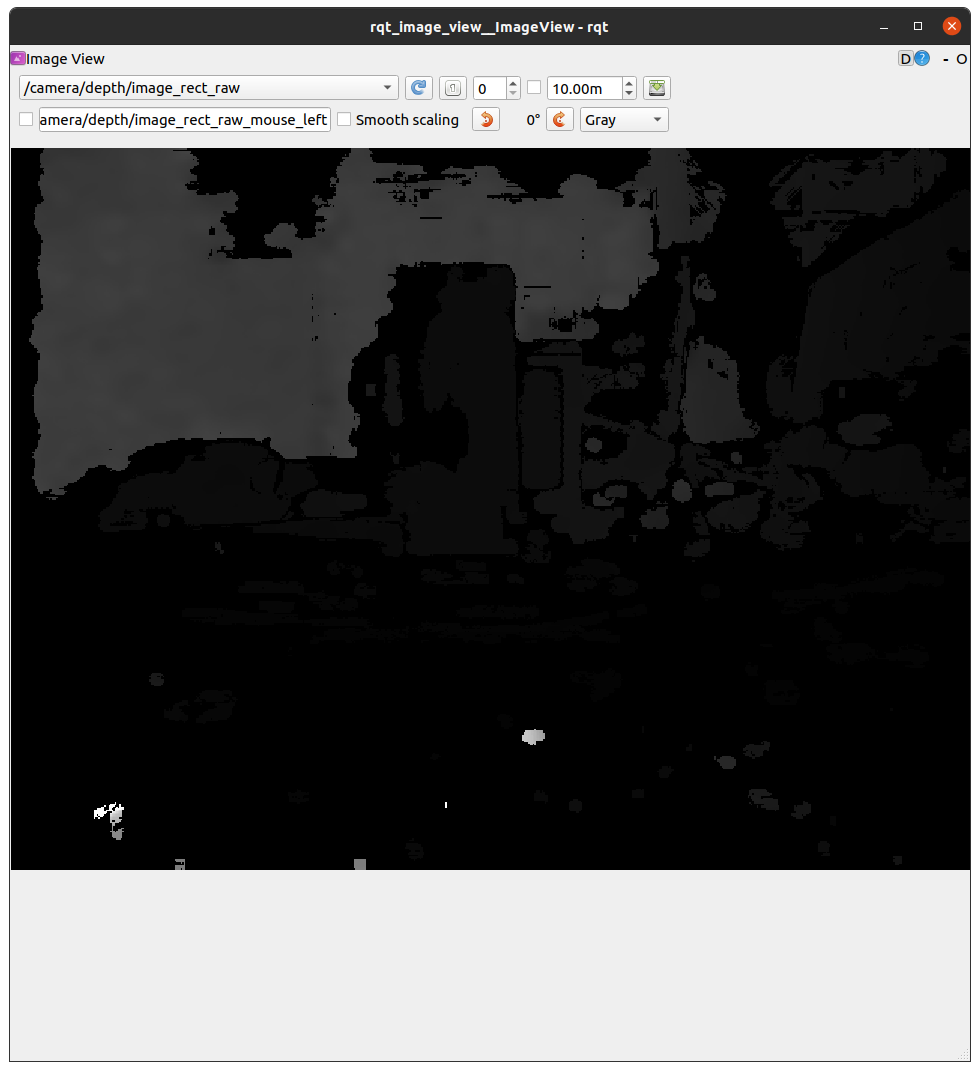

Observed issue: the disparity map from the OAK is noisy and inaccurate, unlike the D435i output.

However, the depth output quality is far from expected. To validate this, I ran a comparison against an Intel RealSense D435i connected to the same PC, with both devices placed at the same distance (approx. 45 cm from a water bottle used as the target object). For a fair comparison, I disabled the infrared emitter on the D435i.

Visually, the depth results from the RealSense are significantly more reliable and stable than those from the OAK module. I've attached images of the depth maps from both devices for reference.
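To back the visual comparison with numbers, one option is to record a short burst of depth frames from each camera and summarise the same region around the bottle. The helper below is hypothetical (`roi_depth_stats` is not part of DepthAI or librealsense); it is a minimal sketch assuming both depth streams have been converted to millimetre arrays where 0 means "no depth", and it reports the valid-pixel fill ratio plus the median per-pixel temporal standard deviation.

```python
import numpy as np


def roi_depth_stats(frames_mm: np.ndarray, roi: tuple) -> dict:
    """Summarise a stack of depth frames (N, H, W) in millimetres over a rectangular ROI.

    roi is (x, y, w, h). Pixels equal to 0 are treated as invalid (no depth).
    """
    x, y, w, h = roi
    patch = frames_mm[:, y:y + h, x:x + w].astype(np.float64)
    valid = patch > 0
    fill_ratio = float(valid.mean())

    # Temporal noise: per-pixel std over time, only for pixels valid in every frame
    always_valid = valid.all(axis=0)
    if always_valid.any():
        samples = patch[:, always_valid]          # shape (N, k)
        median_std = float(np.median(samples.std(axis=0)))
        median_depth = float(np.median(samples))
    else:
        median_std = float("nan")
        median_depth = float("nan")

    return {
        "fill_ratio": fill_ratio,
        "median_temporal_std_mm": median_std,
        "median_depth_mm": median_depth,
    }
```

Running it on, say, 30 frames from each device over the same bottle ROI gives two directly comparable rows instead of a side-by-side screenshot.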

Depth map from the OAK module:

Infra feed from the OAK module:

RealSense depth map:

Code:

```python
#!/usr/bin/env python3

import cv2
import depthai as dai
import numpy as np

pipeline = dai.Pipeline()

monoLeft = pipeline.create(dai.node.Camera).build(dai.CameraBoardSocket.CAM_B)
monoRight = pipeline.create(dai.node.Camera).build(dai.CameraBoardSocket.CAM_C)
stereo = pipeline.create(dai.node.StereoDepth)

# Initial image-quality controls, identical for both sensors
monoLeft.initialControl.setSharpness(2)
monoLeft.initialControl.setLumaDenoise(1)
monoLeft.initialControl.setChromaDenoise(4)
monoLeft.initialControl.setAntiBandingMode(dai.CameraControl.AntiBandingMode.MAINS_50_HZ)

monoRight.initialControl.setSharpness(2)
monoRight.initialControl.setLumaDenoise(1)
monoRight.initialControl.setChromaDenoise(4)
monoRight.initialControl.setAntiBandingMode(dai.CameraControl.AntiBandingMode.MAINS_50_HZ)

# 640x400 NV12 streams, cropped and undistorted on the camera node
monoLeftOut = monoLeft.requestOutput(
    size=(640, 400),
    type=dai.ImgFrame.Type.NV12,
    resizeMode=dai.ImgResizeMode.CROP,
    enableUndistortion=True,
    fps=30,
)
monoRightOut = monoRight.requestOutput(
    size=(640, 400),
    type=dai.ImgFrame.Type.NV12,
    resizeMode=dai.ImgResizeMode.CROP,
    enableUndistortion=True,
    fps=30,
)

# Linking
monoLeftOut.link(stereo.left)
monoRightOut.link(stereo.right)

# The original `stereo.PresetMode(5)` only constructed an enum value and applied nothing.
# setDefaultProfilePreset() applies a preset; index 5 is assumed to be ROBOTICS in DepthAI v3.
# It is called first, assuming it applies immediately, so the explicit settings below take precedence.
stereo.setDefaultProfilePreset(dai.node.StereoDepth.PresetMode.ROBOTICS)

stereo.setRectification(True)
stereo.setExtendedDisparity(True)
stereo.setLeftRightCheck(True)
stereo.setSubpixel(False)
stereo.setSubpixelFractionalBits(4)  # only takes effect when subpixel is enabled
# stereo.initialConfig.setConfidenceThreshold(0)
stereo.setBaseline(5)  # cm; overrides the calibrated baseline for disparity-to-depth conversion
stereo.setPostProcessingHardwareResources(3, 3)

stereo.initialConfig.setMedianFilter(dai.StereoDepthConfig.MedianFilter.KERNEL_3x3)
stereo.initialConfig.postProcessing.temporalFilter.enable = True
stereo.initialConfig.postProcessing.temporalFilter.alpha = 0.5
stereo.initialConfig.postProcessing.temporalFilter.delta = 3
stereo.initialConfig.postProcessing.speckleFilter.enable = True
stereo.initialConfig.postProcessing.speckleFilter.speckleRange = 12
stereo.initialConfig.postProcessing.spatialFilter.enable = True
stereo.initialConfig.postProcessing.spatialFilter.alpha = 0.5
stereo.initialConfig.postProcessing.spatialFilter.delta = 3
stereo.initialConfig.postProcessing.spatialFilter.holeFillingRadius = 2
stereo.initialConfig.postProcessing.spatialFilter.numIterations = 1
stereo.initialConfig.postProcessing.decimationFilter.decimationFactor = 2
# stereo.initialConfig.postProcessing.thresholdFilter.minRange = 400
# stereo.initialConfig.postProcessing.thresholdFilter.maxRange = 15000

syncedLeftQueue = stereo.syncedLeft.createOutputQueue()
syncedRightQueue = stereo.syncedRight.createOutputQueue()
disparityQueue = stereo.disparity.createOutputQueue()

colorMap = cv2.applyColorMap(np.arange(256, dtype=np.uint8), cv2.COLORMAP_JET)
colorMap[0] = [0, 0, 0]  # to make zero-disparity pixels black (not used by the greyscale view below)

with pipeline:
    pipeline.start()
    maxDisparity = 1
    while pipeline.isRunning():
        leftSynced = syncedLeftQueue.get()
        rightSynced = syncedRightQueue.get()
        disparity = disparityQueue.get()
        assert isinstance(leftSynced, dai.ImgFrame)
        assert isinstance(rightSynced, dai.ImgFrame)
        assert isinstance(disparity, dai.ImgFrame)

        cv2.imshow("left", leftSynced.getCvFrame())
        cv2.imshow("right", rightSynced.getCvFrame())

        npDisparity = disparity.getFrame()
        maxDisparity = max(maxDisparity, np.max(npDisparity))
        # Display disparity as greyscale instead of color
        greyscaleDisparity = ((npDisparity / maxDisparity) * 255).astype(np.uint8)
        cv2.imshow("disparity", greyscaleDisparity)

        key = cv2.waitKey(1)
        if key == ord('q'):
            pipeline.stop()
            break
```
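The loop above scales the greyscale view by a running maximum, so brightness can shift as `maxDisparity` grows, and it never converts disparity to metric depth. The sketch below shows that arithmetic under stated assumptions: with subpixel enabled the raw disparity is treated as fixed point with `2**fractional_bits` steps per pixel, depth is Z = f·B/d with 0 meaning invalid, and display scaling uses a fixed maximum. The function names are hypothetical helpers; StereoDepth can also emit depth directly through its `depth` output.

```python
import numpy as np


def raw_to_disparity_px(raw: np.ndarray, subpixel: bool, fractional_bits: int = 3) -> np.ndarray:
    """Convert raw disparity values to pixels.

    Assumes subpixel output is fixed point with `fractional_bits` fractional bits;
    without subpixel the raw value is taken to be whole pixels already.
    """
    scale = float(2 ** fractional_bits) if subpixel else 1.0
    return raw.astype(np.float32) / scale


def disparity_to_depth_mm(disp_px: np.ndarray, focal_px: float, baseline_mm: float) -> np.ndarray:
    """Depth Z = f * B / d in millimetres; zero disparity (invalid) stays 0."""
    depth = np.zeros_like(disp_px, dtype=np.float32)
    valid = disp_px > 0
    depth[valid] = focal_px * baseline_mm / disp_px[valid]
    return depth


def disparity_to_u8(disp_px: np.ndarray, max_disparity_px: float) -> np.ndarray:
    """Scale to 0..255 with a fixed maximum so brightness does not drift between frames."""
    return np.clip(disp_px / max_disparity_px * 255.0, 0, 255).astype(np.uint8)
```

Using a fixed maximum such as the configured search range keeps frames comparable over time, which makes flicker in the disparity easier to judge by eye.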

Questions for the Luxonis team:

1. Is there any sensor-specific tuning required for OV9782 W modules to improve stereo depth quality?
2. Is the 5 cm baseline setup fully supported for stereo depth on the OAK-FFC-4P?
3. Are there additional calibration or parameter tweaks (e.g., subpixel mode, confidence threshold, median filter, or other advanced configs) that I should try?
4. Is there a recommended pipeline template for getting the best stereo performance from OV9782 sensors on the OAK-FFC-4P?
5. Is the current performance limitation hardware-related (sensor choice, baseline, etc.) or software/tuning-related?

When subpixel mode is enabled together with the median filter, the following errors are reported:

```
[18443010313351F500] [3.4] [4.993] [StereoDepth(2)] [error] Maximum disparity value '3040' exceeds the maximum supported '1024' by median filter. Disabling median filter!
[18443010313351F500] [3.4] [5.046] [StereoDepth(2)] [error] Maximum disparity value '3040' exceeds the maximum supported '1024' by median filter. Disabling median filter!
```
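The value in the log is consistent with how the disparity range is encoded: assuming a base search range of 0 to 95 px, doubled to 190 by extended disparity and multiplied by 2^bits when subpixel is on, the configuration in the code (extended on, 4 fractional bits) gives 190 × 16 = 3040, above the 1024 limit the median filter reports. This sketch enumerates the combinations under that assumption; it does not query the device.

```python
BASE_MAX_DISPARITY = 95      # standard search range 0..95 px (assumed from DepthAI docs)
MEDIAN_FILTER_LIMIT = 1024   # limit quoted in the error message above


def max_disparity_value(extended: bool, subpixel: bool, fractional_bits: int = 3) -> int:
    """Largest raw disparity value for a given StereoDepth configuration."""
    value = BASE_MAX_DISPARITY * (2 if extended else 1)
    if subpixel:
        value *= 2 ** fractional_bits
    return value


if __name__ == "__main__":
    for extended in (False, True):
        for subpixel in (False, True):
            for bits in ((3, 4, 5) if subpixel else (0,)):
                value = max_disparity_value(extended, subpixel, bits)
                status = "ok" if value <= MEDIAN_FILTER_LIMIT else "median filter disabled"
                print(f"extended={extended!s:5} subpixel={subpixel!s:5} bits={bits} -> {value:5d} ({status})")
```

Under these assumptions, the only subpixel setting that stays within the median filter limit is extended disparity off with 3 fractional bits (760).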

I opened this issue to find out whether I've missed any configuration steps, or whether there are known limitations of OV9782 stereo modules compared to the RealSense.

Looking forward to any guidance, best practices, or sample configurations from the Luxonis team.