Dear Support Team,

I am currently working on a project involving RGB and depth data fusion using an OAK-D-PRO-POE (OpenCV AI Kit) camera. During integration, I encountered a critical issue regarding misalignment between the RGB and depth images, which significantly affects downstream tasks such as semantic segmentation projection and 3D reconstruction.

Problem Description

When fusing RGB and depth images from the OAK camera, we observe persistent misalignment, especially near the edges of the frame. This is particularly problematic when projecting semantic segmentation results from the RGB image onto the depth point cloud: object boundaries are often lost, which reduces the accuracy of 3D reconstruction and object recognition.

Solutions Attempted

Following the recommendations in the official Luxonis documentation ( https://docs.luxonis.com/software/perception/rgb-d ), we have attempted the following alignment methods:

- The setDepthAlign() method

- The ImageAlign interface

- Manual undistortion and mapping techniques

Unfortunately, none of these methods were able to eliminate the alignment error between the RGB and depth images. Upon closer inspection, we also observed similar misalignment in the example images provided in the official documentation. For instance, in Figure 1, the projected range of the depth image is noticeably larger than that of the RGB image. This leads to a boundary mismatch where the depth image appears to be “inflated” around the edges compared to the RGB image.
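It may help to reason about the alignment that setDepthAlign() and ImageAlign perform in terms of the standard pinhole registration chain: back-project a depth pixel to 3D with the depth camera intrinsics, move the point into the RGB camera frame with the extrinsics, and project it with the RGB intrinsics. The plain-Python sketch below shows that chain for a single pixel. It is not Luxonis's implementation, it ignores lens distortion, and every number in it (both intrinsic sets and the 37.5 mm offset) is a hypothetical placeholder, not OAK-D-PRO-POE calibration data. On a real device these values would come from the on-board calibration (depthai's `CalibrationHandler`, e.g. `getCameraIntrinsics()` and `getCameraExtrinsics()`).

```python
# Minimal sketch of depth-to-RGB registration with a pinhole model.
# All calibration values are HYPOTHETICAL placeholders; lens distortion is ignored.


def backproject(u, v, z, fx, fy, cx, cy):
    """Depth pixel (u, v) with depth z (mm) -> 3D point in the depth camera frame."""
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return (x, y, z)


def transform(point, R, t):
    """Rigid transform p' = R p + t (R: 3x3 nested lists, t: translation in mm)."""
    return tuple(sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3))


def project(point, fx, fy, cx, cy):
    """3D point in the RGB camera frame -> RGB pixel coordinates."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


def depth_pixel_to_rgb(u, v, z, K_depth, K_rgb, R, t):
    """Map one depth pixel into the RGB image: back-project, transform, project."""
    p_depth = backproject(u, v, z, *K_depth)
    p_rgb = transform(p_depth, R, t)
    return project(p_rgb, *K_rgb)


# Hypothetical intrinsics (fx, fy, cx, cy) in pixels.
K_DEPTH = (800.0, 800.0, 640.0, 360.0)
K_RGB = (1500.0, 1500.0, 960.0, 540.0)
# Hypothetical extrinsics (depth -> RGB): no rotation, 37.5 mm horizontal offset.
R_IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
T_DEPTH_TO_RGB = (-37.5, 0.0, 0.0)
```

Because the resulting RGB pixel depends on z, an error in either the depth value or the extrinsics moves the mapped pixel, and that shift grows as z shrinks. In this toy setup the same depth pixel lands at u = 772.5 at 300 mm but at u = 903.75 at 1000 mm.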

Practical Verification

To further illustrate the alignment issue, we conducted a comparative test with the following steps:

- Captured the raw RGB image, the RGB image after manual undistortion, and the corresponding depth image (see Figure 2).

- Converted each set of RGB and depth images into 3D point clouds for visualization.

The results are shown in Figure 3:

- Left: Point cloud generated using the raw (not undistorted) RGB image.

- Right: Point cloud generated using the undistorted RGB image.
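Converting an aligned RGB-D pair into a colored point cloud, as done for Figure 3, comes down to back-projecting every valid depth pixel with the intrinsics of the frame the depth was aligned to, then attaching the RGB value at the same pixel. The following is a minimal plain-Python sketch. It assumes depth in millimetres with 0 marking invalid pixels, which is the convention of the StereoDepth `depth` output. The intrinsics are left as parameters for you to supply. A real pipeline would use NumPy or Open3D instead of nested loops.

```python
def depth_to_colored_points(depth, rgb, fx, fy, cx, cy, min_z=1, max_z=10000):
    """Back-project an RGB-aligned depth map into a colored point cloud.

    depth: rows of depth values in mm, already aligned to the RGB frame (0 = invalid).
    rgb:   rows of (r, g, b) tuples with the same shape as depth.
    fx, fy, cx, cy: intrinsics of the image the depth is aligned to.
    Returns a list of (x, y, z, r, g, b) tuples; depths outside [min_z, max_z] are skipped.
    """
    points = []
    for v, (depth_row, rgb_row) in enumerate(zip(depth, rgb)):
        for u, (z, color) in enumerate(zip(depth_row, rgb_row)):
            if z < min_z or z > max_z:
                continue  # invalid or out-of-range depth
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, float(z)) + tuple(color))
    return points
```

The intrinsics must describe the same image (raw or undistorted) that supplies the colors. Mixing the two is one way colors could drift relative to geometry toward the image borders.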

Test Results

Our experimental results indicate the following:

- The RGB image covers a noticeably smaller area than the actual physical object, leading to incomplete overlap between RGB segmentation results and the corresponding depth map.

- This issue is especially prominent near the image boundaries, where segmentation masks fail to fully cover the target objects.
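One possible contributor to errors that concentrate at the boundaries is lens distortion handled inconsistently between the two images. This is offered as one candidate, not a diagnosis. Under the radial Brown-Conrady model (the k1, k2, k3 terms that OpenCV also uses), a pixel's displacement is zero at the principal point and grows roughly with the cube of its distance from it. The sketch below uses hypothetical values (k1 = -0.1, fx = fy = 1000 px) purely for illustration and omits tangential terms. Real per-camera coefficients are stored in the device calibration (depthai's `CalibrationHandler.getDistortionCoefficients()`).

```python
import math


def radial_distort(u, v, fx, fy, cx, cy, k1, k2, k3=0.0):
    """Map an ideal (undistorted) pixel to where a lens with radial
    coefficients k1..k3 would image it (radial Brown-Conrady terms only)."""
    x = (u - cx) / fx
    y = (v - cy) / fy
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    return (fx * x * scale + cx, fy * y * scale + cy)


def displacement(u, v, fx, fy, cx, cy, k1, k2, k3=0.0):
    """Distance in pixels between the ideal and the distorted position."""
    ud, vd = radial_distort(u, v, fx, fy, cx, cy, k1, k2, k3)
    return math.hypot(ud - u, vd - v)


# Hypothetical 1920x1080 camera: principal point at the image center.
CAM = (1000.0, 1000.0, 960.0, 540.0)
```

With these values, a pixel halfway to the right border moves about 11 px, while a pixel at the border moves about 88 px. A mismatch that is invisible at the center can therefore be large at the edges.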

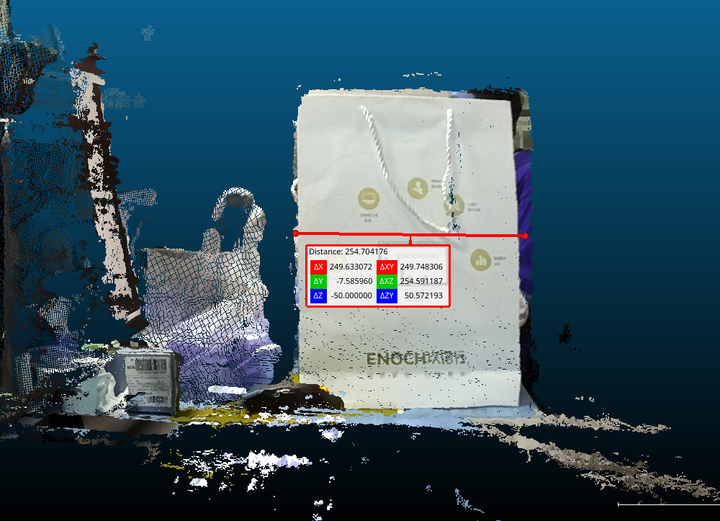

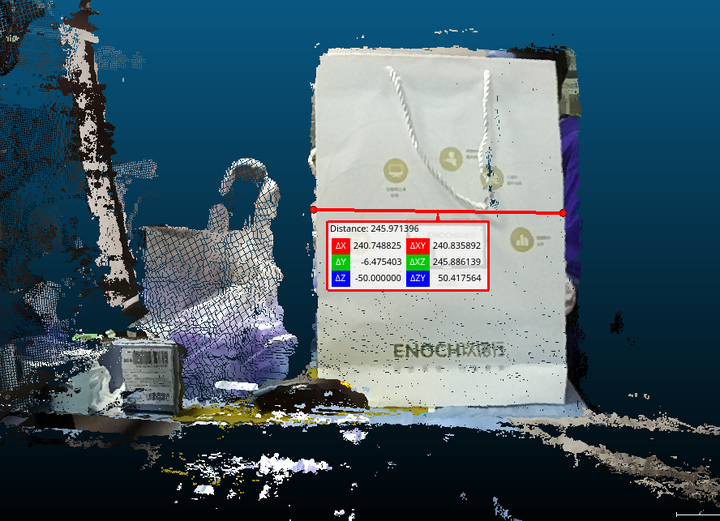

- As shown in Figure 4, the misalignment can reach up to approximately 10 mm in close-range scenarios.

- This level of offset poses a significant challenge for high-precision applications, particularly where objects occupy a large portion of the field of view — the larger the object appears in the image, the more noticeable the error becomes.
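To relate the metric offset to image-space quantities, note that under the pinhole model a metric error e at depth Z corresponds to a pixel offset of roughly e · fx / Z, and the conversion works in reverse as well. The helpers below perform that conversion. The focal length of 1500 px and the 400 mm working distance used in the example are hypothetical values, not measurements from Figure 4.

```python
def mm_to_pixel_offset(err_mm, z_mm, fx_px):
    """Metric misalignment at depth z -> approximate pixel offset (pinhole model)."""
    return err_mm * fx_px / z_mm


def pixel_offset_to_mm(offset_px, z_mm, fx_px):
    """Pixel offset at depth z -> approximate metric misalignment (pinhole model)."""
    return offset_px * z_mm / fx_px


# Hypothetical example: 10 mm error at 400 mm with fx = 1500 px.
offset = mm_to_pixel_offset(10.0, 400.0, 1500.0)
```

In this example, 10 mm corresponds to 37.5 px. Expressing the measured misalignment in pixels at several distances can show whether it behaves like a fixed image-space offset or depends on depth.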

Request for Feedback

Could you please help clarify the following points regarding the RGB-Depth alignment issue with the OAK camera:

- Does the current OAK hardware have inherent limitations in native RGB-Depth calibration?

- Could you provide guidance or solutions to mitigate this misalignment?

Additionally, I would like to ask how best to tune depth quality when capturing weakly textured object surfaces from a side angle.

Thank you.

Below is the pipeline I used; please correct any possible errors:

```python
import depthai as dai
from datetime import timedelta


def create_pipeline():
    pipeline = dai.Pipeline()

    MONO_RES = dai.MonoCameraProperties.SensorResolution.THE_720_P

    # Mono cameras setup (CAM_B = left, CAM_C = right; LEFT/RIGHT/RGB are the older, deprecated names)
    monoLeft = pipeline.create(dai.node.MonoCamera)
    monoLeft.setResolution(MONO_RES)
    monoLeft.setBoardSocket(dai.CameraBoardSocket.CAM_B)
    monoLeft.setFps(15)

    monoRight = pipeline.create(dai.node.MonoCamera)
    monoRight.setResolution(MONO_RES)
    monoRight.setBoardSocket(dai.CameraBoardSocket.CAM_C)
    monoRight.setFps(15)

    # Stereo depth setup
    stereo = pipeline.create(dai.node.StereoDepth)
    stereo.setDefaultProfilePreset(dai.node.StereoDepth.PresetMode.HIGH_DENSITY)
    stereo.setDepthAlign(dai.CameraBoardSocket.CAM_A)  # Reproject depth into the RGB camera's viewpoint
    stereo.setOutputSize(1920, 1080)  # Match the RGB isp output size (1080p, ISP scale 1/1)
    stereo.setNumFramesPool(10)
    stereo.setLeftRightCheck(True)  # Invalidate pixels where L->R and R->L disparities disagree
    stereo.setSubpixel(True)  # Finer disparity steps, improves precision at longer range
    stereo.setExtendedDisparity(False)
    stereo.setMedianFilter(dai.MedianFilter.KERNEL_7x7)
    stereo.setConfidenceThreshold(220)

    # Color camera setup (CAM_A = RGB)
    colorCam = pipeline.create(dai.node.ColorCamera)
    colorCam.setBoardSocket(dai.CameraBoardSocket.CAM_A)
    colorCam.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)
    colorCam.setIspScale(1, 1)  # No ISP downscaling: isp output stays 1920x1080
    colorCam.setInterleaved(False)  # Planar layout (applies to the preview output)
    colorCam.setFps(15)
    # Note: on auto-focus RGB variants, Luxonis' rgb_depth_aligned example locks the lens to the
    # calibrated position (CalibrationHandler.getLensPosition + initialControl.setManualFocus),
    # because refocusing changes the RGB intrinsics that the alignment relies on.

    # Synchronization setup: pair RGB and depth frames whose timestamps differ by less than 15 ms
    sync = pipeline.create(dai.node.Sync)
    sync.setSyncThreshold(timedelta(milliseconds=15))

    # Linking nodes
    colorCam.isp.link(sync.inputs["rgb"])
    stereo.depth.link(sync.inputs["depth"])

    # Output streams to the host
    xoutSync = pipeline.create(dai.node.XLinkOut)
    xoutSync.setStreamName("rgbd")
    sync.out.link(xoutSync.input)

    xoutLeft = pipeline.create(dai.node.XLinkOut)
    xoutLeft.setStreamName("left")
    monoLeft.out.link(xoutLeft.input)

    # Connect mono cameras to stereo
    monoLeft.out.link(stereo.left)
    monoRight.out.link(stereo.right)

    return pipeline
```