Hello,

I recently purchased an OAK-D Pro and have been trying to run RGB-D and object detection on it at the same time. When I do, my pipeline crashes with the following message:

    RuntimeError: NeuralNetwork: Blob compiled for 8 shaves, but only 7 are available in current configuration.
    NeuralNetwork allocated resources: shaves: [0-6] cmx slices: [0-6]
    ColorCamera allocated resources: no shaves; cmx slices: [13-15]
    StereoDepth allocated resources: shaves: [10-13] cmx slices: [7-12]
    ImageManip allocated resources: shaves: [15-15] no cmx slices.

This seems strange to me: the log shows SHAVES 0-6, 10-13, and 15 in use. What happened to 7-9 and 14? Why can't those SHAVES be used?
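To double-check my reading of the log, here is a small sketch that tallies the allocations. It assumes the device has 16 SHAVES and 16 CMX slices in total (indices 0-15); that total is my assumption and is not stated in the log. The helper name `allocated` is made up for this example.

```python
import re

# Assumption: 16 SHAVES and 16 CMX slices in total (indices 0-15).
TOTAL_SHAVES = 16
TOTAL_CMX_SLICES = 16

LOG = """\
NeuralNetwork allocated resources: shaves: [0-6] cmx slices: [0-6]
ColorCamera allocated resources: no shaves; cmx slices: [13-15]
StereoDepth allocated resources: shaves: [10-13] cmx slices: [7-12]
ImageManip allocated resources: shaves: [15-15] no cmx slices.
"""


def allocated(log: str, resource: str) -> dict[str, set[int]]:
    """Map each node name to the set of indices it holds for `resource`."""
    # Matches e.g. "shaves: [10-13]"; "no shaves;" has no bracketed range
    # and is therefore skipped.
    pattern = re.compile(rf"{re.escape(resource)}: \[(\d+)-(\d+)\]")
    result: dict[str, set[int]] = {}
    for line in log.splitlines():
        node = line.split(" allocated resources:")[0]
        match = pattern.search(line)
        if match:
            low, high = int(match.group(1)), int(match.group(2))
            result[node] = set(range(low, high + 1))
    return result


shave_use = allocated(LOG, "shaves")
cmx_use = allocated(LOG, "cmx slices")

used_shaves = set().union(*shave_use.values())
used_cmx = set().union(*cmx_use.values())

free_shaves = sorted(set(range(TOTAL_SHAVES)) - used_shaves)
free_cmx = sorted(set(range(TOTAL_CMX_SLICES)) - used_cmx)

print("Unallocated SHAVES:", free_shaves)
print("Unallocated CMX slices:", free_cmx)
```

Under that assumption, SHAVES 7-9 and 14 are not listed by any node, while every CMX slice from 0 to 15 is already assigned. I do not know whether that is related to the limit of 7.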

Obviously I could recompile the neural network to use 7 SHAVES, but that would reduce performance, which I would like to avoid.

Any suggestions to better utilize the SHAVES?

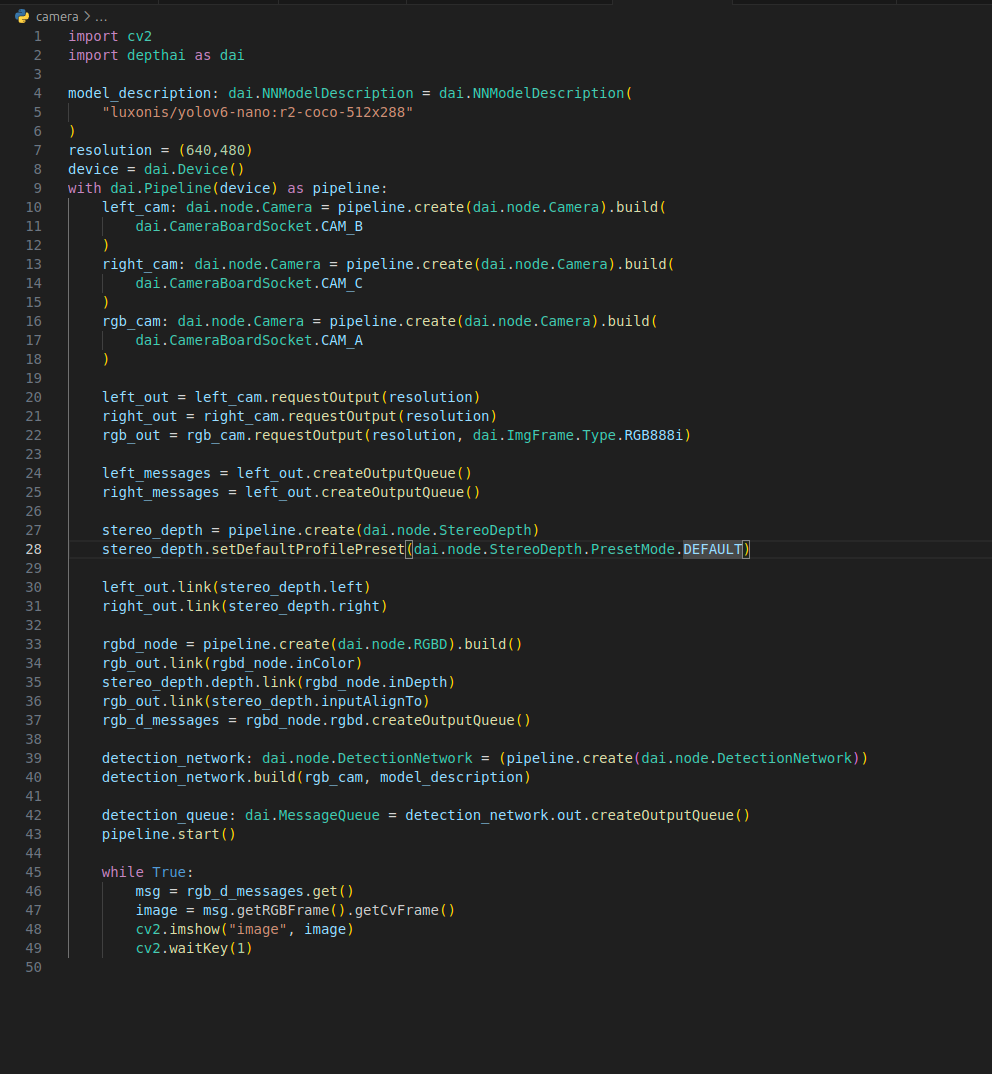

Here is the code I am running:

    import cv2
    import depthai as dai

    model_description: dai.NNModelDescription = dai.NNModelDescription(
        "luxonis/yolov6-nano:r2-coco-512x288"
    )
    resolution = (640, 480)
    device = dai.Device()

    with dai.Pipeline(device) as pipeline:
        # Left/right cameras feed stereo depth; the RGB camera feeds RGB-D and detection.
        left_cam: dai.node.Camera = pipeline.create(dai.node.Camera).build(
            dai.CameraBoardSocket.CAM_B
        )
        right_cam: dai.node.Camera = pipeline.create(dai.node.Camera).build(
            dai.CameraBoardSocket.CAM_C
        )
        rgb_cam: dai.node.Camera = pipeline.create(dai.node.Camera).build(
            dai.CameraBoardSocket.CAM_A
        )

        left_out = left_cam.requestOutput(resolution)
        right_out = right_cam.requestOutput(resolution)
        rgb_out = rgb_cam.requestOutput(resolution, dai.ImgFrame.Type.RGB888i)

        left_messages = left_out.createOutputQueue()
        right_messages = right_out.createOutputQueue()

        # Stereo depth, aligned to the RGB stream and combined into RGB-D.
        stereo_depth = pipeline.create(dai.node.StereoDepth)
        stereo_depth.setDefaultProfilePreset(dai.node.StereoDepth.PresetMode.DEFAULT)
        left_out.link(stereo_depth.left)
        right_out.link(stereo_depth.right)
        rgbd_node = pipeline.create(dai.node.RGBD).build()
        rgb_out.link(rgbd_node.inColor)
        stereo_depth.depth.link(rgbd_node.inDepth)
        rgb_out.link(stereo_depth.inputAlignTo)
        rgb_d_messages = rgbd_node.rgbd.createOutputQueue()

        # Object detection on the RGB camera.
        detection_network: dai.node.DetectionNetwork = pipeline.create(
            dai.node.DetectionNetwork
        )
        detection_network.build(rgb_cam, model_description)

        detection_queue: dai.MessageQueue = detection_network.out.createOutputQueue()
        pipeline.start()

        while True:
            msg = rgb_d_messages.get()
            image = msg.getRGBFrame().getCvFrame()
            cv2.imshow("image", image)
            cv2.waitKey(1)

Thanks in advance.