In this blog post, we evaluate the Factor Perception SDK for VIO/SLAM on the OAK-D (Wide).

Here's a quick demo of 3D mapping a shopping mall:

Factor Perception SDK

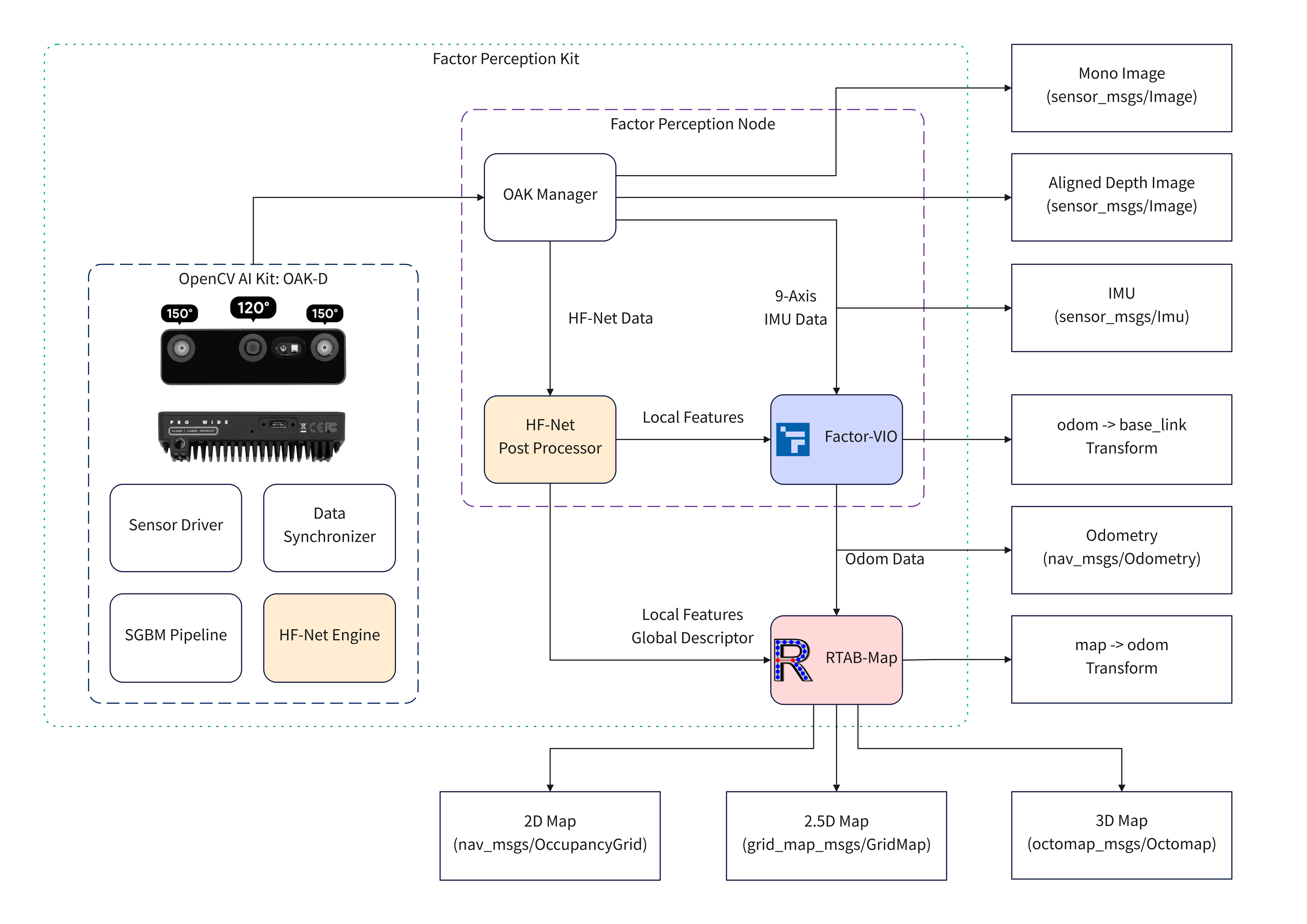

Factor Perception SDK is a third-party SDK (not developed by Luxonis), and below is a diagram of its architecture. It uses RTAB-Map for SLAM and the HF-Net NN model to run VIO efficiently on the edge. Our evaluation was done with version 1.1 of the SDK.

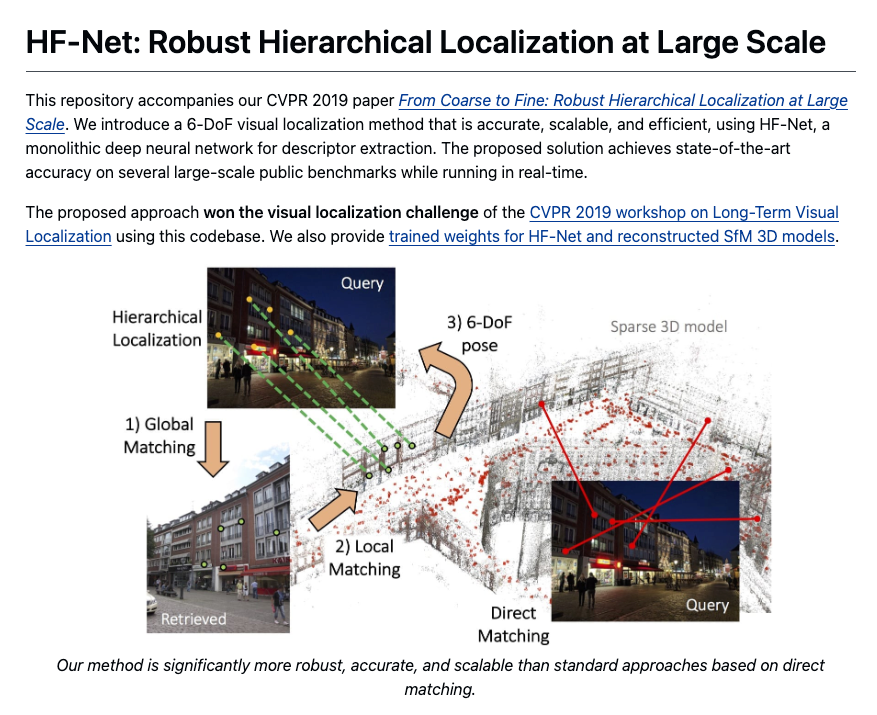

HF-Net

Factor Perception SDK runs the HF-Net NN model (paper's repo, depthai-hf-net demo) on-device, which offloads part of the computational workload from the host computer to the camera.

Evaluation results

We noticed that while X/Y drift is minimal, there is some Z-drift: about 1 m over the shopping mall video. If you operate only on flat areas (e.g. a single-floor warehouse rather than a multi-floor building), this could be addressed by adding Z-drift constraints to the SLAM algorithm, or more generally with graph optimizations.
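To make the flat-floor idea concrete, here is a minimal sketch of a Z constraint applied as a post-processing step on an exported trajectory. It is not the SDK's API: the `Pose` record, `constrain_to_plane`, and `z_drift` are hypothetical names introduced for illustration, and a real fix would more likely live inside the SLAM backend. RTAB-Map itself has a `Reg/Force3DoF` parameter for planar motion, but we have not checked whether the Factor Perception SDK exposes it.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Pose:
    """Hypothetical pose record: position in meters, heading in radians."""
    x: float
    y: float
    z: float
    yaw: float


def z_drift(trajectory: List[Pose]) -> float:
    """Height difference between the last and the first pose."""
    return trajectory[-1].z - trajectory[0].z


def constrain_to_plane(trajectory: List[Pose], floor_z: float = 0.0) -> List[Pose]:
    """Pin every pose to a fixed height, leaving x, y and yaw untouched.

    Only valid under the assumption that the camera stays at a roughly
    constant height on a single flat floor.
    """
    return [replace(pose, z=floor_z) for pose in trajectory]


# Synthetic trajectory: 100 m straight walk with 1 m of accumulated Z-drift.
raw = [Pose(x=float(i), y=0.0, z=i / 100.0, yaw=0.0) for i in range(101)]
flat = constrain_to_plane(raw)

print("Z-drift before:", z_drift(raw))
print("Z-drift after: ", z_drift(flat))
```

Clamping the height this way discards any real vertical motion, so it only makes sense for single-floor environments. For multi-floor maps, graph optimization with loop closures is the more appropriate tool.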