Hi there!

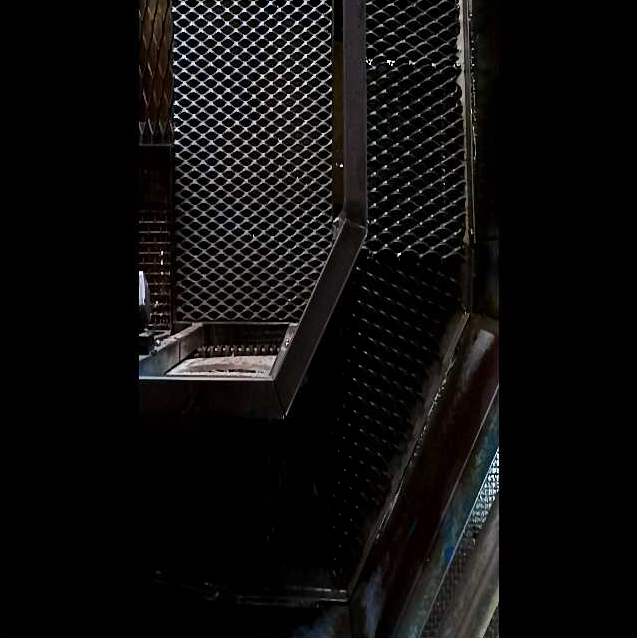

I'd like to maximise my FOV before feeding frames into my YOLO model. At the moment, when I feed a frame in, it just defaults to cropping the centre 640x640, which throws away everything outside that square. This isn't very useful. When I resize it instead, the image looks like this:

However, I'd like to remove the letterboxing and instead squeeze the vertical axis (originally the horizontal axis, but vertical now because I've rotated the frame 90 degrees), so that it ends up something like this (poor-quality mockup done in MS Paint):

Basically, I want to squeeze the image vertically rather than letterbox it horizontally.
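To make the difference concrete, here's a rough sketch of the arithmetic. It assumes a 1920x1080 sensor frame (I haven't stated my actual resolution above, so treat that number as hypothetical), rotated 90 degrees so it becomes 1080 wide and 1920 tall. Letterboxing uses one uniform scale factor and pads the rest; squeezing uses a separate scale factor per axis.

```python
def compare_fit(src_w, src_h, dst_w=640, dst_h=640):
    """Compare letterboxing vs stretching a src_w x src_h frame into dst_w x dst_h."""
    # Letterbox: one uniform scale (the smaller of the two) so the whole frame fits,
    # then pad the leftover space on the other axis.
    s = min(dst_w / src_w, dst_h / src_h)
    content_w, content_h = round(src_w * s), round(src_h * s)
    pad_x, pad_y = (dst_w - content_w) // 2, (dst_h - content_h) // 2
    # Stretch ("squeeze"): an independent scale per axis and no padding,
    # so every output pixel carries image content.
    sx, sy = dst_w / src_w, dst_h / src_h
    return {
        "letterbox_content": (content_w, content_h),
        "letterbox_pad": (pad_x, pad_y),
        "stretch_scale": (round(sx, 4), round(sy, 4)),
    }

# Hypothetical 1920x1080 frame after a 90-degree rotation: 1080 wide, 1920 tall.
print(compare_fit(1080, 1920))
# → {'letterbox_content': (360, 640), 'letterbox_pad': (140, 0), 'stretch_scale': (0.5926, 0.3333)}
```

So with letterboxing, 280 of the 640 columns are padding; with the squeeze, all 640x640 pixels are image, but the vertical axis is compressed about 16/9 times more than the horizontal one.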

This is my ImageManip config so far:

```python
import depthai as dai

pipeline = dai.Pipeline()

image_manip = pipeline.create(dai.node.ImageManip)
image_manip.initialConfig.setKeepAspectRatio(False)  # stretch instead of letterboxing
image_manip.initialConfig.setResize(640, 640)  # resize to 640x640
image_manip.initialConfig.setRotationDegrees(90)  # rotate the frame 90 degrees
```
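In case it helps anyone answering, here's a rough host-side sketch (NumPy only, not DepthAI) of the output I'm after: rotate 90 degrees, then stretch each axis independently to 640x640 with no padding. It uses nearest-neighbour sampling for simplicity; the rotation direction is an assumption (I haven't checked which way `setRotationDegrees(90)` turns the frame), hence the hypothetical `clockwise` flag, and the 1920x1080 input is again hypothetical.

```python
import numpy as np

def rotate_and_stretch(frame, size=640, clockwise=True):
    """Rotate a frame by 90 degrees, then stretch it to size x size (no letterboxing).

    Host-side reference only; nearest-neighbour sampling, not ImageManip's interpolation.
    """
    rotated = np.rot90(frame, k=-1 if clockwise else 1)
    h, w = rotated.shape[:2]
    # Map each output row/column to a source row/column, scaling each axis independently.
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return rotated[rows[:, None], cols[None, :]]

frame = np.zeros((1080, 1920, 3), dtype=np.uint8)  # hypothetical 1920x1080 BGR frame
out = rotate_and_stretch(frame)
print(out.shape)  # (640, 640, 3)
```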

Thanks in advance for your help!