Sure!

This is the program I am running on my side:

    from depthai_sdk import OakCamera
    import cv2, numpy as np
    from queue import Queue
    from typing import Tuple

    class Face:
        def __init__(self) -> None:
            pass

        def get_bbox(self, packet):
            for i in packet.detections:
                pt1, pt2 = i.bbox.denormalize(packet.frame.shape)
                print(f"img shape from me: {packet.frame.shape}")
                print(f"denormalized points from me: {pt1,pt2}")
                print(f"Points from me: {i.bbox}")
                print("________________________________________")
            # cv2.imshow("frame", packet.frame)

        def start(self):
            with OakCamera() as oak:
                color = oak.create_camera('color')
                nn = oak.create_nn('face-detection-retail-0004', color)
                oak.visualize([nn.out.main], fps=True)
                oak.callback(nn, callback=self.get_bbox)
                oak.start(blocking=True)

    f = Face()
    f.start()

In the callback function I am currently printing the image shape, as well as the denormalized and normalized points.

When I tried to draw the bounding box myself in the callback function, I noticed that the returned coordinates were inaccurate.

However, the bounding box drawn by the visualizer looks correct, so I printed the same information inside the draw_stylized_bbox function in depthai_sdk/visualize/visualization_helper.py:

    def draw_stylized_bbox(img: np.ndarray, obj: VisBoundingBox) -> None:
        """
        Draw a stylized bounding box. The style is either passed as an argument or defined in the config.

        Args:
            img: Image to draw on.
            obj: Bounding box to draw.
        """
        pt1, pt2 = obj.bbox.denormalize(img.shape)
        print(f"image shape from visualizer helper: {img.shape}")
        print(f"denormalized points from visualizer helper {pt1, pt2}")
        print(f"Points from visualizer helper: {obj.bbox}")

        box_w = pt2[0] - pt1[0]
        box_h = pt2[1] - pt1[1]

        line_width = int(box_w * obj.config.detection.line_width) // 2
        line_height = int(box_h * obj.config.detection.line_height) // 2
        roundness = int(obj.config.detection.box_roundness)
        bbox_style = obj.bbox_style or obj.config.detection.bbox_style
        alpha = obj.config.detection.fill_transparency

        if bbox_style == BboxStyle.RECTANGLE:
            draw_bbox(img, pt1, pt2,
                      obj.color, obj.thickness, 0,
                      line_width=0, line_height=0, alpha=alpha)
        elif bbox_style == BboxStyle.CORNERS:
            draw_bbox(img, pt1, pt2,
                      obj.color, obj.thickness, 0,
                      line_width=line_width, line_height=line_height, alpha=alpha)
        elif bbox_style == BboxStyle.ROUNDED_RECTANGLE:
            draw_bbox(img, pt1, pt2,
                      obj.color, obj.thickness, roundness,
                      line_width=0, line_height=0, alpha=alpha)
        elif bbox_style == BboxStyle.ROUNDED_CORNERS:
            draw_bbox(img, pt1, pt2,
                      obj.color, obj.thickness, roundness,
                      line_width=line_width, line_height=line_height, alpha=alpha)

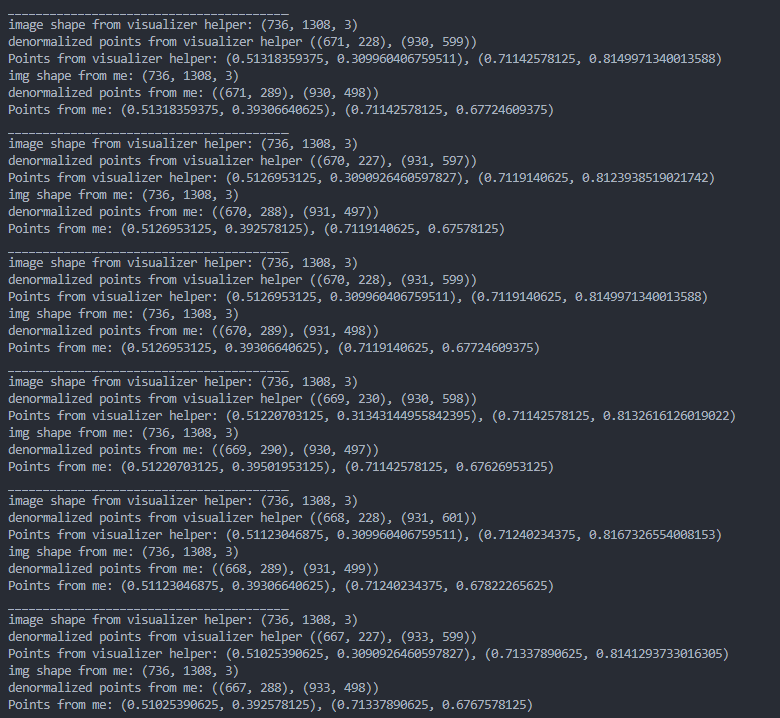

The following is the output:

As can be seen, the x coordinates are identical but the y coordinates are different. Depending on where the face is tracked, the discrepancy in the y values can be significant.
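
To make the comparison concrete, here is a minimal sketch of what I understand denormalization to do: scale the normalized corner coordinates by the frame width and height. The `denormalize` helper and the sample numbers are hypothetical and are not the depthai_sdk implementation. The sketch only shows that the same normalized box, applied to two frames of equal width but different height, yields identical x values and different y values, which is the pattern described above. I have not confirmed that this is the actual cause.

```python
from typing import Sequence, Tuple

Point = Tuple[int, int]


def denormalize(bbox: Sequence[float], frame_shape: Sequence[int]) -> Tuple[Point, Point]:
    """Hypothetical helper, not the SDK's method.

    bbox is (xmin, ymin, xmax, ymax) in the 0..1 range.
    frame_shape follows the NumPy convention (height, width[, channels]).
    """
    height, width = frame_shape[:2]
    xmin, ymin, xmax, ymax = bbox
    top_left = (int(xmin * width), int(ymin * height))
    bottom_right = (int(xmax * width), int(ymax * height))
    return top_left, bottom_right


# Made-up normalized box and frame shapes, for illustration only.
bbox = (0.25, 0.5, 0.75, 0.75)
box_a = denormalize(bbox, (1080, 1920, 3))
box_b = denormalize(bbox, (1200, 1920, 3))

print(f"frame A: {box_a}")
print(f"frame B: {box_b}")
```

Comparing the two printed image shapes in the output is therefore the first thing I would check: if the heights differ, or if one frame is cropped or padded relative to the other, a y-only offset like this would be expected.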