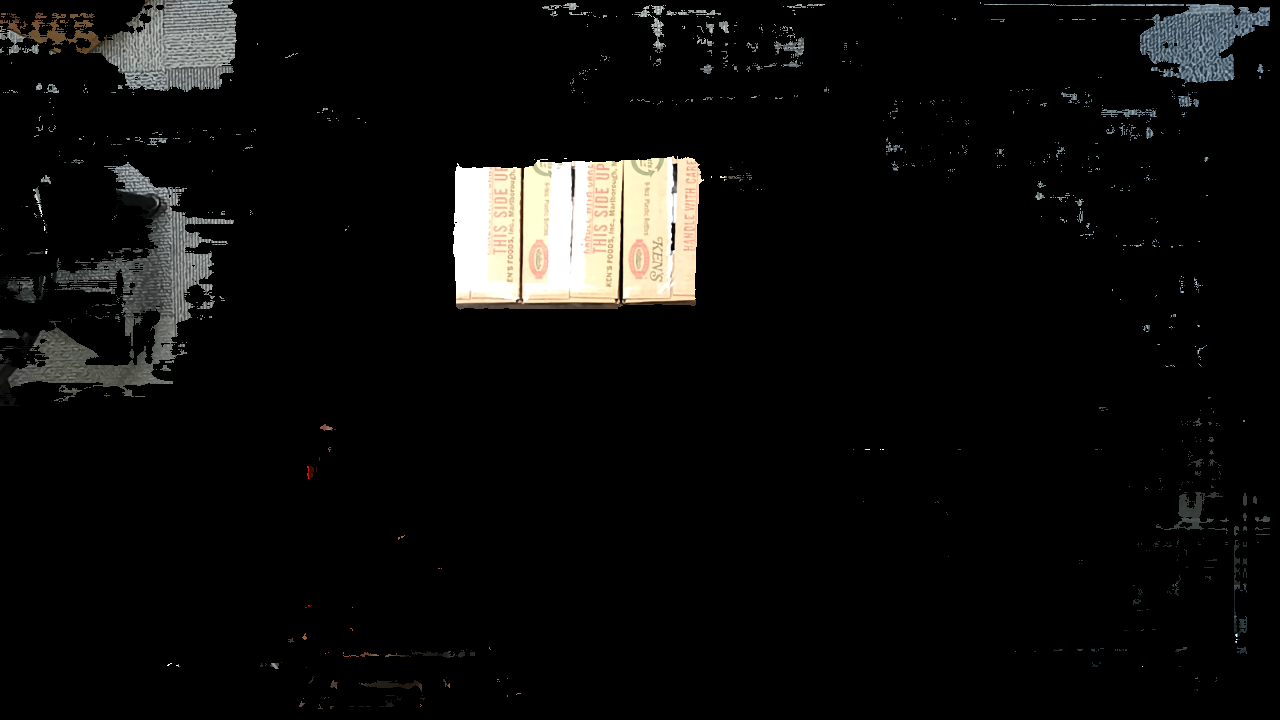

I am trying to read the depth value at each (x, y) pixel of the depth image and then recreate the RGB image so that only pixels whose depth falls within a specified range keep their color. All other pixels in the recreated image are set to black. The goal is to filter out the background reliably and then draw contours on the resulting 2D image. However, the depth values associated with each pixel seem to be inaccurate.
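For reference, here is a minimal NumPy sketch of the depth-range masking described above. It is not the exact code from my program. The function name `mask_by_depth` and its parameters are hypothetical. It assumes the depth frame has already been aligned to the color frame (same resolution, pixel-for-pixel correspondence), that `depth_scale` converts raw depth units to metres, and that a raw value of 0 means "no valid reading", which is common for depth sensors.

```python
import numpy as np


def mask_by_depth(color, depth, depth_scale, near_m, far_m):
    """Black out every color pixel whose depth lies outside [near_m, far_m].

    color: H x W x 3 uint8 image, assumed aligned to the depth frame.
    depth: H x W raw depth values (e.g. uint16), same H x W as color.
    depth_scale: metres per raw depth unit (assumption, e.g. 0.001 for mm).
    Returns (masked_color, in_range_mask).
    """
    if color.shape[:2] != depth.shape:
        raise ValueError("color and depth must be aligned to the same resolution")

    # Convert raw depth units to metres.
    depth_m = depth.astype(np.float32) * depth_scale

    # Assumption: 0 marks an invalid pixel, so it is never "in range".
    valid = depth > 0
    in_range = valid & (depth_m >= near_m) & (depth_m <= far_m)

    # Start from an all-black image and copy only the in-range pixels.
    out = np.zeros_like(color)
    out[in_range] = color[in_range]
    return out, in_range
```

The boolean mask is also returned, so it can be passed (as `uint8`) to a contour finder instead of thresholding the recolored image again.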

When I set the depth range to the correct distance, portions of the boxes are cut off, even though the depth should be the same across the entire box. When I widen the depth range, the floor also shows up, which defeats the purpose of the method. If I narrow the range, the portions of the boxes that were visible before are blacked out while other portions appear instead. I am confused about why the entire box is not detected at the same depth from the cameras. There is also noise from the floor in the surrounding area. Is there any way to tune the program to remove it?