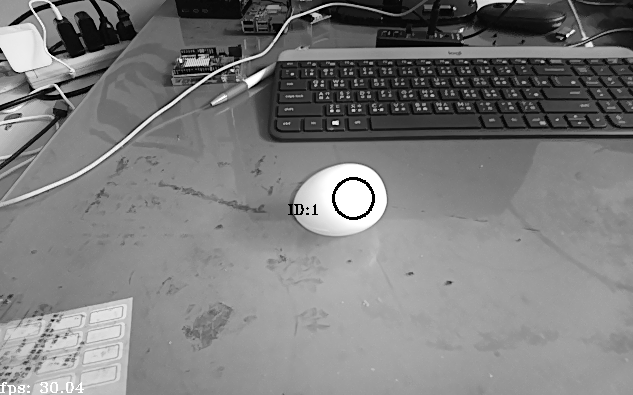

I want to use YOLO for object tracking. After following the example, the tracklets data (`trackletsQueueData`) is empty, while everything else runs correctly. Please help me figure out where the problem is.

```python
import cv2
import depthai as dai
import numpy as np
import time

# Specify the path to your custom model weights
model_weights_path = r"C:\catdog320.blob"

# Load model weights using dai.OpenVINO.Blob
custom_model_blob = dai.OpenVINO.Blob(model_weights_path)

dim = next(iter(custom_model_blob.networkInputs.values())).dims
output_name, output_tensor = next(iter(custom_model_blob.networkOutputs.items()))
# Each YOLO output row holds 4 box coordinates + 1 objectness score + one score per class
numClasses = output_tensor.dims[2] - 5

# Create pipeline
pipeline = dai.Pipeline()
res = dai.MonoCameraProperties.SensorResolution.THE_400_P

# Define camera and outputs
monoL = pipeline.create(dai.node.MonoCamera)
monoL.setResolution(res)
monoL.setFps(30)
monoL.setNumFramesPool(24)

manip = pipeline.create(dai.node.ImageManip)
manipOut = pipeline.create(dai.node.XLinkOut)
detectionNetwork = pipeline.create(dai.node.YoloDetectionNetwork)
xoutNN = pipeline.create(dai.node.XLinkOut)

manipOut.setStreamName("flood-left")
xoutNN.setStreamName("nn")

# Create object tracker
objectTracker = pipeline.create(dai.node.ObjectTracker)

# Create output queue for tracker
xoutTracker = pipeline.create(dai.node.XLinkOut)
xoutTracker.setStreamName("tracklets")

# Script node that turns on the IR flood light and forwards the left frames
script = pipeline.create(dai.node.Script)
script.setProcessor(dai.ProcessorType.LEON_CSS)
script.setScript("""
floodBright = 0.1
node.warn(f'IR drivers detected: {str(Device.getIrDrivers())}')

while True:
    event = node.io['event'].get()
    Device.setIrFloodLightIntensity(floodBright)
    frameL = node.io['frameL'].get()
    node.io['floodL'].send(frameL)
""")

# Model-specific settings
detectionNetwork.setBlob(custom_model_blob)
detectionNetwork.setConfidenceThreshold(0.7)

# YOLO-specific parameters
detectionNetwork.setNumClasses(numClasses)
detectionNetwork.setCoordinateSize(4)
detectionNetwork.setAnchors([])
detectionNetwork.setAnchorMasks({})
detectionNetwork.setIouThreshold(0.5)
detectionNetwork.input.setBlocking(False)

# Convert the grayscale frame into the NN-acceptable form
manip.initialConfig.setResize(320, 320)
# The NN model expects BGR input. By default, ImageManip's output type is the same as its input (GRAY8 here)
manip.initialConfig.setFrameType(dai.ImgFrame.Type.BGR888p)

# Link nodes
monoL.out.link(manip.inputImage)
manip.out.link(detectionNetwork.input)
detectionNetwork.out.link(xoutNN.input)
monoL.frameEvent.link(script.inputs["event"])
monoL.out.link(script.inputs["frameL"])
script.outputs["floodL"].link(manipOut.input)

# Link tracker
detectionNetwork.out.link(objectTracker.inputDetections)
objectTracker.out.link(xoutTracker.input)

frame_count = 0
midpoints = []


def frameNorm(frame, bbox):
    # Map normalized <0..1> bbox coordinates to pixel coordinates of `frame`
    normVals = np.full(len(bbox), frame.shape[0])
    normVals[::2] = frame.shape[1]
    return (np.clip(np.array(bbox), 0, 1) * normVals).astype(int)


# Connect to device and start pipeline
with dai.Device(pipeline) as device:
    startTime = time.monotonic()
    counter = 0
    fps = 0
    frame = None
    qRight = device.getOutputQueue("flood-left", maxSize=4, blocking=False)
    qDet = device.getOutputQueue("nn", maxSize=4, blocking=False)
    qTracker = device.getOutputQueue("tracklets", maxSize=4, blocking=False)
    detections = []

    while True:
        counter += 1
        current_time1 = time.monotonic()
        if (current_time1 - startTime) > 1:
            fps = counter / (current_time1 - startTime)
            counter = 0
            startTime = current_time1

        imageQueueData = qRight.tryGet()
        detectQueueData = qDet.tryGet()
        trackletsQueueData = qTracker.tryGet()

        # Local time as MM/DD : HH : MM : SS
        time_text = time.strftime("%m/%d : %H : %M : %S")

        if imageQueueData is not None:
            frame = imageQueueData.getCvFrame()
            frame = cv2.cvtColor(frame, cv2.COLOR_GRAY2BGR)
            cv2.putText(frame, time_text, (420, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 255, 0), 2)

        if detectQueueData is not None:
            detections = detectQueueData.detections

        # Only draw once at least one frame has arrived
        if frame is not None:
            cv2.putText(frame, "fps: {:.2f}".format(fps), (2, frame.shape[0] - 4), cv2.FONT_HERSHEY_TRIPLEX, 0.5, (255, 255, 0), 1)

            for detection in detections:
                bbox = frameNorm(frame, (detection.xmin, detection.ymin, detection.xmax, detection.ymax))
                center = (int((bbox[0] + bbox[2]) / 2), int((bbox[1] + bbox[3]) / 2))
                cv2.circle(frame, center, 25, (0, 255, 0), 2)

            if trackletsQueueData is not None:
                for tracklet in trackletsQueueData.tracklets:
                    roi = tracklet.roi.denormalize(frame.shape[1], frame.shape[0])
                    xmin, ymin = int(roi.topLeft().x), int(roi.topLeft().y)
                    xmax, ymax = int(roi.bottomRight().x), int(roi.bottomRight().y)
                    track_id = tracklet.id
                    label = tracklet.label
                    cv2.putText(frame, f"ID: {track_id}", (xmin, ymin - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 1)
                    cv2.rectangle(frame, (xmin, ymin), (xmax, ymax), (0, 255, 0), 2)

            cv2.putText(frame, f"Detections: {len(detections)}", (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (255, 255, 0), 2)
            cv2.imshow("ir detect", frame)

        if cv2.waitKey(1) == ord("q"):
            break

cv2.destroyAllWindows()
```

Thanks,

Li