Hello everyone, I would like to report that a model exported from PyTorch produces an output with the wrong dimensions after it is deployed on DepthAI.

Below are the steps that led me to this issue. First, I export a model from PyTorch to an ONNX file. The model is a simple ResNet whose output has 1000 elements, because it predicts one score for each of the 1000 classes of the ImageNet dataset.

    import torch
    import torchvision

    weights = torchvision.models.ResNet50_Weights.DEFAULT
    model = torchvision.models.resnet50(weights=weights).eval()

    dummy_input = torch.randn(1, 3, 224, 224)
    model_name = "resnet50"

    torch.onnx.export(
        model,
        dummy_input,
        f"{model_name}.onnx",
        do_constant_folding=True,
        input_names=["input"],
        output_names=["output"],
        opset_version=12,
    )

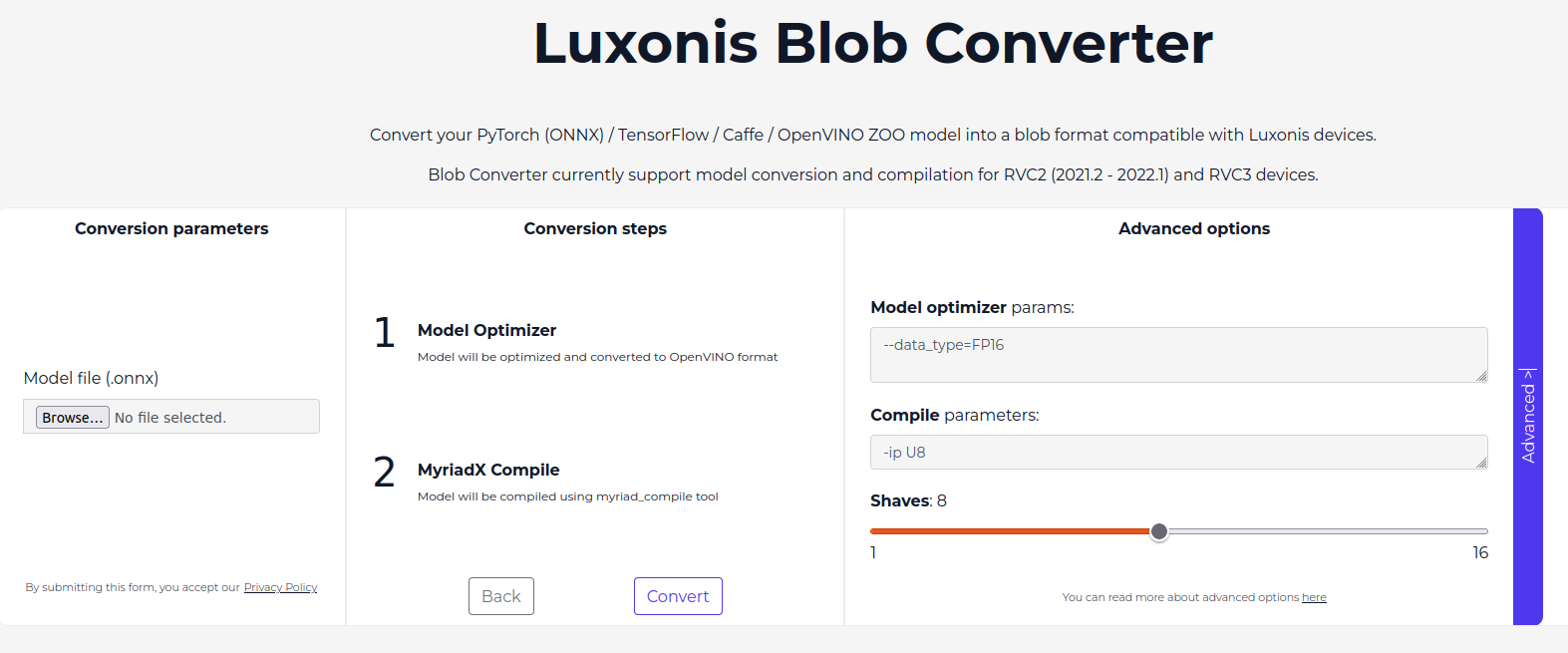

I use ONNX simplifier to simplify the model, and then I convert it to a MyriadX blob with the Luxonis blob converter (I ignore the normalization parameters because they don't matter for this issue).

I deploy the model in DepthAI with the following code; however, the model produces an output of 2000 elements instead of 1000.

    import cv2
    import depthai as dai
    import numpy as np

    # Nodes
    pipeline = dai.Pipeline()
    camRgb = pipeline.create(dai.node.ColorCamera)
    nnOut = pipeline.create(dai.node.XLinkOut)
    nn = pipeline.create(dai.node.NeuralNetwork)

    # Properties
    nn.setBlobPath("densenet121.blob")
    nnOut.setStreamName("nn")
    camRgb.setPreviewSize(224, 224)
    camRgb.setInterleaved(False)  # False: planar (channel-first) frames, as in PyTorch
    nn.input.setBlocking(False)

    # Links
    camRgb.preview.link(nn.input)
    nn.out.link(nnOut.input)

    with dai.Device(pipeline) as device:
        qDet = device.getOutputQueue(name="nn", maxSize=4, blocking=False)
        inDet = qDet.get()
        print(inDet.getData())

inDet.getData() should be an array of 1000 elements, but it has shape (2000,) instead.
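
One sanity check that may be worth running on these numbers: if the blob stores its output as 16-bit floats (FP16) and getData() hands back the raw buffer one byte per element, then 1000 values would occupy exactly 2000 bytes. Both of those are assumptions here, not something confirmed in this post. The sketch below uses only NumPy with made-up scores (no device involved) to show how the same buffer reads as 2000 bytes or as 1000 FP16 values.

```python
import numpy as np

NUM_CLASSES = 1000

# Stand-in for the network output: 1000 scores stored as FP16.
# (Assumption: the blob emits FP16; these values are made up.)
scores_fp16 = np.linspace(0.0, 1.0, NUM_CLASSES).astype(np.float16)

# Stand-in for what getData() might return: the same buffer as raw uint8 bytes.
raw_bytes = np.frombuffer(scores_fp16.tobytes(), dtype=np.uint8)
print(raw_bytes.shape)  # (2000,)

# Reinterpret every pair of bytes as one FP16 value.
decoded = raw_bytes.view(np.float16)
print(decoded.shape)  # (1000,)
```

If that assumption holds on the device, the same reinterpretation would be `np.array(inDet.getData(), dtype=np.uint8).view(np.float16)`. The depthai 2.x NNData message also offers `getFirstLayerFp16()`, which should return the decoded values directly.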