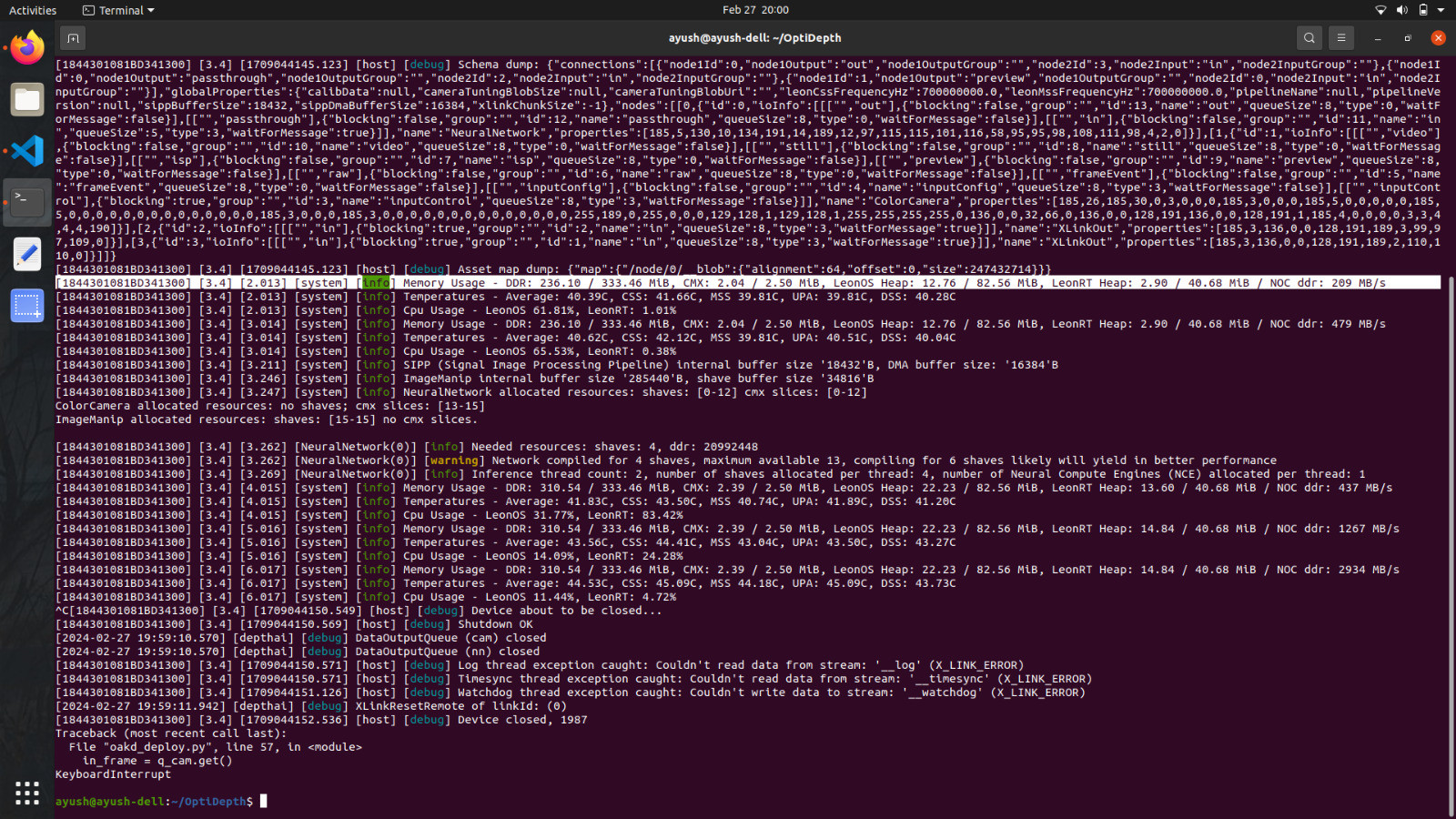

Is there a limit on the size of a blob that can be deployed on the OAK-D Pro? Using a pipeline configuration, I can deploy a blob of ~250 MB, but I get the error below for one of 490 MB. Both blobs are custom CNN models. Or is this an issue with the architecture of the second model? I used blob converter with the default configuration to generate the blobs from ONNX.

terminate called after throwing an instance of 'dai::XLinkWriteError'

what(): Couldn't write data to stream: '__stream_asset_storage' (X_LINK_ERROR)

terminate called recursively

Aborted (core dumped)
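
To help narrow down whether size alone is the problem, here is a minimal sketch for comparing the on-disk size of the two blobs before they are deployed. It uses only the Python standard library and does not touch the device. The helper names `blob_size_mb` and `report_blobs` and the `warn_above_mb` parameter are hypothetical, and the threshold is only a value you pick yourself for comparison, not a documented device limit.

```python
import os
import sys


def blob_size_mb(path):
    """Return the on-disk size of a file in megabytes (1 MB = 10**6 bytes)."""
    return os.path.getsize(path) / 1e6


def report_blobs(paths, warn_above_mb=None):
    """Print each blob's size and return the paths larger than warn_above_mb.

    warn_above_mb is a user-chosen threshold (an assumption for illustration),
    not an official limit of the device.
    """
    too_large = []
    for path in paths:
        size = blob_size_mb(path)
        print(f"{path}: {size:.1f} MB")
        if warn_above_mb is not None and size > warn_above_mb:
            too_large.append(path)
    return too_large


if __name__ == "__main__":
    # Usage: python check_blobs.py model_a.blob model_b.blob
    report_blobs(sys.argv[1:])
```

If the larger blob fails regardless of its architecture while smaller ones load, that points toward a size or memory constraint rather than an unsupported layer.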