While doing some tests I noticed that if I try to rectify the raw left/right images of my OAK-D camera using OpenCV leads to a different result than the rectified streams from the StereoDepth node. I am using the camera's factory calibration parameters extracted using DepthAI, so I would assume that the results should be the same. Below you can see, in this order, the raw left image, the left image rectified by DepthAI, and the left image rectified by me using OpenCV.

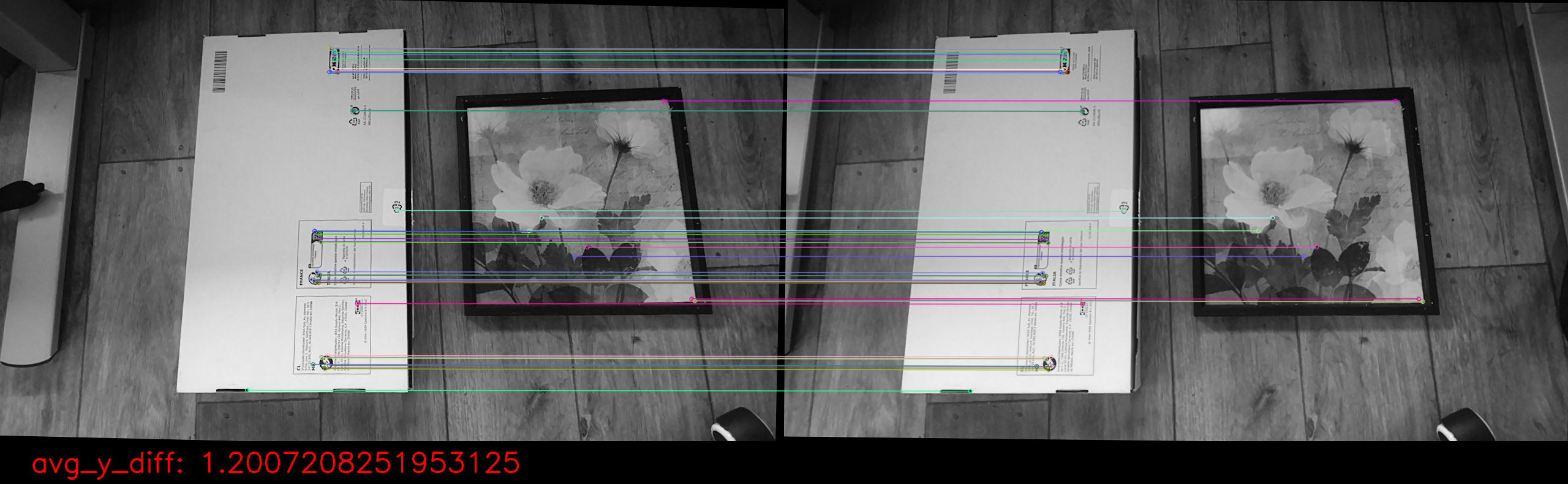

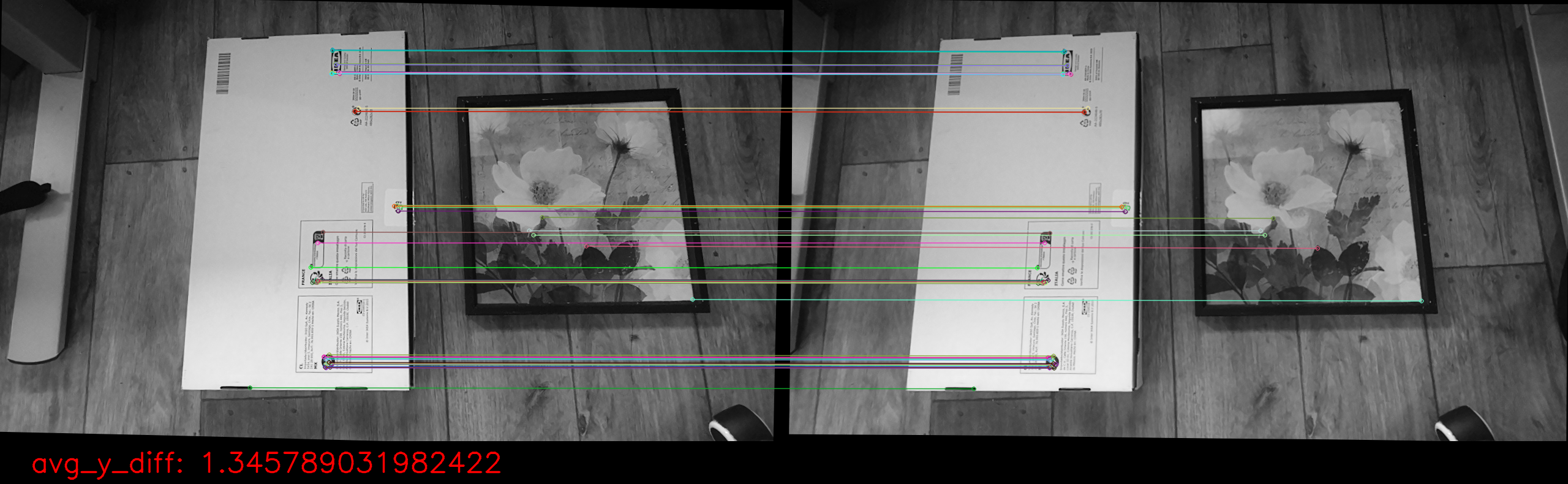

To evaluate the quality of the rectification, I extracted ORB features on each left/right image pair and calculated the average y difference between left/right keypoints of the top 50 matches. Below I plot these top 50 matches and show the average y-difference for the raw left-right image pair, the left-right image pair rectified by DepthAI's StereoDepth node, and the left-right image pair rectified by me using OpenCV. DepthAI's rectification seems 0.145 pixels more accurate than my own

Here's the code I'm using the get the images and the OAK-D's calibration data:

#!/usr/bin/env python3

import cv2, json

import numpy as np

import depthai as dai

resolution = (1280, 720)

def save_intrinsics(device):

calibData = device.readCalibration()

# Get intrinsics for the left and right camera

M_left = calibData.getCameraIntrinsics(dai.CameraBoardSocket.CAM_B, dai.Size2f(resolution[0], resolution[1]))

M_right = calibData.getCameraIntrinsics(dai.CameraBoardSocket.CAM_C, dai.Size2f(resolution[0], resolution[1]))

# Get distortion coefficients for the left and right camera

D_left = calibData.getDistortionCoefficients(dai.CameraBoardSocket.CAM_B)

D_right = calibData.getDistortionCoefficients(dai.CameraBoardSocket.CAM_C)

# Get extrinsics for the left and right camera with respect to color camera

lr_extrinsics = np.array(calibData.getCameraExtrinsics(dai.CameraBoardSocket.CAM_B, dai.CameraBoardSocket.CAM_C))

lr_extrinsics[:3, 3] /= 100 # Convert translation from cm to m

calib_data_dict = {

"resolution": resolution,

"M_left": M_left,

"D_left": D_left,

"M_right": M_right,

"D_right": D_right,

"lr_extrinsics": lr_extrinsics.tolist(),

}

# Save calib_data_dict to manufacturer_calib_data.json file

with open("calib_data.json", "w") as file:

json.dump(calib_data_dict, file, indent=4)

# Create pipeline

pipeline = dai.Pipeline()

# Define the pipeline nodes

camLeft = pipeline.createMonoCamera()

camRight = pipeline.createMonoCamera()

camStereo = pipeline.createStereoDepth()

# Define output streams

xoutLeft = pipeline.createXLinkOut()

xoutLeft.setStreamName("leftRaw")

xoutRight = pipeline.createXLinkOut()

xoutRight.setStreamName("rightRaw")

xoutLeftRect = pipeline.createXLinkOut()

xoutLeftRect.setStreamName("leftRect")

xoutRightRect = pipeline.createXLinkOut()

xoutRightRect.setStreamName("rightRect")

# Define depth camera properties

camLeft.setBoardSocket(dai.CameraBoardSocket.CAM_B)

camLeft.setResolution(dai.MonoCameraProperties.SensorResolution.THE_720_P)

camLeft.setFps(5)

camRight.setBoardSocket(dai.CameraBoardSocket.CAM_C)

camRight.setResolution(dai.MonoCameraProperties.SensorResolution.THE_720_P)

camRight.setFps(5)

# Linking

camLeft.out.link(xoutLeft.input)

camRight.out.link(xoutRight.input)

camLeft.out.link(camStereo.left)

camRight.out.link(camStereo.right)

camStereo.rectifiedLeft.link(xoutLeftRect.input)

camStereo.rectifiedRight.link(xoutRightRect.input)

# Connect to device and start pipeline

latestPacket = {}

latestPacket["leftRaw"] = None

latestPacket["rightRaw"] = None

latestPacket["leftRect"] = None

latestPacket["rightRect"] = None

stored_images = set(latestPacket.keys())

leftWindowName = "left"

rightWindowName = "right"

cv2.namedWindow(leftWindowName)

cv2.namedWindow(rightWindowName)

idx = -1

with dai.Device(pipeline) as device:

save_intrinsics(device)

while True:

for key in latestPacket.keys():

latestPacket[key] = None

queueEvents = device.getQueueEvents(list(latestPacket.keys()))

for queueName in queueEvents:

packets = device.getOutputQueue(queueName).tryGetAll()

if len(packets) > 0:

latestPacket[queueName] = packets[-1]

if latestPacket["leftRaw"] is not None:

frameLeftRaw = latestPacket["leftRaw"].getCvFrame()

cv2.imshow(leftWindowName, frameLeftRaw)

if "leftRaw" not in stored_images:

stored_images.add("leftRaw")

cv2.imwrite(f"leftRaw_{idx:02}.png", frameLeftRaw)

print("Saved left image")

if latestPacket["rightRaw"] is not None:

frameRightRaw = latestPacket["rightRaw"].getCvFrame()

cv2.imshow(rightWindowName, frameRightRaw)

if "rightRaw" not in stored_images:

stored_images.add("rightRaw")

cv2.imwrite(f"rightRaw_{idx:02}.png", frameRightRaw)

print("Saved right image")

if latestPacket["leftRect"] is not None:

frameLeftRect = latestPacket["leftRect"].getCvFrame()

if "leftRect" not in stored_images:

stored_images.add("leftRect")

cv2.imwrite(f"leftRect_{idx:02}.png", frameLeftRect)

print("Saved left image")

if latestPacket["rightRect"] is not None:

frameRightRect = latestPacket["rightRect"].getCvFrame()

if "rightRect" not in stored_images:

stored_images.add("rightRect")

cv2.imwrite(f"rightRect_{idx:02}.png", frameRightRect)

print("Saved right image")

if cv2.waitKey(1) == ord('r'):

stored_images = set()

idx += 1

if cv2.waitKey(1) == ord('q'):

cv2.destroyAllWindows()

break

Here's the code I'm using the perform the rectification and evaluate it:

import json

import numpy as np

import matplotlib.pyplot as plt

import cv2

from glob import glob

def rectify_images(img1, img2, mtx1, dist1, mtx2, dist2, T):

R = T[:3, :3]

t = T[:3, 3]

R1, R2, P1, P2, Q, _, _ = cv2.stereoRectify(mtx1, dist1, mtx2, dist2, img1.shape[:2][::-1], R, t)

map1x, map1y = cv2.initUndistortRectifyMap(mtx1, dist1, R1, P1, img1.shape[:2][::-1], cv2.CV_32FC1)

map2x, map2y = cv2.initUndistortRectifyMap(mtx2, dist2, R2, P2, img2.shape[:2][::-1], cv2.CV_32FC1)

img1_rectified = cv2.remap(img1, map1x, map1y, cv2.INTER_LINEAR)

img2_rectified = cv2.remap(img2, map2x, map2y, cv2.INTER_LINEAR)

return img1_rectified, img2_rectified

def evaluate_registration_with_feature_matching(img_left, img_right, outfile = None, n_matches=50):

# Create ORB object

orb = cv2.ORB_create()

# Find keypoints and descriptors in the left image

kp1, des1 = orb.detectAndCompute(img_left, None)

# Find keypoints and descriptors in the right image

kp2, des2 = orb.detectAndCompute(img_right, None)

# Create matcher

matcher = cv2.BFMatcher()

# Match features

matches = matcher.match(des1, des2)

# Sort matches by distance

matches = sorted(matches, key=lambda x: x.distance)

# Draw matches

img_matches = cv2.drawMatches(img_left, kp1, img_right, kp2, matches[:n_matches], None, flags=cv2.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS)

avg_y_diff = 0

for match in matches[:n_matches]:

avg_y_diff += np.abs(kp1[match.queryIdx].pt[1] - kp2[match.trainIdx].pt[1])

avg_y_diff /= n_matches

black_patch = np.zeros((70, img_matches.shape[1], 3), dtype=np.uint8)

out_img = np.concatenate((img_matches, black_patch), axis=0)

font = cv2.FONT_HERSHEY_SIMPLEX

org = (50, img_matches.shape[0]+50)

fontScale = 1.5

color = (255, 0, 0)

thickness = 2

out_img = cv2.putText(out_img, f'avg_y_diff: {avg_y_diff}', org, font,

fontScale, color, thickness, cv2.LINE_AA)

if outfile is None:

plt.figure(figsize=(20, 15))

plt.imshow(out_img)

plt.axis('off')

plt.show()

else:

out_img = cv2.cvtColor(out_img, cv2.COLOR_BGR2RGB)

cv2.imwrite(outfile, out_img)

if __name__ == "__main__":

left_imgs_paths = sorted(glob("leftRaw_*.png"))

right_imgs_paths = sorted(glob("rightRaw_*.png"))

leftRect_imgs_paths = sorted(glob("leftRect_*.png"))

rightRect_imgs_paths = sorted(glob("rightRect_*.png"))

with open("calib_data.json", "r") as file:

calib_data = json.load(file)

img_idx = 0

img_leftRaw = cv2.imread(left_imgs_paths[img_idx])

img_rightRaw = cv2.imread(right_imgs_paths[img_idx])

img_leftRect = cv2.imread(leftRect_imgs_paths[img_idx])

img_rightRect = cv2.imread(rightRect_imgs_paths[img_idx])

img_leftRect_ours, img_rightRect_ours = rectify_images(

img_leftRaw,

img_rightRaw,

np.array(calib_data["M_left"]),

np.array(calib_data["D_left"]),

np.array(calib_data["M_right"]),

np.array(calib_data["D_right"]),

np.array(calib_data["lr_extrinsics"]))

cv2.imwrite(f"leftRect_{img_idx:02}_ours.jpg", img_leftRect_ours)

cv2.imwrite(f"rightRect_{img_idx:02}_ours.jpg", img_rightRect_ours)

evaluate_registration_with_feature_matching(img_leftRaw, img_rightRaw, outfile = "raw.png", n_matches=50)

evaluate_registration_with_feature_matching(img_leftRect, img_rightRect, outfile = "depthai.png", n_matches=50)

evaluate_registration_with_feature_matching(img_leftRect_ours, img_rightRect_ours, outfile = "ours.png", n_matches=50)

I have also tried to perform the registration using cv2.warpPerspective, as shown in this example, but the difference in results with respect to StereoDepth's rectified streams is even bigger.

Does anyone have any insight into why I can't replicate StereoDepth's rectification results despite using what should be the same calibration parameters?