Hi DepthAI Backers!

Thanks again for all the continued support and interest in the platform.

We've been hard at work adding a TON of DepthAI functionality. You can track a lot of the progress in the following GitHub projects:

As you can see, there are a TON of features we have released since the last update. Let's highlight a few below:

RGB-Depth Alignment

We have the calibration stage working now, and future DepthAI builds (after this writing) will have RGB-right calibration performed. An example with semantic segmentation is shown below:

The right grayscale camera is shown on the right and the RGB camera is shown on the left. You can see the cameras have slightly different aspect ratios and fields of view, but the semantic segmentation is still properly applied. For more details, and to track progress, see our GitHub issue on this feature here: https://github.com/luxonis/depthai/issues/284

Subpixel Capability

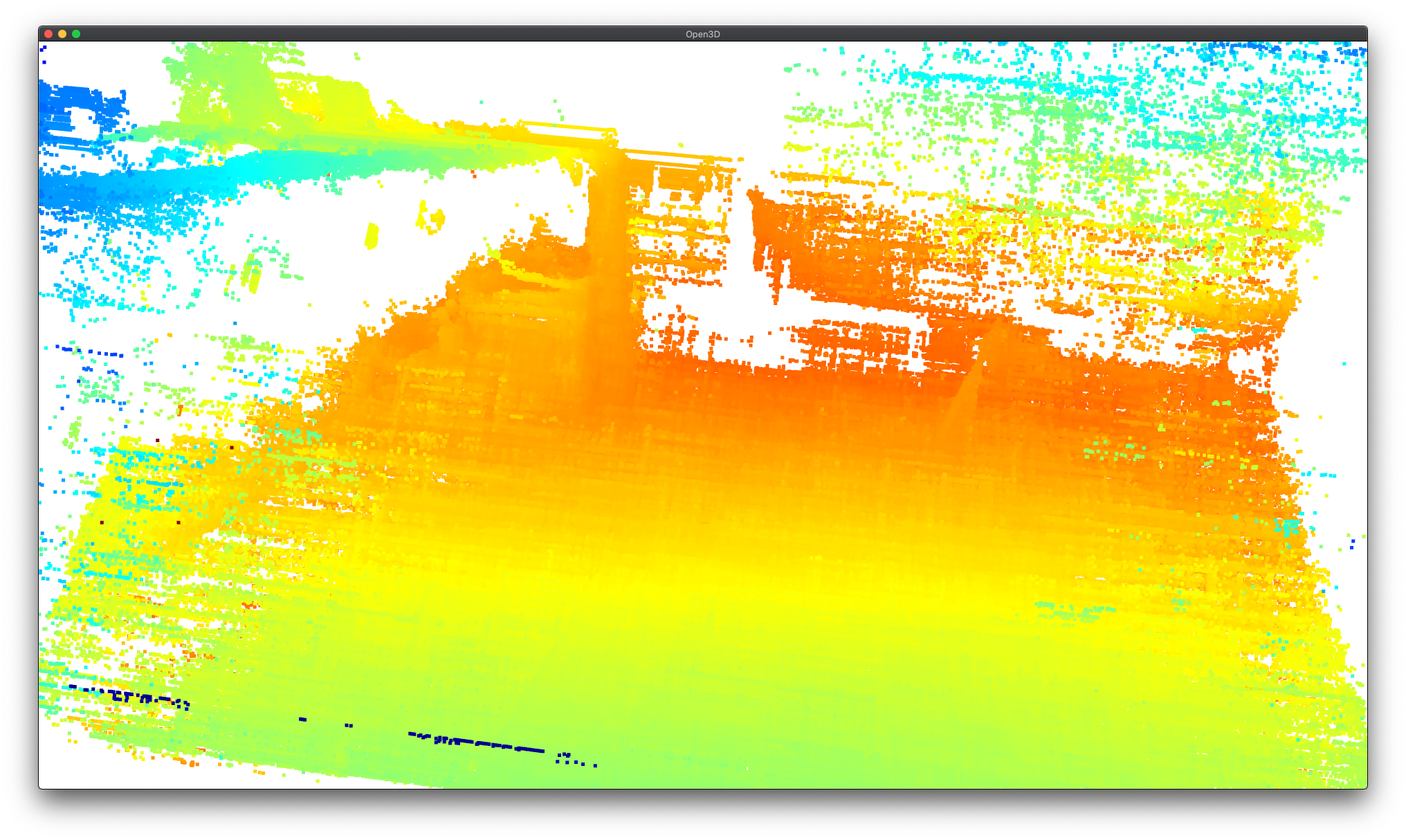

DepthAI now supports subpixel disparity. To try it out yourself, use the example here. And see below for a quick test of it at my desk:

Host Side Depth Capability

We also now support depth estimation from images sent from the host. This is very convenient for test and validation, since stored images can be used. Along with this, we now support outputting the rectified-left and rectified-right images, so they can be stored and later used with DepthAI's depth engine in various CV pipelines.

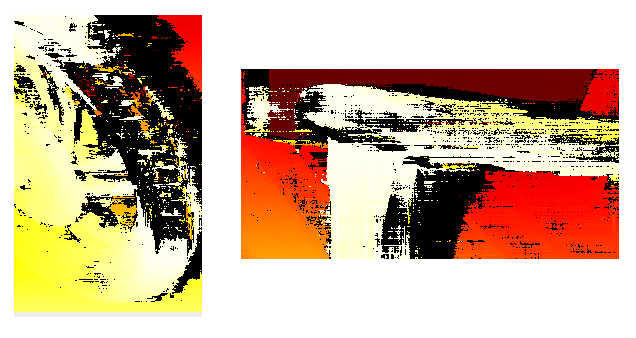

See here for how to do this with your DepthAI model. And see some examples below from the Middlebury stereo dataset:

The bad-looking areas are caused by objects being too close to the camera for the given baseline, so their disparity exceeds the 96-pixel maximum search distance for disparity matching (a StereoDepth engine constraint):

These areas will be improved with extended = True. However, Extended Disparity and Subpixel cannot both be enabled at the same time.
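To make the close-range limit concrete, here is a rough sketch of the standard pinhole stereo relation, depth = focal length × baseline / disparity. The focal length and baseline below are hypothetical numbers chosen for illustration, not DepthAI calibration values, and the doubled search range and the 1/32-pixel subpixel step are assumptions used only to show the trend.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo relation: depth (m) = focal length (px) * baseline (m) / disparity (px)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def min_depth(focal_px: float, baseline_m: float, max_disparity_px: float) -> float:
    """Closest measurable depth: anything nearer needs more disparity than the search range allows."""
    return depth_from_disparity(focal_px, baseline_m, max_disparity_px)


def depth_step(focal_px: float, baseline_m: float, disparity_px: float, step_px: float) -> float:
    """Change in depth when the disparity grows by one quantization step."""
    near = depth_from_disparity(focal_px, baseline_m, disparity_px + step_px)
    far = depth_from_disparity(focal_px, baseline_m, disparity_px)
    return far - near


# Hypothetical camera parameters (assumptions, not measured values).
FOCAL_PX = 860.0
BASELINE_M = 0.075

# 96 px is the search limit quoted above; 192 px is an assumed wider range for comparison.
standard_min = min_depth(FOCAL_PX, BASELINE_M, 96)
wider_min = min_depth(FOCAL_PX, BASELINE_M, 192)

# Depth quantization at a small disparity (a far object), whole-pixel vs. an assumed 1/32 px step.
whole_pixel_step = depth_step(FOCAL_PX, BASELINE_M, 10, 1.0)
subpixel_step = depth_step(FOCAL_PX, BASELINE_M, 10, 1.0 / 32)

print(f"min depth, 96 px search:  {standard_min:.3f} m")
print(f"min depth, 192 px search: {wider_min:.3f} m")
print(f"depth step at 10 px, whole-pixel: {whole_pixel_step:.3f} m")
print(f"depth step at 10 px, 1/32 px:     {subpixel_step:.3f} m")
```

The takeaway is that a wider disparity search helps with near objects, while finer disparity steps help with far ones, which is why the two modes target different parts of the scene.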

RGB Focus, Exposure, and Sensitivity Control

We also added manual focus, exposure, and sensitivity controls, along with examples of how to use them. See here for how to use these controls.

Here is an example of increasing the exposure time:

And here is setting it quite low:

It's actually fairly remarkable how well the neural network still detects me as a person even when the image is this dark.

Pure Embedded DepthAI

In our last update (here), we mentioned that we were making a pure-embedded DepthAI.

We made it. Here's the initial concept:

And here it is working!

And here it is on a wrist to give a reference of its size:

And eProsima even got microROS running on this with DepthAI, exporting results over WiFi back to RViz:

RPi Compute Module 4

We're quite excited about this one. We're fairly close to ordering it. Some initial views in Altium below:

There's a bunch more, but we'll leave you with our recent interview with Chris Gammell on The Amp Hour!

https://theamphour.com/517-depth-and-ai-with-brandon-gilles-and-brian-weinstein/

Cheers,

Brandon & The Luxonis Team