Hi @jakaskerl, thanks for the insight !

I need to be able to stereo rectify the color stereo pair, I would like to have the same output as the stereoDepth rectified streams, but in color 🙂

FYI I have two different setups with two FFC-4Ps:

- Two OV9282 monochrome cameras (wide)

- Two IMX296 with fisheye lenses

Trying to replicate the output of the stereoDepth rectified streams, I modified the get_mesh() function given here

def get_mesh(cam_name, resolution, fisheye, name_to_socket):

left_socket = get_socket_from_name("left", name_to_socket)

right_socket = get_socket_from_name("right", name_to_socket)

calib = dai.Device().readCalibration()

left_K = np.array(

calib.getCameraIntrinsics(left_socket, resolution[0], resolution[1])

)

left_D = np.array(calib.getDistortionCoefficients(left_socket))

# R1 = np.array(calib.getStereoLeftRectificationRotation())

right_K = np.array(

calib.getCameraIntrinsics(right_socket, resolution[0], resolution[1])

)

right_D = np.array(calib.getDistortionCoefficients(right_socket))

# R2 = np.array(calib.getStereoRightRectificationRotation())

extrinsics = np.array(calib.getCameraExtrinsics(left_socket, right_socket))

if fisheye:

R1, R2, P1, P2, Q = cv2.fisheye.stereoRectify(

left_K,

left_D,

right_K,

right_D,

resolution,

extrinsics[:3, :3],

extrinsics[:3, 3],

flags=0,

)

mapXL, mapYL = cv2.fisheye.initUndistortRectifyMap(

left_K, left_D, R1, P1, resolution, cv2.CV_32FC1

)

mapXR, mapYR = cv2.fisheye.initUndistortRectifyMap(

right_K, right_D, R2, P2, resolution, cv2.CV_32FC1

)

else:

R1, R2, P1, P2, Q, _, _ = cv2.stereoRectify(

left_K,

left_D,

right_K,

right_D,

resolution,

extrinsics[:3, :3],

extrinsics[:3, 3],

flags=0,

)

mapXL, mapYL = cv2.initUndistortRectifyMap(

left_K, left_D, R1, P1, resolution, cv2.CV_32FC1

)

mapXR, mapYR = cv2.initUndistortRectifyMap(

right_K, right_D, R2, P2, resolution, cv2.CV_32FC1

)

mapX = mapXL if cam_name == "left" else mapXR

mapY = mapYL if cam_name == "left" else mapYR

meshCellSize = 16

mesh0 = []

# Creates subsampled mesh which will be loaded on to device to undistort the image

for y in range(mapX.shape[0] + 1): # iterating over height of the image

if y % meshCellSize == 0:

rowLeft = []

for x in range(mapX.shape[1]): # iterating over width of the image

if x % meshCellSize == 0:

if y == mapX.shape[0] and x == mapX.shape[1]:

rowLeft.append(mapX[y - 1, x - 1])

rowLeft.append(mapY[y - 1, x - 1])

elif y == mapX.shape[0]:

rowLeft.append(mapX[y - 1, x])

rowLeft.append(mapY[y - 1, x])

elif x == mapX.shape[1]:

rowLeft.append(mapX[y, x - 1])

rowLeft.append(mapY[y, x - 1])

else:

rowLeft.append(mapX[y, x])

rowLeft.append(mapY[y, x])

if (mapX.shape[1] % meshCellSize) % 2 != 0:

rowLeft.append(0)

rowLeft.append(0)

mesh0.append(rowLeft)

mesh0 = np.array(mesh0)

meshWidth = mesh0.shape[1] // 2

meshHeight = mesh0.shape[0]

mesh0.resize(meshWidth * meshHeight, 2)

mesh = list(map(tuple, mesh0))

return mesh, meshWidth, meshHeight

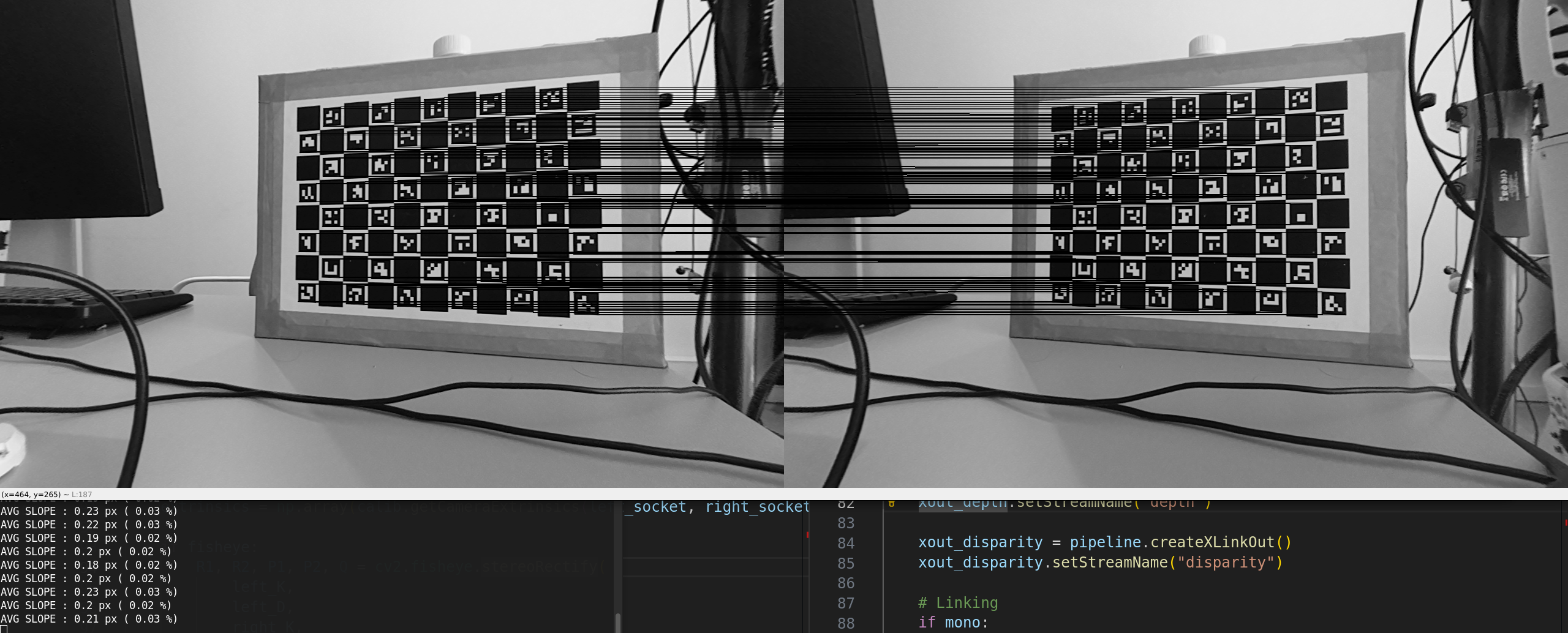

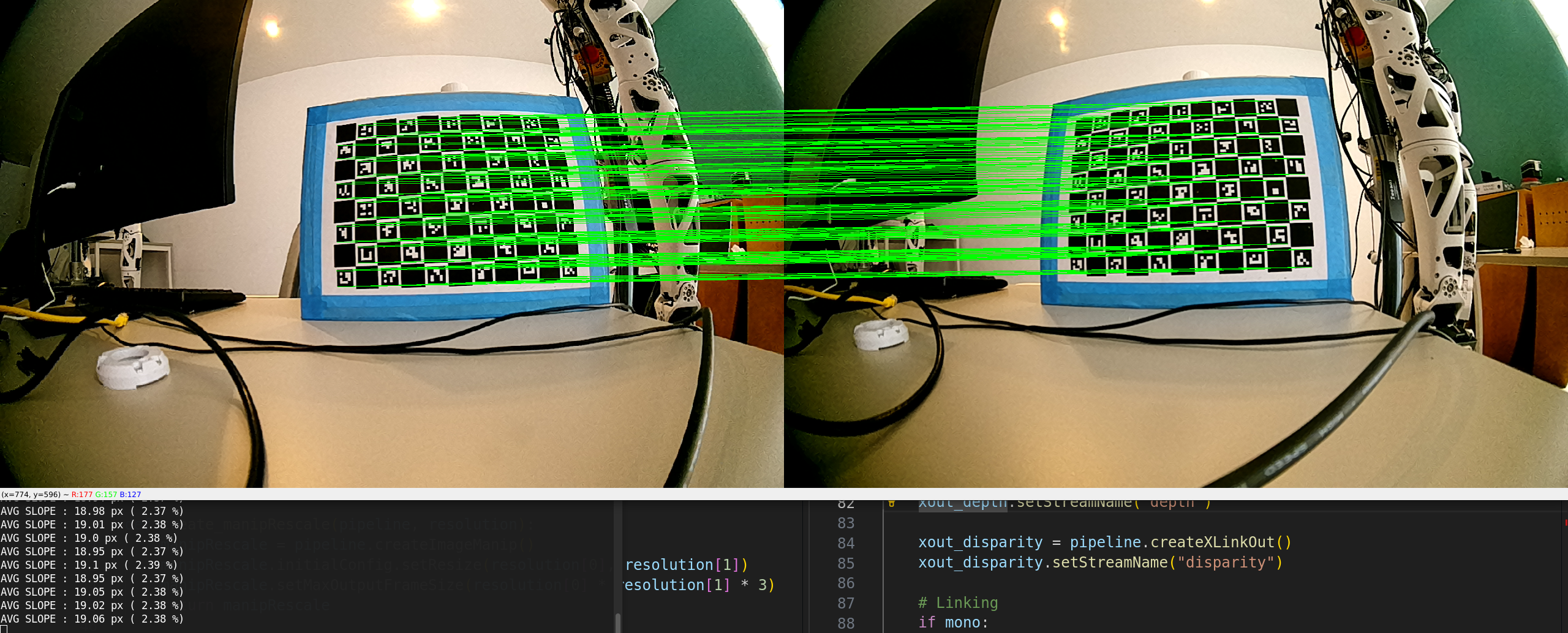

I think I am on the right track, as on the OV9282 setup I get very close results:

From stereoDepth node

With get_mesh()

You can see the image is a little bit more zoomed in coming out of the stereoDepth node, and the epilines scores are better (0.03% vs 0.1%)

This would be acceptable to me.

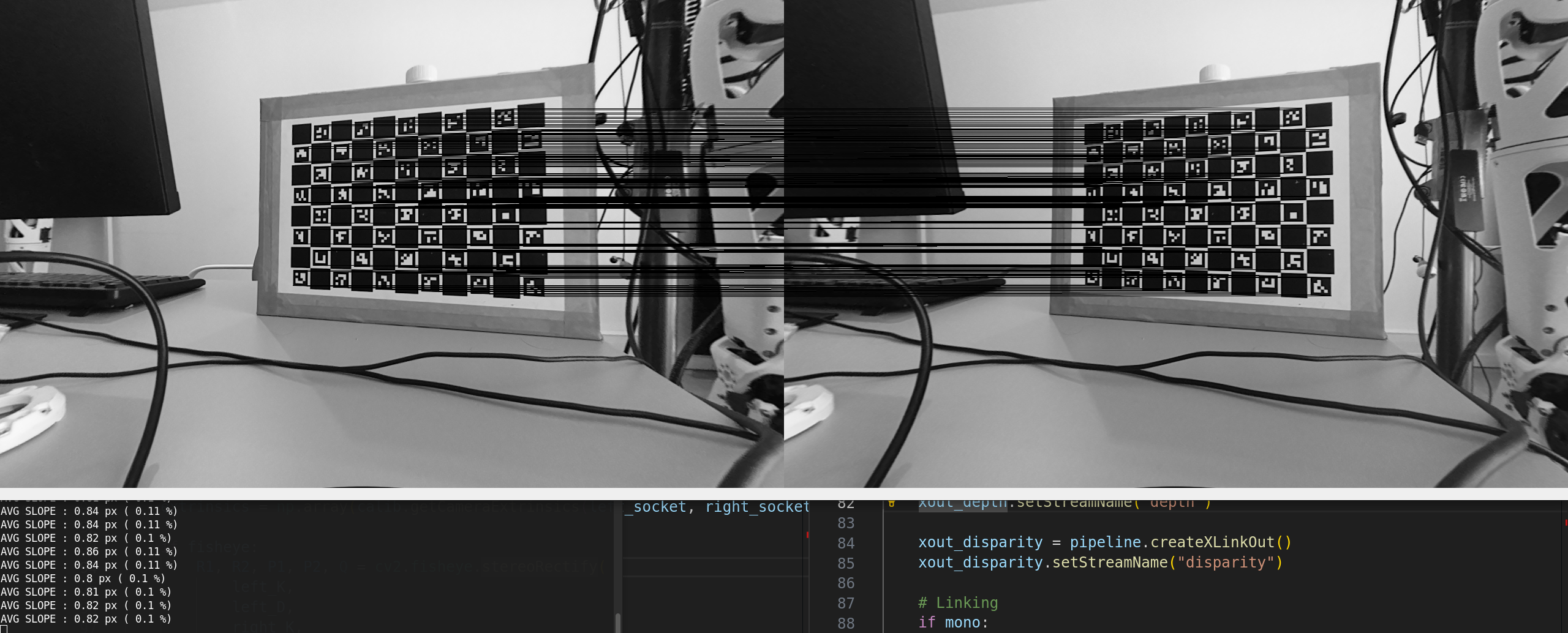

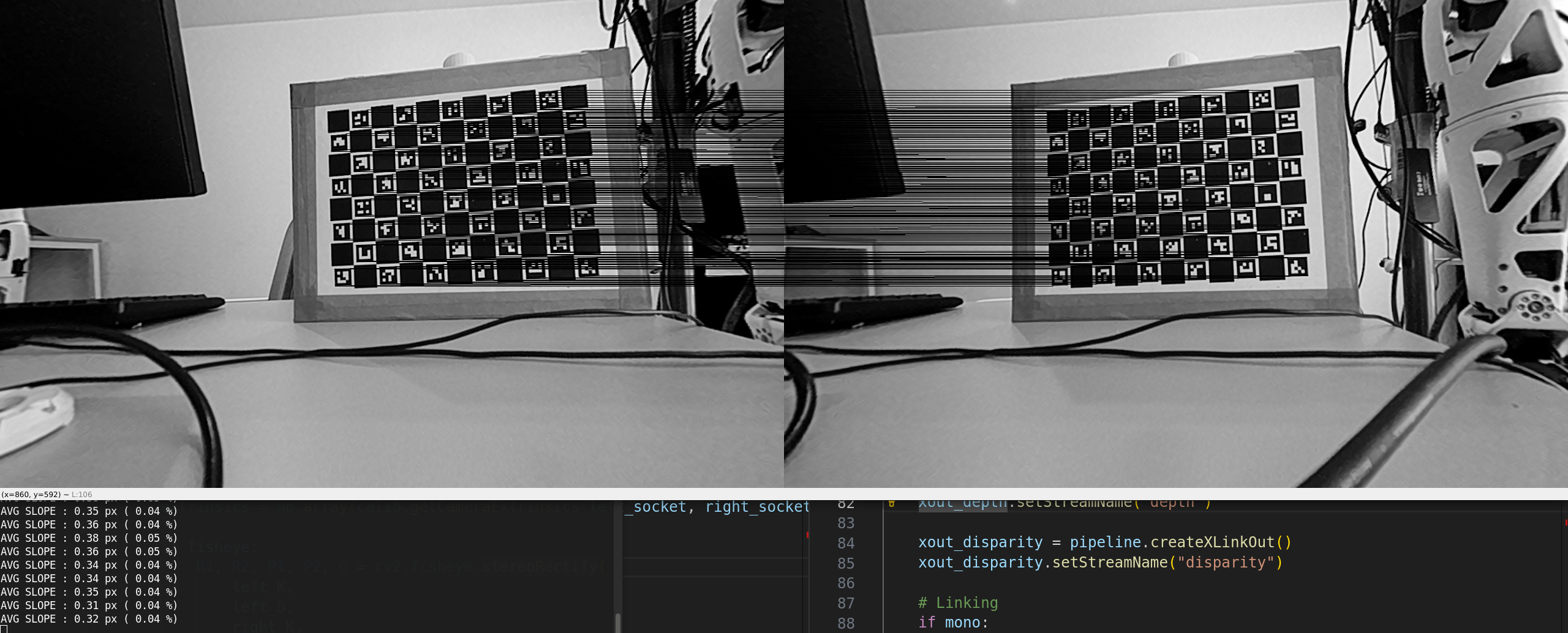

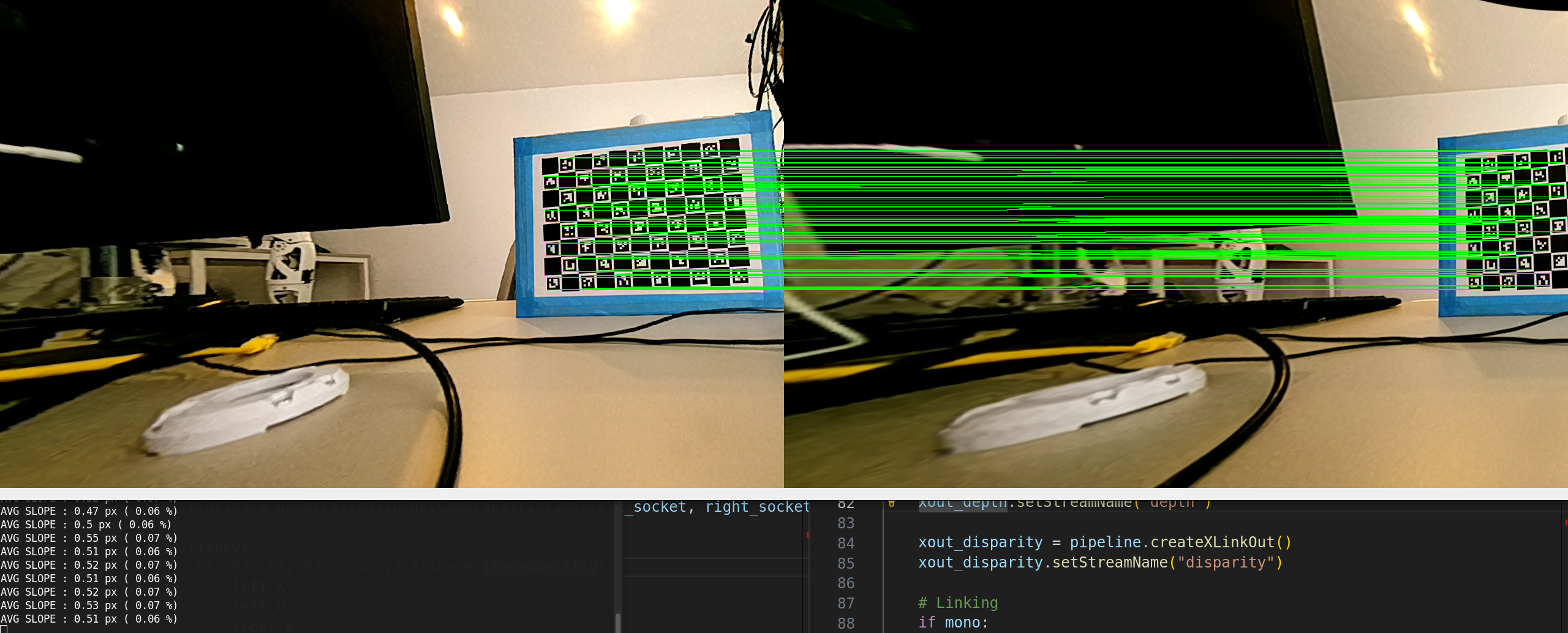

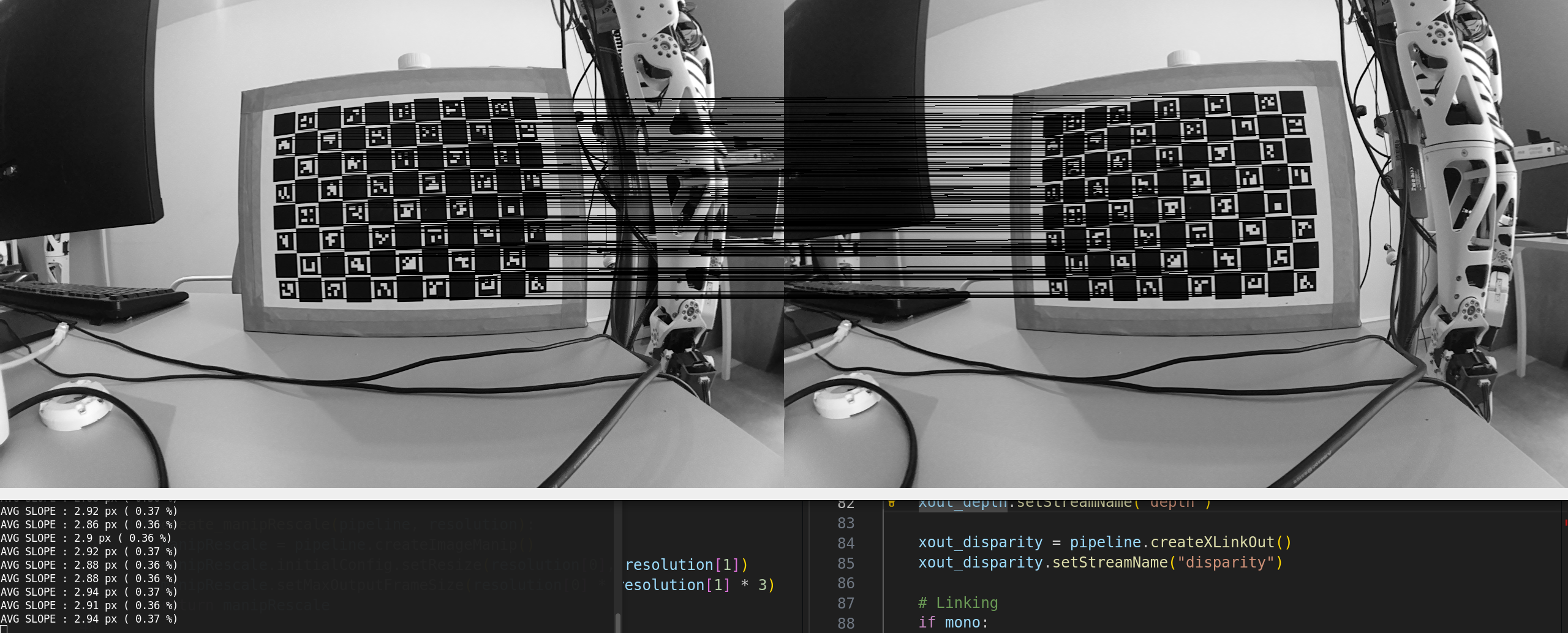

However, when checking the same things with the IMX296 color cameras with fisheye lenses, I get:

From stereoDepth node:

From get_mesh()

As you can see, both images are weirdly translated, and have large distortion, especially the image on the right.

The two setups have been calibrated with the same procedure, but they differ in two ways

The IMX296 sensors output a 1440x1080 image that I resize to (1280, 800) using an ImageManip node, while the OV9282 are natively (1280,800)

def create_manipRescale(pipeline, resolution):

manipRescale = pipeline.createImageManip()

manipRescale.initialConfig.setResize(resolution[0], resolution[1])

manipRescale.setMaxOutputFrameSize(resolution[0] * resolution[1] * 3)

return manipRescale

The lenses mounted on the IMX296 have a larger FOV than the OV9282W (below examples without undistort)

A few other questions while I am at it:

- Can https://docs.luxonis.com/projects/api/en/latest/samples/Camera/camera_undistort/#undistort-camera-stream be used to do what I want to do ? Is it mature or still experimental ? Right now I get black images with the IMX296 sensors when trying to get any output (video, preview, isp) from this node

- Would it be possible to directly get the meshes computed by the stereoDepth node ? It would be perfect, as I would just need to instantiate a StereoDepth node, get the mesh and set it as the warp mesh of my rectify ImageManip node.

Sorry, that's a lot, thanks for your time!

Antoine