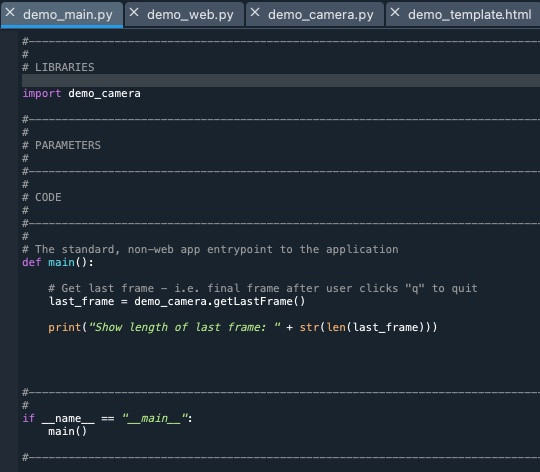

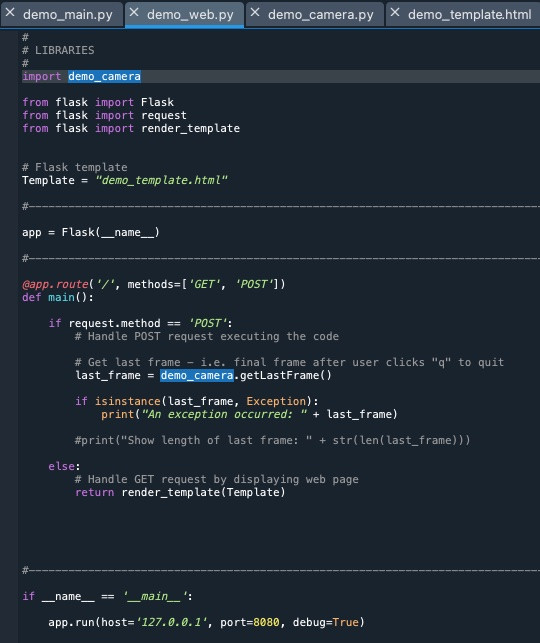

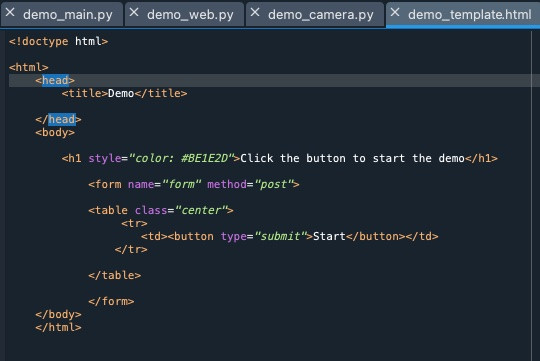

Apologies. Here are the 4 files. Oh, it says uploading files of this type is not allowed. I have tried renaming them as .txt, and also zipping them together, but neither works, so I am sending images, plus the demo_camera file in its entirety.

Thanks,

Christopher

See the code for demo_camera.py below:

```python
# LIBRARIES
#
import numpy as np
import cv2
import depthai
import blobconverter
#
#------------------------------------------------------------------------------
#
# PARAMETERS
#
#------------------------------------------------------------------------------
#
# CODE
#
#------------------------------------------------------------------------------
# Convert normalised bounding-box coordinates into pixel coordinates.
#
# Neural network inference outputs bounding-box coordinates as floats in the
# <0..1> range, so they must be scaled by the frame width/height
# (e.g. if the image is 200px wide and the NN returned an x_min of 0.2,
# the actual x_min pixel coordinate is 200 * 0.2 = 40px, i.e. a fifth of
# the width). x values (even indices) are scaled by the frame width,
# y values (odd indices) by the frame height.
def normaliseFrame(frame, bbox):
    # frame.shape is (height, width, channels): start with the height everywhere...
    normVals = np.full(len(bbox), frame.shape[0])
    # ...then overwrite the x entries with the width
    normVals[::2] = frame.shape[1]
    # Clip to <0..1>, scale to pixels and truncate to int
    return (np.clip(np.array(bbox), 0, 1) * normVals).astype(int)
```
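To check the scaling described in the comment above without a camera attached, here is a small standalone sketch (numpy only) that reuses the same normaliseFrame logic on a dummy frame. The 100px tall by 200px wide frame and the bbox values are made up for illustration; the ymax of 1.2 shows the clipping to 1.0.

```python
import numpy as np

def normaliseFrame(frame, bbox):
    # Same logic as in demo_camera.py: height for every entry,
    # then overwrite the even (x) entries with the width
    normVals = np.full(len(bbox), frame.shape[0])
    normVals[::2] = frame.shape[1]
    # Clip to <0..1>, scale to pixels and truncate to int
    return (np.clip(np.array(bbox), 0, 1) * normVals).astype(int)

# Dummy frame: 100px tall, 200px wide, 3 channels (shape is height, width, channels)
frame = np.zeros((100, 200, 3), dtype=np.uint8)

# Hypothetical normalised detection: (xmin, ymin, xmax, ymax)
bbox = normaliseFrame(frame, (0.2, 0.5, 0.75, 1.2))
print(bbox.tolist())  # [40, 50, 150, 100]
```

Note that the width and height come from the frame itself, so the same function works for the 300x300 preview in the script or for any other frame size.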

```python
#------------------------------------------------------------------------------
# Get the last frame from the camera after the user presses 'q'
#
def getLastFrame():
    try:
        # Create pipeline
        pipeline = depthai.Pipeline()

        # Assemble the pipeline and its components:
        # Create camera node
        cam_rgb = pipeline.create(depthai.node.ColorCamera)
        cam_rgb.setPreviewSize(300, 300)
        cam_rgb.setInterleaved(False)

        # Create neural network node
        detection_nn = pipeline.create(depthai.node.MobileNetDetectionNetwork)
        # Set the path of the blob (NN model). blobconverter converts and
        # downloads the model
        detection_nn.setBlobPath(blobconverter.from_zoo(name='mobilenet-ssd', shaves=6))
        # Set the confidence threshold to filter out incorrect results
        detection_nn.setConfidenceThreshold(0.5)
        # Connect the color camera preview output to the neural network input
        cam_rgb.preview.link(detection_nn.input)

        # Allow the host to receive camera output (frames) via XLink:
        # create an XLinkOut node
        xlink_out_cam = pipeline.create(depthai.node.XLinkOut)
        xlink_out_cam.setStreamName("rgb")
        cam_rgb.preview.link(xlink_out_cam.input)

        # Allow the host to receive neural network output (detections) via XLink:
        # create an XLinkOut node
        xlink_out_nn = pipeline.create(depthai.node.XLinkOut)
        xlink_out_nn.setStreamName("nn")
        detection_nn.out.link(xlink_out_nn.input)

        # Set up the camera
        last_frame = None
        with depthai.Device(pipeline) as device:
            # Display camera features
            print('Connected cameras:', device.getConnectedCameraFeatures())
            # Display the USB speed
            print('Usb speed:', device.getUsbSpeed().name)
            # Bootloader version
            if device.getBootloaderVersion() is not None:
                print('Bootloader version:', device.getBootloaderVersion())
            # Device name
            print('Device name:', device.getDeviceName())

            # Access camera and neural network output on the host side
            print("ACCESSING CAMERA AND NN OUTPUT")
            # Queue for receiving camera output over XLink
            que_cam_rgb = device.getOutputQueue("rgb")  # (name="rgb", maxSize=1, blocking=False)
            # Queue for receiving neural network output over XLink
            que_nn = device.getOutputQueue("nn")  # (name="nn", maxSize=1, blocking=False)

            # Create queue consumers
            frame = None
            detections = []

            # Continue looping until the user presses "q"
            loop = True
            iterations = 0
            while loop:
                # Fetch output from the camera node and the NN node from their
                # respective queues. tryGet() is non-blocking: it returns the
                # next message in the queue, or None if the queue is empty.
                # The camera sends ImgFrame messages and the NN sends
                # ImgDetections messages; both need transforming before display:
                # getCvFrame() turns the raw frame data into an OpenCV-ready
                # numpy array, and the normalised bounding boxes are converted
                # to pixels with normaliseFrame().

                # Receive a frame from the camera
                in_cam_rgb = que_cam_rgb.tryGet()
                if in_cam_rgb is not None:
                    frame = in_cam_rgb.getCvFrame()

                # MobileNetSSD output fields:
                # image_id, label, confidence, x_min, y_min, x_max, y_max
                # Receive the output from the neural network
                in_nn = que_nn.tryGet()
                if in_nn is not None:
                    # By accessing the detections array, we receive the detected
                    # objects, which expose label, confidence, xmin, ymin, xmax, ymax
                    detections = in_nn.detections

                # Display the results
                if frame is not None:
                    for detection in detections:
                        bbox = normaliseFrame(frame, (detection.xmin, detection.ymin, detection.xmax, detection.ymax))
                        cv2.rectangle(frame, (bbox[0], bbox[1]), (bbox[2], bbox[3]), (255, 0, 0), 2)
                    cv2.imshow("preview", frame)
                    last_frame = frame

                # Count the number of iterations
                iterations += 1
                print("Iteration = " + str(iterations))

                # Stop looping when the user presses "q"
                if cv2.waitKey(1) == ord("q"):
                    loop = False

        cv2.destroyAllWindows()
        return last_frame

    except Exception as e:
        # Report the error rather than returning the exception object,
        # which a caller could mistake for a frame
        print("getLastFrame failed:", e)
        return None

#------------------------------------------------------------------------------
```
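The comment in the main loop notes that the camera output needs transforming before it can be displayed. With setInterleaved(False) the preview data is planar (all of one colour channel, then the next), and getCvFrame() already returns an OpenCV-style (height, width, 3) array. The sketch below is only a manual illustration of that reshaping in plain numpy, assuming a 3-channel planar layout; the helper name planarToInterleaved and the tiny synthetic buffer are hypothetical.

```python
import numpy as np

def planarToInterleaved(flat, height, width):
    # Hypothetical helper: flat 1D planar buffer (channel 0, then 1, then 2)
    # -> (channels, height, width) -> (height, width, channels)
    return flat.reshape((3, height, width)).transpose(1, 2, 0)

height, width = 2, 4
# Synthetic planar buffer: channel 0 filled with 10, channel 1 with 20, channel 2 with 30
flat = np.concatenate([np.full(height * width, v, dtype=np.uint8) for v in (10, 20, 30)])

frame = planarToInterleaved(flat, height, width)
print(frame.shape)           # (2, 4, 3)
print(frame[0, 0].tolist())  # [10, 20, 30]
```

For the 300x300 preview in the script, the flat buffer would hold 3 * 300 * 300 = 270000 values. In practice getCvFrame() handles this, so the helper is just for understanding the data layout.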