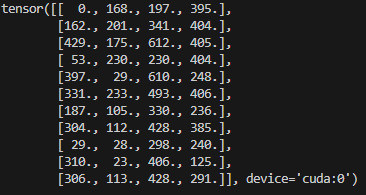

I have a bbox array on host...

Here is the code. How can I send the whole array of bounding boxes (top-left and bottom-right corners) to `config.roi = dai.Rect(topLeft, bottomRight)` at once? I mean sending all bounding boxes of an image to the queue in one go.

    #!/usr/bin/env python3

    import cv2
    import depthai as dai
    import numpy as np
    from ultralytics import YOLO

    # Load a model
    model = YOLO('yolov8s-pose.pt')  # load an official model

    stepSize = 0.05
    newConfig = False

    # Create pipeline
    pipeline = dai.Pipeline()

    # Define sources and outputs
    monoLeft = pipeline.create(dai.node.MonoCamera)
    monoRight = pipeline.create(dai.node.MonoCamera)
    stereo = pipeline.create(dai.node.StereoDepth)
    spatialLocationCalculator = pipeline.create(dai.node.SpatialLocationCalculator)

    xoutDepth = pipeline.create(dai.node.XLinkOut)
    xoutSpatialData = pipeline.create(dai.node.XLinkOut)
    xinSpatialCalcConfig = pipeline.create(dai.node.XLinkIn)

    xoutDepth.setStreamName("depth")
    xoutSpatialData.setStreamName("spatialData")
    xinSpatialCalcConfig.setStreamName("spatialCalcConfig")

    # Properties
    monoLeft.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)
    monoLeft.setCamera("left")
    monoRight.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)
    monoRight.setCamera("right")

    stereo.setDefaultProfilePreset(dai.node.StereoDepth.PresetMode.HIGH_DENSITY)
    stereo.setLeftRightCheck(True)
    stereo.setSubpixel(True)

    # Config
    topLeft = dai.Point2f(0.4, 0.4)
    bottomRight = dai.Point2f(0.6, 0.6)

    config = dai.SpatialLocationCalculatorConfigData()
    config.depthThresholds.lowerThreshold = 100
    config.depthThresholds.upperThreshold = 10000
    calculationAlgorithm = dai.SpatialLocationCalculatorAlgorithm.MEDIAN
    config.roi = dai.Rect(topLeft, bottomRight)

    spatialLocationCalculator.inputConfig.setWaitForMessage(False)
    spatialLocationCalculator.initialConfig.addROI(config)

    # Linking
    monoLeft.out.link(stereo.left)
    monoRight.out.link(stereo.right)

    spatialLocationCalculator.passthroughDepth.link(xoutDepth.input)
    stereo.depth.link(spatialLocationCalculator.inputDepth)

    spatialLocationCalculator.out.link(xoutSpatialData.input)
    xinSpatialCalcConfig.out.link(spatialLocationCalculator.inputConfig)

    # Connect to device and start pipeline
    with dai.Device(pipeline) as device:
        device.setIrLaserDotProjectorBrightness(765)  # in mA, 0..1200
        device.setIrFloodLightBrightness(1200)  # in mA, 0..1500

        # Output queue will be used to get the depth frames from the outputs defined above
        depthQueue = device.getOutputQueue(name="depth", maxSize=4, blocking=False)
        spatialCalcQueue = device.getOutputQueue(name="spatialData", maxSize=4, blocking=False)
        spatialCalcConfigInQueue = device.getInputQueue("spatialCalcConfig")

        color = (255, 255, 255)

        print("Use WASD keys to move ROI!")

        while True:
            inDepth = depthQueue.get()  # Blocking call, will wait until a new data has arrived

            depthFrame = inDepth.getFrame()  # depthFrame values are in millimeters

            depth_downscaled = depthFrame[::4]
            if np.all(depth_downscaled == 0):
                min_depth = 0  # Set a default minimum depth value when all elements are zero
            else:
                min_depth = np.percentile(depth_downscaled[depth_downscaled != 0], 1)
            max_depth = np.percentile(depth_downscaled, 99)
            depthFrameColor = np.interp(depthFrame, (min_depth, max_depth), (0, 255)).astype(np.uint8)
            depthFrameColor = cv2.applyColorMap(depthFrameColor, cv2.COLORMAP_HOT)

            results = model("E:/DEPTHAI_FREELANCE/OAKDEV/image.jpg")
            for result in results:
                keypoints = result.keypoints.xy
                boxes = result.boxes.xyxy
                img = result.orig_img
                print(boxes)

                #config.roi = dai.Rect(topLeft, bottomRight)
                #config.calculationAlgorithm = calculationAlgorithm
                #cfg = dai.SpatialLocationCalculatorConfig()
                #cfg.addROI(config)
                #spatialCalcConfigInQueue.send(cfg)

            spatialData = spatialCalcQueue.get().getSpatialLocations()
            for depthData in spatialData:
                roi = depthData.config.roi
                roi = roi.denormalize(width=depthFrameColor.shape[1], height=depthFrameColor.shape[0])
                xmin = int(roi.topLeft().x)
                ymin = int(roi.topLeft().y)
                xmax = int(roi.bottomRight().x)
                ymax = int(roi.bottomRight().y)

                depthMin = depthData.depthMin
                depthMax = depthData.depthMax

                fontType = cv2.FONT_HERSHEY_TRIPLEX
                cv2.rectangle(depthFrameColor, (xmin, ymin), (xmax, ymax), color, 1)
                cv2.putText(depthFrameColor, f"X: {int(depthData.spatialCoordinates.x)} mm", (xmin + 10, ymin + 20), fontType, 0.5, color)
                cv2.putText(depthFrameColor, f"Y: {int(depthData.spatialCoordinates.y)} mm", (xmin + 10, ymin + 35), fontType, 0.5, color)
                cv2.putText(depthFrameColor, f"Z: {int(depthData.spatialCoordinates.z)} mm", (xmin + 10, ymin + 50), fontType, 0.5, color)

Regards!
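
One possible approach, offered as a sketch rather than a confirmed answer: the commented-out lines in the loop above already build a `dai.SpatialLocationCalculatorConfig` and call `addROI` once. Calling `addROI` once per bounding box on the same message, and then sending that single message, should deliver all boxes together. The helper names `normalize_boxes` and `build_roi_config` below are hypothetical, as are the default thresholds (copied from the code above). The sketch also assumes that the boxes are in pixel coordinates of an image covering the same field of view as the depth frame, which is not guaranteed when the image is loaded from disk as it is here.

```python
from typing import Iterable, List, Sequence, Tuple

NormBox = Tuple[float, float, float, float]


def _clamp01(value: float) -> float:
    """Clamp a value into the 0..1 range used for normalized ROIs."""
    return min(max(value, 0.0), 1.0)


def normalize_boxes(boxes_xyxy: Iterable[Sequence[float]],
                    img_w: int, img_h: int) -> List[NormBox]:
    """Convert pixel (x1, y1, x2, y2) boxes to normalized 0..1 coordinates.

    Boxes are clamped to the image, and boxes with no area are dropped.
    """
    normalized = []
    for x1, y1, x2, y2 in boxes_xyxy:
        nx1 = _clamp01(float(x1) / img_w)
        ny1 = _clamp01(float(y1) / img_h)
        nx2 = _clamp01(float(x2) / img_w)
        ny2 = _clamp01(float(y2) / img_h)
        if nx2 > nx1 and ny2 > ny1:
            normalized.append((nx1, ny1, nx2, ny2))
    return normalized


def build_roi_config(norm_boxes: Iterable[NormBox],
                     lower_mm: int = 100, upper_mm: int = 10000):
    """Pack every box into one SpatialLocationCalculatorConfig message.

    Hypothetical helper: it only uses the depthai calls that already
    appear in the script above (ConfigData, Rect, Point2f, addROI).
    """
    # Imported here so normalize_boxes can be used without depthai installed.
    import depthai as dai

    cfg = dai.SpatialLocationCalculatorConfig()
    for x1, y1, x2, y2 in norm_boxes:
        roi_cfg = dai.SpatialLocationCalculatorConfigData()
        roi_cfg.depthThresholds.lowerThreshold = lower_mm
        roi_cfg.depthThresholds.upperThreshold = upper_mm
        roi_cfg.calculationAlgorithm = dai.SpatialLocationCalculatorAlgorithm.MEDIAN
        roi_cfg.roi = dai.Rect(dai.Point2f(x1, y1), dai.Point2f(x2, y2))
        cfg.addROI(roi_cfg)  # one ROI per bounding box, all in the same message
    return cfg
```

Inside the `for result in results:` loop this might be used as `h, w = result.orig_img.shape[:2]`, then `cfg = build_roi_config(normalize_boxes(result.boxes.xyxy.tolist(), w, h))`, followed by `spatialCalcConfigInQueue.send(cfg)`. The existing `for depthData in spatialData:` loop should then yield one entry per ROI. Whether the device limits the number of ROIs per message is something I have not checked.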