Hey @jakaskerl, thank you for your help.

Let me give you some more context:

- I need two (ideally 1080p) RGB images because I am trying to use the camera for VR teleoperation. Concatenating the two images should make synchronization easier: I encode a single stream containing both images, send it over the network, and split it on the host side (that's the idea, anyway, assuming the images are hardware-synchronized).

- I am currently using an OAK-FFC-4P with two IMX378 modules (we may want to add more in the future).
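Since the plan is to split the side-by-side frame again on the host, here is a minimal sketch of that step with NumPy. It assumes the decoded frame is an (H, 2*W, 3) array with the left image in the left half, which is what concatenating along the width axis should produce. `split_side_by_side` is just a hypothetical helper name, not part of DepthAI.

```python
from typing import Tuple

import numpy as np


def split_side_by_side(frame: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Split an (H, 2*W, C) side-by-side frame into left and right (H, W, C) views.

    Assumes (hypothetically) that the left image occupies the left half.
    """
    double_width = frame.shape[1]
    if double_width % 2:
        raise ValueError("expected an even total width")
    half = double_width // 2
    # Slicing returns views, so no pixel data is copied here
    return frame[:, :half], frame[:, half:]
```

Because the halves are views, they stay cheap even at 1080p; call `.copy()` on them only if they need to outlive or be modified independently of the source frame.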

Using `preview` instead of `isp`, and adding a few things from the script you sent last, I still get an out-of-memory error:

```
Traceback (most recent call last):
  File "<...>/test_concat_nn.py", line 41, in <module>
    with dai.Device(pipeline) as device:
RuntimeError: NeuralNetwork(2) - Out of memory while creating pool for resulting tensor. Number of frames: 8 each with size: 49766400B
```
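For reference, here is the arithmetic behind the numbers in that error, assuming 2 bytes per FP16 element. The reported 49,766,400 B per frame is exactly twice the FP16 size of a 3x1080x3840 output, and it equals the FP16 size of the output implied by the dummy export shape `(1, 3, 1920*2, 1080)` concatenated on dim 3. It also equals a 3x1080x3840 tensor at 4 bytes per element (FP32), so this arithmetic alone doesn't pin down which one is happening.

```python
import math

BYTES_FP16 = 2
channels, height, width = 3, 1080, 1920

# Output I expect: left and right previews concatenated along the width axis
expected_bytes = channels * height * (2 * width) * BYTES_FP16

# Output implied by the dummy export input (1, 3, 1920*2, 1080) concatenated on dim 3
export_out_shape = (1, 3, 1920 * 2, 1080 * 2)
export_bytes = math.prod(export_out_shape) * BYTES_FP16

reported_bytes = 49766400  # "each with size" from the error
pool_frames = 8            # "Number of frames" from the error

print(expected_bytes, export_bytes, reported_bytes == export_bytes)
print(pool_frames * reported_bytes / 2**20, "MiB requested for the pool")
```

This prints `24883200 49766400 True`, then `379.6875 MiB requested for the pool`.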

Here is the current state of the script:

```python
import cv2
import depthai as dai
import numpy as np

pipeline = dai.Pipeline()

# Left camera: 1080p, planar BGR preview, mounted upside down
camLeft = pipeline.create(dai.node.ColorCamera)
camLeft.setBoardSocket(dai.CameraBoardSocket.CAM_A)
camLeft.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)
camLeft.setFps(30)
camLeft.setPreviewSize(1920, 1080)
camLeft.setInterleaved(False)
camLeft.setImageOrientation(dai.CameraImageOrientation.ROTATE_180_DEG)
camLeft.setColorOrder(dai.ColorCameraProperties.ColorOrder.BGR)

# Right camera: same settings on socket CAM_D
camRight = pipeline.create(dai.node.ColorCamera)
camRight.setBoardSocket(dai.CameraBoardSocket.CAM_D)
camRight.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)
camRight.setFps(30)
camRight.setPreviewSize(1920, 1080)
camRight.setInterleaved(False)
camRight.setImageOrientation(dai.CameraImageOrientation.ROTATE_180_DEG)
camRight.setColorOrder(dai.ColorCameraProperties.ColorOrder.BGR)

# Concatenation NN
nn = pipeline.create(dai.node.NeuralNetwork)
nn.setBlobPath("../utils/generate_models/models/concat_openvino_2022.1_6shave.blob")
nn.setNumInferenceThreads(2)
camLeft.preview.link(nn.inputs['left'])
camRight.preview.link(nn.inputs['right'])

nn_xout = pipeline.create(dai.node.XLinkOut)
nn_xout.setStreamName("concat")
nn.out.link(nn_xout.input)

# Pipeline is defined, now we can connect to the device
with dai.Device(pipeline) as device:
    qNn = device.getOutputQueue(name="concat", maxSize=4, blocking=False)
    # Expected NN output layout: planar (C, H, 2*W)
    shape = (3, 1080, 1920 * 2)

    while True:
        inNn = np.array(qNn.get().getData())
        print(inNn.shape)
        # Reinterpret the raw bytes as FP16, then convert planar CHW to interleaved HWC uint8
        frame = inNn.view(np.float16).reshape(shape).transpose(1, 2, 0).astype(np.uint8).copy()
        cv2.imshow("Concat", frame)

        if cv2.waitKey(1) == ord('q'):
            break
```

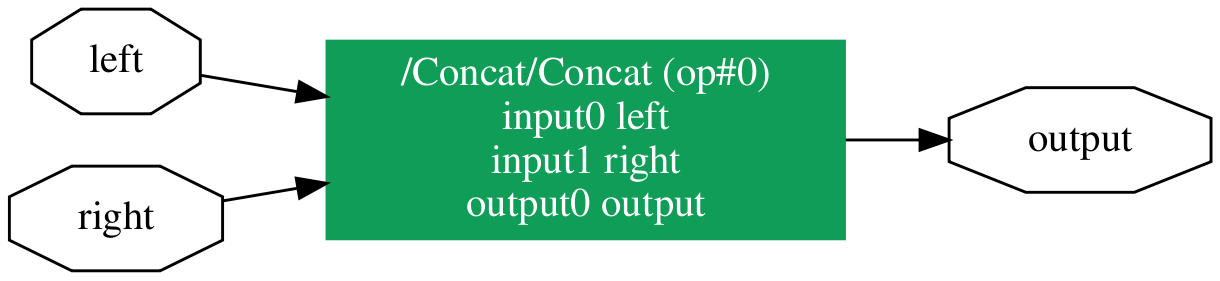
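For the host-side decoding, here is a minimal sketch of the same conversion as in the loop above, factored into a function so it can be checked without a device. It assumes the NN output buffer is raw planar FP16 data, as the script above already assumes. The one deliberate difference is the clip to [0, 255] before casting, since casting out-of-range floats to `uint8` is not well defined. `planar_fp16_to_hwc_uint8` is a hypothetical name.

```python
import numpy as np


def planar_fp16_to_hwc_uint8(raw, height: int, width: int) -> np.ndarray:
    """Convert a planar (3, H, W) FP16 buffer into an interleaved (H, W, 3) uint8 image."""
    # Reinterpret the raw bytes (bytes or a uint8 array) as FP16 values
    planar = np.frombuffer(raw, dtype=np.float16).reshape(3, height, width)
    # Clip first so out-of-range values saturate instead of wrapping
    hwc = np.clip(planar, 0, 255).transpose(1, 2, 0).astype(np.uint8)
    # OpenCV prefers a C-contiguous buffer after the transpose
    return np.ascontiguousarray(hwc)
```

In the loop, this would be used as `frame = planar_fp16_to_hwc_uint8(qNn.get().getData(), 1080, 1920 * 2)`, again assuming `getData()` yields the raw FP16 bytes.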

Maybe the issue comes from the way I built the NN? This is the script I used:

```python
#!/usr/bin/env python3

from pathlib import Path

import blobconverter
import torch
from torch import nn


class CatImgs(nn.Module):
    def forward(self, left, right):
        # Concatenate along dim 3 (the last axis, i.e. width in NCHW)
        return torch.cat((left, right), 3)


# Define the expected input shape (dummy input), in NCHW order: (batch, channels, height, width)
shape = (1, 3, 1920*2, 1080)
X = torch.ones(shape, dtype=torch.float32)

path = Path("out/")
path.mkdir(parents=True, exist_ok=True)
onnx_file = "out/concat.onnx"
print(f"Writing to {onnx_file}")

torch.onnx.export(
    CatImgs(),
    (X, X),
    onnx_file,
    opset_version=12,
    do_constant_folding=True,
    input_names=["left", "right"],  # Optional
    output_names=["output"],  # Optional
)

# No need for onnx-simplifier here
# Use blobconverter to convert onnx->IR->blob
blobconverter.from_onnx(
    model=onnx_file,
    data_type="FP16",
    shaves=6,
    use_cache=False,
    output_dir="./models",
    optimizer_params=[],
)
```

I get the following graph:

Thank you very much