Here is the full script:

#!/usr/bin/env python3

import depthai as dai

import subprocess as sp

from os import name as osName

import argparse

import sys

import pickle

parser = argparse.ArgumentParser()

parser.add_argument(

"-u",

"--unwrap",

default=False,

action="store_true",

help="Activate imagemanip unwrapping",

)

parser.add_argument(

"-v",

"--verbose",

default=False,

action="store_true",

help="Prints latency for the encoded frame data to reach the app",

)

args = parser.parse_args()

# Create pipeline

pipeline = dai.Pipeline()

# Define sources and output

camRgb = pipeline.create(dai.node.ColorCamera)

videoEnc = pipeline.create(dai.node.VideoEncoder)

xout = pipeline.create(dai.node.XLinkOut)

xout.setStreamName("enc")

# Properties

camRgb.setBoardSocket(dai.CameraBoardSocket.RGB)

camRgb.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)

camRgb.setFps(30)

camRgb.setImageOrientation(dai.CameraImageOrientation.ROTATE_180_DEG)

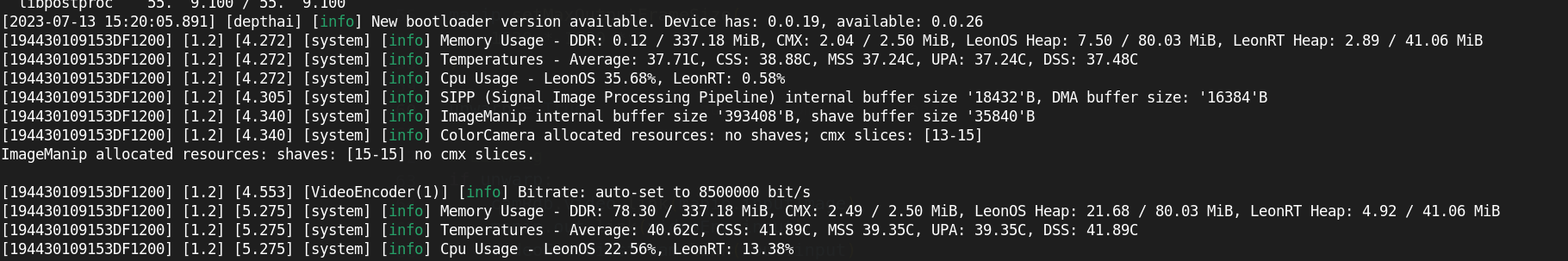

videoEnc.setDefaultProfilePreset(

camRgb.getFps(), dai.VideoEncoderProperties.Profile.H264_MAIN

)

# Unwarping imagemanip

manip = pipeline.createImageManip()

mesh, meshWidth, meshHeight = pickle.load(open("mesh.pckl", "rb"))

manip.setWarpMesh(mesh, meshWidth, meshHeight)

manip.setMaxOutputFrameSize(1920 * 1080 * 3)

# Linking

if args.unwrap:

camRgb.video.link(manip.inputImage)

manip.out.link(videoEnc.input)

videoEnc.bitstream.link(xout.input)

else:

camRgb.video.link(videoEnc.input)

videoEnc.bitstream.link(xout.input)

width, height = 720, 500

command = [

"ffplay",

"-i",

"-",

"-x",

str(width),

"-y",

str(height),

"-framerate",

"60",

"-fflags",

"nobuffer",

"-flags",

"low_delay",

"-framedrop",

"-strict",

"experimental",

]

if osName == "nt": # Running on Windows

command = ["cmd", "/c"] + command

try:

proc = sp.Popen(command, stdin=sp.PIPE) # Start the ffplay process

except:

exit("Error: cannot run ffplay!\nTry running: sudo apt install ffmpeg")

# Connect to device and start pipeline

with dai.Device(pipeline) as device:

# Output queue will be used to get the encoded data from the output defined above

q = device.getOutputQueue(name="enc", maxSize=30, blocking=True)

try:

while True:

pkt = q.get() # Blocking call, will wait until new data has arrived

data = pkt.getData()

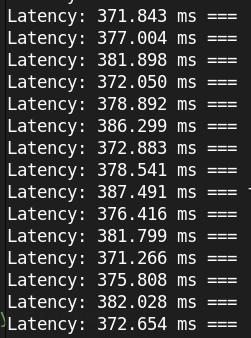

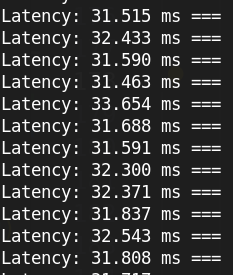

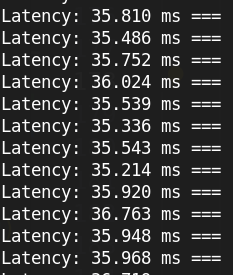

if args.verbose:

latms = (dai.Clock.now() - pkt.getTimestamp()).total_seconds() * 1000

# Writing to a different channel (stderr)

# Also `ffplay` is printing things, adding a separator

print(f"Latency: {latms:.3f} ms === ", file=sys.stderr)

proc.stdin.write(data)

except:

pass

proc.stdin.close()
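The `--verbose` latency figure is plain `timedelta` arithmetic: as far as I know, in DepthAI's Python API both `dai.Clock.now()` and `pkt.getTimestamp()` return `datetime.timedelta` values on the same host clock, so their difference is the time from frame capture to arrival in the app. A minimal sketch of that calculation, with plain timedeltas standing in for the DepthAI calls (`latency_ms` is a hypothetical helper name):

```python
from datetime import timedelta


def latency_ms(now: timedelta, frame_ts: timedelta) -> float:
    """Milliseconds between a frame's timestamp and 'now'.

    In the script, `now` would be dai.Clock.now() and `frame_ts` would be
    pkt.getTimestamp(); here both are ordinary timedeltas.
    """
    return (now - frame_ts).total_seconds() * 1000


if __name__ == "__main__":
    # Hypothetical values: frame stamped at 10.000 s, received 42.5 ms later
    lat = latency_ms(timedelta(seconds=10, microseconds=42500), timedelta(seconds=10))
    print(f"Latency: {lat:.3f} ms")
```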

You will also need the `mesh.pckl` file.
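If you don't have the original `mesh.pckl`, a placeholder can be generated to at least exercise the `--unwrap` path. This is a minimal sketch that assumes the file is a pickled `(mesh, meshWidth, meshHeight)` tuple (inferred from the `pickle.load` unpacking in the script), that `mesh` is a row-major list of `(x, y)` input-image coordinates, and that `setWarpMesh` accepts such tuples as well as `dai.Point2f`. The helper `make_identity_mesh` and the 16 x 9 grid size are hypothetical; the resulting mesh spreads points evenly over the frame and applies no real lens correction.

```python
import pickle


def make_identity_mesh(img_width, img_height, mesh_width, mesh_height):
    """Hypothetical helper: row-major grid of (x, y) input-image coordinates
    spread evenly over the frame, i.e. a mesh that applies no correction."""
    mesh = []
    for row in range(mesh_height):
        y = row * (img_height - 1) / (mesh_height - 1)
        for col in range(mesh_width):
            x = col * (img_width - 1) / (mesh_width - 1)
            mesh.append((x, y))
    return mesh


if __name__ == "__main__":
    mesh_w, mesh_h = 16, 9  # assumed grid density, not taken from the post
    mesh = make_identity_mesh(1920, 1080, mesh_w, mesh_h)
    # Same tuple layout the script unpacks: mesh, meshWidth, meshHeight
    with open("mesh.pckl", "wb") as f:
        pickle.dump((mesh, mesh_w, mesh_h), f)
```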

Thanks!