Hi erik,

OK. But using "depthai/depthai_demo.py", I can confirm that 20 cm or 30 cm works perfectly. The problem is when I try to write my own script or use an example from "depthai-python/examples/".

Can you help me?

Hi LeonelSimo

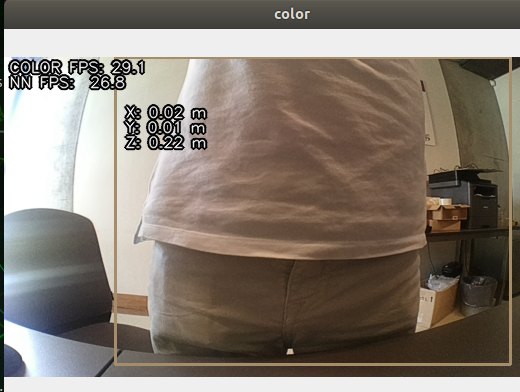

The best way to check the accuracy is to look at the depth/disparity map. If it looks random at the object's position, the device cannot correctly infer depth from the two stereo cameras (meaning the XYZ values will be wrong), because the object is in the "blind spot" of one of the two cameras.

Only if the depth map looks reasonably homogeneous at the object's position will you be able to infer depth correctly.
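A minimal host-side sketch of that "is the depth homogeneous at the object's position?" check, assuming the depth frame is available as a NumPy `uint16` array in millimetres with 0 marking invalid pixels, and that the object's bounding box is given in pixel coordinates of that frame. The function name and both thresholds are hypothetical illustrations, not Luxonis-recommended values.

```python
import numpy as np

def roi_depth_is_consistent(depth_mm, bbox, min_valid_ratio=0.6, max_rel_spread=0.15):
    """Heuristic check of whether the depth inside a bounding box looks homogeneous.

    depth_mm: 2D uint16 array of depth in millimetres (0 = invalid).
    bbox:     (xmin, ymin, xmax, ymax) in pixel coordinates of the depth frame.
    Thresholds are illustrative guesses (hypothetical), tune them on your scenes.
    """
    xmin, ymin, xmax, ymax = bbox
    roi = depth_mm[ymin:ymax, xmin:xmax]
    valid = roi[roi > 0].astype(np.float64)
    if roi.size == 0 or valid.size == 0:
        return False
    # Share of pixels in the box for which the stereo matcher produced a depth
    valid_ratio = valid.size / roi.size
    median = np.median(valid)
    # Interquartile range relative to the median: a robust measure of depth spread
    q25, q75 = np.percentile(valid, [25, 75])
    rel_spread = (q75 - q25) / median
    return bool(valid_ratio >= min_valid_ratio and rel_spread <= max_rel_spread)
```

A flat surface gives a high valid ratio and a near-zero spread; a "random-looking" patch fails one or both tests.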

Thanks,

Jaka

The big question is: when I use "depthai_demo.py", it works perfectly (the Z value is correct), but when I try an example from depthai-python/examples/, the Z value has errors, especially at the closest distances.

Why? Can you provide a script example?

Hi LeonelSimo

It might be because you are using the depth-align example. Does the unaltered https://github.com/luxonis/depthai-experiments/blob/master/gen2-calc-spatials-on-host/main.py work as expected? What about https://docs.luxonis.com/projects/api/en/latest/samples/SpatialDetection/spatial_location_calculator/?

Thanks,

Jaka

Hi jakaskerl

These examples work very well. However, when I change the resolution from THE_400_P to THE_720_P or THE_800_P, the results are extremely bad.
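One plausible contributor to the close-range errors at higher resolutions is the stereo minimum-depth limit. With the pinhole relation min_depth = focal_length_px * baseline / max_disparity, the focal length in pixels grows with the frame width, so moving from 400P to 800P roughly doubles the closest measurable distance for the same disparity search range. The sketch below only does that arithmetic; the HFOV, baseline and disparity ranges are assumptions (95 px default search range, 190 px with extended disparity), so check them against your device's documentation.

```python
import math

def min_depth_cm(width_px, hfov_deg, baseline_cm, max_disparity_px):
    """Approximate minimum measurable depth for a stereo pair (pinhole model).

    focal_px  = (width_px / 2) / tan(hfov / 2)
    min_depth = focal_px * baseline / max_disparity
    """
    focal_px = (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)
    return focal_px * baseline_cm / max_disparity_px

# Assumed values: ~72 deg mono HFOV, 7.5 cm baseline,
# 95 px default disparity range (190 px with extended disparity).
for name, width in [("THE_400_P", 640), ("THE_800_P", 1280)]:
    print(name,
          "default:", round(min_depth_cm(width, 72.0, 7.5, 95), 1), "cm",
          "| extended:", round(min_depth_cm(width, 72.0, 7.5, 190), 1), "cm")
```

Under these assumptions, objects at 20 to 30 cm fall below the default-range limit at 800P, which would match the symptom, but this is a hypothesis rather than a confirmed diagnosis.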

In the end, I want to align the depth with the color frame. I intend to use the maximum FOV, run NN inference, and get the XYZ. Maybe THE_400_P could work, but how can I decrease the color resolution to 400P, since the OV9782 color sensor only supports THE_800_P and THE_720_P?
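For the final goal (depth aligned to the color frame, NN bounding box, XYZ), a host-side sketch of the last step could back-project the box centre with the pinhole model X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy. It assumes a depth frame already aligned to the color frame (uint16, mm, 0 = invalid) and known color intrinsics at that resolution, which can presumably be read from the device calibration (e.g. the calibration handler's `getCameraIntrinsics()`; check the API docs for your depthai version). The function name is hypothetical, and the sign convention here (image y pointing down) may differ from DepthAI's own spatial outputs.

```python
import numpy as np

def bbox_to_xyz_mm(depth_mm, bbox, fx, fy, cx, cy):
    """Back-project the centre of a detection box to camera-frame XYZ (mm).

    depth_mm: depth frame aligned to the colour frame (uint16, mm, 0 = invalid)
    bbox:     (xmin, ymin, xmax, ymax) in pixels of that frame
    fx, fy, cx, cy: colour-camera intrinsics at the frame's resolution (assumed known)
    Returns None if the box contains no valid depth.
    """
    xmin, ymin, xmax, ymax = bbox
    roi = depth_mm[ymin:ymax, xmin:xmax]
    valid = roi[roi > 0]
    if valid.size == 0:
        return None
    z = float(np.median(valid))   # median is robust to outlier pixels in the box
    u = (xmin + xmax) / 2.0       # box centre in pixel coordinates
    v = (ymin + ymax) / 2.0
    x = (u - cx) * z / fx         # pinhole model: X = (u - cx) * Z / fx
    y = (v - cy) * z / fy         # image y axis points down
    return x, y, z
```

The on-device SpatialLocationCalculator shown in the linked example does a similar ROI-based computation; this host version is only an alternative for experimenting.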

Thanks

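Hi LeonelSimo,

You can use ISP scaling:

```python
import depthai as dai
pipeline = dai.Pipeline()
camRgb = pipeline.create(dai.node.ColorCamera)
camRgb.setBoardSocket(dai.CameraBoardSocket.CAM_A)
camRgb.setResolution(dai.ColorCameraProperties.SensorResolution.THE_800_P)
camRgb.setIspScale(1, 2)  # 800P (1280x800) -> 400P (640x400)
```

Hi LeonelSimo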

I don't think so. But you should be able to do it on the host side by looking at how noisy the disparity frame is.

Thoughts?

Jaka

Hi jakaskerl,

Yes, the idea is to do it on the host. How do we check the noisiness of the disparity frame?

LeonelSimo You can visualize the (colorized) disparity frame, example here: https://docs.luxonis.com/projects/api/en/latest/samples/StereoDepth/depth_preview/#depth-preview

Hi erik

I have already seen that example and tested it. The question is: how do we analyze the disparity frame in Python code and decide that there is a lot of noise? Is there example code?

Thanks
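A minimal sketch of one way to quantify disparity noise on the host, assuming the disparity frame is a NumPy array where 0 means "no valid match" and that you know the maximum disparity value (the depth_preview example obtains it via `initialConfig.getMaxDisparity()` on the StereoDepth node). It uses two simple indicators: the share of invalid pixels, and the mean absolute difference between neighbouring valid pixels (noisy maps jump a lot from pixel to pixel). The function names and thresholds are hypothetical and need tuning on your own scenes.

```python
import numpy as np

def disparity_noise_stats(disp, max_disparity):
    """Simple noise indicators for a disparity frame.

    disp:          2D array of disparity values (0 = invalid / no match)
    max_disparity: maximum disparity value the frame can contain
    Returns (invalid_ratio, roughness); roughness is the mean absolute
    difference between neighbouring valid pixels, normalised by max_disparity.
    """
    d = disp.astype(np.float64)
    valid = d > 0
    invalid_ratio = 1.0 - valid.mean()
    # Horizontal and vertical neighbour differences, only where both pixels are valid
    dx = np.abs(np.diff(d, axis=1))[valid[:, 1:] & valid[:, :-1]]
    dy = np.abs(np.diff(d, axis=0))[valid[1:, :] & valid[:-1, :]]
    diffs = np.concatenate([dx, dy])
    # With no valid neighbour pairs at all, report maximal roughness
    roughness = diffs.mean() / max_disparity if diffs.size else 1.0
    return float(invalid_ratio), float(roughness)

def looks_noisy(disp, max_disparity, max_invalid=0.3, max_roughness=0.05):
    # Hypothetical thresholds: tune them on your own scenes
    invalid_ratio, roughness = disparity_noise_stats(disp, max_disparity)
    return invalid_ratio > max_invalid or roughness > max_roughness
```

Running the same statistics on a crop around a detection, instead of the whole frame, tells you whether that particular object's depth can be trusted.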

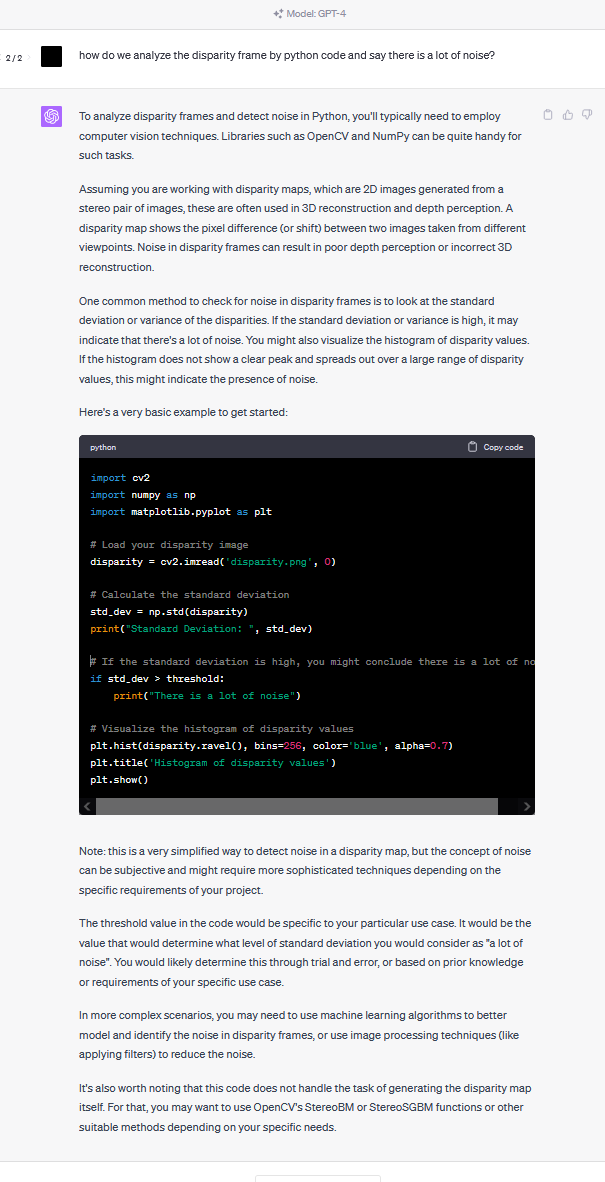

LeonelSimo Such generic (non-Luxonis-specific) questions can be put to ChatGPT, which will provide a more accurate and faster response: