Hi,

We are working with an OAK-FFC-4P, running two stereo pairs at 400P. After several rounds of trial and error, we found that certain stereo settings (`BilateralFilterSigma` and `DecimationMode`) can cause high latency in the pipeline.

We know that a node may block the pipeline. Have we reached a hardware limit? Is there any way to avoid this problem?
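For readers comparing the two decimation modes named above, the NumPy sketch below contrasts them on the host. It illustrates the general idea only and is not the on-device implementation: the function names are hypothetical, and taking the top-left pixel of each block for pixel skipping is an assumption. Pixel skipping copies one value per block, while the non-zero median has to gather and partition every valid pixel in the block, so in this sketch it does strictly more work per output pixel.

```python
import numpy as np


def decimate_pixel_skipping(depth: np.ndarray, factor: int) -> np.ndarray:
    """Keep one pixel per factor x factor block (the top-left one, by assumption)."""
    h = depth.shape[0] - depth.shape[0] % factor  # crop rows that do not fill a block
    w = depth.shape[1] - depth.shape[1] % factor  # crop columns that do not fill a block
    return depth[:h:factor, :w:factor]


def decimate_non_zero_median(depth: np.ndarray, factor: int) -> np.ndarray:
    """Median of the non-zero (valid) pixels in each block; 0 if the block has none."""
    h = depth.shape[0] - depth.shape[0] % factor
    w = depth.shape[1] - depth.shape[1] % factor
    # Regroup the cropped image into (block_rows, block_cols, factor * factor).
    blocks = (
        depth[:h, :w]
        .reshape(h // factor, factor, w // factor, factor)
        .transpose(0, 2, 1, 3)
        .reshape(h // factor, w // factor, factor * factor)
    )
    out = np.zeros(blocks.shape[:2], dtype=depth.dtype)
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            valid = blocks[i, j][blocks[i, j] > 0]  # ignore holes (depth == 0)
            if valid.size:
                out[i, j] = int(np.median(valid))
    return out


if __name__ == "__main__":
    depth = np.array([[0, 2, 4, 4],
                      [0, 6, 4, 8]], dtype=np.uint16)
    print(decimate_pixel_skipping(depth, 2))   # [[0 4]]  the hole at (0, 0) survives
    print(decimate_non_zero_median(depth, 2))  # [[4 4]]  the hole is filled from its block
```

With `decimationFactor = 3` on a 640x400 frame, both functions return a 213x133 array here because the sketch crops the leftover row and column; how the device treats a remainder is not covered by this sketch.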

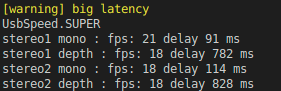

High latency

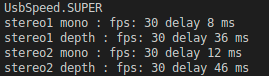

Without the `setBilateralFilterSigma` and `DecimationMode.NON_ZERO_MEDIAN` settings:

Our code creates two stereo pairs and, for each pair, adds a mono callback and a depth callback that measure latency.
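The latency measurement described above is the host clock minus the frame's timestamp. The script subtracts `dai.Clock.now()` and `getTimestamp()` and calls `.total_seconds()` on the result, so the dependency-free sketch below models both with `datetime.timedelta`. It also shows one possible hand-off from a callback to the main loop: a `maxsize=1` queue where a hypothetical `put_latest` helper drops the stale sample, so the main loop always reads the newest latency instead of working through a backlog in an unbounded `queue.Queue()`. Both helper names are illustrative and are not DepthAI API.

```python
import queue
from datetime import timedelta


def latency_ms(host_now: timedelta, frame_timestamp: timedelta) -> int:
    """Latency in whole milliseconds: the same arithmetic the script's callbacks use."""
    return round((host_now - frame_timestamp).total_seconds() * 1e3)


def put_latest(q: queue.Queue, item) -> None:
    """Hypothetical helper: put item into a bounded queue, discarding the oldest item when full."""
    while True:
        try:
            q.put_nowait(item)
            return
        except queue.Full:
            try:
                q.get_nowait()  # drop the stale sample
            except queue.Empty:
                pass  # the consumer emptied the queue in the meantime; retry the put


if __name__ == "__main__":
    latest = queue.Queue(maxsize=1)
    host_now = timedelta(milliseconds=1000)  # stand-in for dai.Clock.now()
    for ts_ms in (700, 800, 900):  # stand-ins for frame.getTimestamp()
        put_latest(latest, {"delay_ms": latency_ms(host_now, timedelta(milliseconds=ts_ms))})
    print(latest.get_nowait())  # {'delay_ms': 100}
```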

```python
#!/usr/bin/env python3

import os

# Set before importing depthai so the log level is in place when the library initializes.
os.environ["DEPTHAI_LEVEL"] = "debug"

import queue

import cv2
import depthai as dai


def applyDepthsetting(depth_cam):
    depth_cam.setDefaultProfilePreset(dai.node.StereoDepth.PresetMode.HIGH_ACCURACY)
    depth_cam.initialConfig.setMedianFilter(dai.MedianFilter.KERNEL_5x5)
    depth_cam.initialConfig.setBilateralFilterSigma(10)  # May cause big latency
    depth_cam.initialConfig.setLeftRightCheckThreshold(5)
    depth_cam.initialConfig.setConfidenceThreshold(50)
    depth_cam.setLeftRightCheck(True)
    depth_cam.setExtendedDisparity(False)
    depth_cam.setSubpixel(True)

    # Post-processing filters are set on the raw config, which is then written back.
    config = depth_cam.initialConfig.get()
    config.postProcessing.thresholdFilter.minRange = 10
    config.postProcessing.thresholdFilter.maxRange = 20000
    config.postProcessing.speckleFilter.enable = False
    config.postProcessing.speckleFilter.speckleRange = 200
    config.postProcessing.spatialFilter.enable = False
    config.postProcessing.spatialFilter.holeFillingRadius = 2
    config.postProcessing.spatialFilter.numIterations = 1
    config.postProcessing.temporalFilter.enable = False
    config.postProcessing.temporalFilter.delta = 50
    config.postProcessing.temporalFilter.alpha = 0.4
    config.postProcessing.decimationFilter.decimationFactor = 3

    DecimationMode = dai.RawStereoDepthConfig.PostProcessing.DecimationFilter.DecimationMode
    # config.postProcessing.decimationFilter.decimationMode = DecimationMode.PIXEL_SKIPPING  # works fine
    config.postProcessing.decimationFilter.decimationMode = DecimationMode.NON_ZERO_MEDIAN  # May cause big latency
    depth_cam.initialConfig.set(config)


def applay_mono_setting(cam):
    cam.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)
    cam.setFps(30)
    cam.setNumFramesPool(10)


def createpipeline(useStereo1, useStereo2):
    pipeline = dai.Pipeline()

    # Stereo 1: CAM_A (left) + CAM_D (right)
    if useStereo1:
        left_1 = pipeline.createMonoCamera()
        left_1.setBoardSocket(dai.CameraBoardSocket.CAM_A)
        applay_mono_setting(left_1)

        right_1 = pipeline.createMonoCamera()
        right_1.setBoardSocket(dai.CameraBoardSocket.CAM_D)
        applay_mono_setting(right_1)

        depth_1 = pipeline.create(dai.node.StereoDepth)
        # depth_1.setInputResolution(640, 400)
        left_1.out.link(depth_1.left)
        right_1.out.link(depth_1.right)
        applyDepthsetting(depth_1)

        xoutdepth_1 = pipeline.create(dai.node.XLinkOut)
        xoutdepth_1.setStreamName("depth_1")
        depth_1.depth.link(xoutdepth_1.input)

        # Note: the "left_1" stream carries the right camera (CAM_D) frames.
        xoutLeft_1 = pipeline.create(dai.node.XLinkOut)
        xoutLeft_1.setStreamName("left_1")
        right_1.out.link(xoutLeft_1.input)

    # Stereo 2: CAM_B (left) + CAM_C (right)
    if useStereo2:
        left_2 = pipeline.createMonoCamera()
        left_2.setBoardSocket(dai.CameraBoardSocket.CAM_B)
        applay_mono_setting(left_2)

        right_2 = pipeline.createMonoCamera()
        right_2.setBoardSocket(dai.CameraBoardSocket.CAM_C)
        applay_mono_setting(right_2)

        depth_2 = pipeline.create(dai.node.StereoDepth)
        # depth_2.setInputResolution(640, 400)
        left_2.out.link(depth_2.left)
        right_2.out.link(depth_2.right)
        applyDepthsetting(depth_2)

        xoutdepth_2 = pipeline.create(dai.node.XLinkOut)
        xoutdepth_2.setStreamName("depth_2")
        depth_2.depth.link(xoutdepth_2.input)

        # Note: the "left_2" stream carries the right camera (CAM_C) frames.
        xoutLeft_2 = pipeline.create(dai.node.XLinkOut)
        xoutLeft_2.setStreamName("left_2")
        right_2.out.link(xoutLeft_2.input)

    return pipeline


# Each callback measures host-side latency (host clock minus the frame's
# host-synced timestamp) and hands the result to the main loop.
def callbackLeft_1(cb):
    global qLeft_1, left_1_cnt
    frame = cb.getCvFrame()
    delay = (dai.Clock.now() - cb.getTimestamp()).total_seconds()
    qLeft_1.put({"delay": delay, "frame": frame})
    left_1_cnt += 1


def callbackLeft_2(cb):
    global qLeft_2, left_2_cnt
    frame = cb.getCvFrame()
    delay = (dai.Clock.now() - cb.getTimestamp()).total_seconds()
    qLeft_2.put({"delay": delay, "frame": frame})
    left_2_cnt += 1


def callbackDepth_1(cb):
    global qDepth_1, depth_1_cnt
    frame = cb.getCvFrame()
    delay = (dai.Clock.now() - cb.getTimestamp()).total_seconds()
    qDepth_1.put({"delay": delay, "frame": frame})
    depth_1_cnt += 1


def callbackDepth_2(cb):
    global qDepth_2, depth_2_cnt
    frame = cb.getCvFrame()
    delay = (dai.Clock.now() - cb.getTimestamp()).total_seconds()
    qDepth_2.put({"delay": delay, "frame": frame})
    depth_2_cnt += 1


def main():
    global qDepth_1, qDepth_2, depth_1_cnt, depth_2_cnt, qLeft_1, qLeft_2, left_2_cnt, left_1_cnt

    # Arguments
    showImg = False
    useStereo1 = True
    useStereo2 = True

    pipeline = createpipeline(useStereo1=useStereo1, useStereo2=useStereo2)
    device = dai.Device(pipeline)
    usbSpeed = device.getUsbSpeed()

    # Frame counters, incremented in the callbacks and reset every second
    depth_1_cnt = 0
    depth_2_cnt = 0
    left_2_cnt = 0
    left_1_cnt = 0

    # Most recent latency per stream, in ms
    delay_depth_1 = 0
    delay_depth_2 = 0
    delay_left_1 = 0
    delay_left_2 = 0

    # Hand-off from the callback threads to the main loop
    qDepth_1 = queue.Queue()
    qDepth_2 = queue.Queue()
    qLeft_1 = queue.Queue()
    qLeft_2 = queue.Queue()

    if useStereo1:
        device.getOutputQueue("depth_1", 4, False).addCallback(callbackDepth_1)
        device.getOutputQueue("left_1", 4, False).addCallback(callbackLeft_1)
    if useStereo2:
        device.getOutputQueue("depth_2", 4, False).addCallback(callbackDepth_2)
        device.getOutputQueue("left_2", 4, False).addCallback(callbackLeft_2)

    YELLOW = '\033[33m'
    Color_RESET = '\033[0m'
    last = dai.Clock.now()

    while True:
        now = dai.Clock.now()
        dt = (now - last).total_seconds()
        if dt > 1:
            # Once per second: report frame counts and the latest latency per stream
            if delay_depth_1 > 300 or delay_depth_2 > 300:
                print(YELLOW + "[warning] big latency" + Color_RESET)
            print(f"{usbSpeed}")
            print(f"stereo1 mono  : fps: {left_1_cnt} delay {delay_left_1} ms")
            print(f"stereo1 depth : fps: {depth_1_cnt} delay {delay_depth_1} ms")
            print(f"stereo2 mono  : fps: {left_2_cnt} delay {delay_left_2} ms")
            print(f"stereo2 depth : fps: {depth_2_cnt} delay {delay_depth_2} ms")
            depth_1_cnt = 0
            depth_2_cnt = 0
            left_2_cnt = 0
            left_1_cnt = 0
            last = now

        if not qDepth_1.empty():
            dataDepth_1 = qDepth_1.get(block=False)
            delay_depth_1 = round(dataDepth_1["delay"] * 1e3)
            if showImg:
                cv2.imshow("depth_1", dataDepth_1["frame"])

        if not qDepth_2.empty():
            dataDepth_2 = qDepth_2.get(block=False)
            delay_depth_2 = round(dataDepth_2["delay"] * 1e3)
            if showImg:
                cv2.imshow("depth_2", dataDepth_2["frame"])

        if not qLeft_1.empty():
            dataLeft_1 = qLeft_1.get(block=False)
            delay_left_1 = round(dataLeft_1["delay"] * 1e3)
            if showImg:
                cv2.imshow("left_1", dataLeft_1["frame"])

        if not qLeft_2.empty():
            dataLeft_2 = qLeft_2.get(block=False)
            delay_left_2 = round(dataLeft_2["delay"] * 1e3)
            if showImg:
                cv2.imshow("left_2", dataLeft_2["frame"])

        if cv2.waitKey(1) == ord('q'):
            break

    cv2.destroyAllWindows()


if __name__ == "__main__":
    main()
```
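The loop above prints only the most recent delay once per second, so a single slow frame and a sustained backlog can look the same. A small per-window aggregator could make runs with and without `setBilateralFilterSigma` and `NON_ZERO_MEDIAN` easier to compare. `LatencyWindow` below is a hypothetical helper, not part of DepthAI; it assumes only the per-frame delays in milliseconds that the callbacks already compute.

```python
import threading
from typing import List


class LatencyWindow:
    """Hypothetical helper: collects per-frame latencies (ms) for one stream, summarized per window."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._samples: List[float] = []
        self._lock = threading.Lock()  # callbacks and the main loop run on different threads

    def add(self, delay_ms: float) -> None:
        # Called from the callback thread for every frame.
        with self._lock:
            self._samples.append(delay_ms)

    def flush(self, window_s: float) -> str:
        # Called from the main loop; returns a summary and starts a new window.
        with self._lock:
            samples, self._samples = self._samples, []
        if not samples:
            return f"{self.name}: no frames"
        fps = len(samples) / window_s
        mean = sum(samples) / len(samples)
        return f"{self.name}: fps {fps:.1f} mean {mean:.0f} ms max {max(samples):.0f} ms"


if __name__ == "__main__":
    stats = LatencyWindow("stereo1 depth")
    for d in (40.0, 60.0, 350.0):
        stats.add(d)
    print(stats.flush(window_s=1.0))  # stereo1 depth: fps 3.0 mean 150 ms max 350 ms
    print(stats.flush(window_s=1.0))  # stereo1 depth: no frames
```

In the script, each callback could call `add(delay * 1e3)` on its stream's `LatencyWindow`, and the `if dt > 1:` branch could print `flush(dt)` for each stream instead of the single latest value.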