What model is used in the person tracking example video?

- Best Answer, set by erik

Hi AdamPolak,

It's the person-detection-retail-0013. You can check the code here: https://discuss.luxonis.com/blog/1316-people-tracking-counting-with-sdk

Thanks, Erik

thank you!


Is it possible to use sequence numbers to sync the detections with the video frames received on the host, so the two can be displayed together?
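A minimal host-side sketch of sequence-number matching, assuming the frames and the detections originate from the same camera output (so each detection result carries the sequence number of the frame it was computed on) and that each stream arrives in increasing sequence order. `Frame`, `Detections`, and `SeqSync` are hypothetical stand-ins, not depthai types; with depthai the sequence number would presumably come from each message's `getSequenceNum()`.

```cpp
#include <cstddef>
#include <cstdint>
#include <iterator>
#include <map>
#include <optional>
#include <string>
#include <utility>

// Hypothetical stand-ins for the frame and detection messages:
// only the sequence number matters for matching.
struct Frame {
    int64_t seq;
    std::string data;  // placeholder for pixel data
};
struct Detections {
    int64_t seq;
    int count;  // placeholder for the detection list
};

// Buffers messages from two streams and releases a pair once a frame and
// a detection result with the same sequence number have both arrived.
class SeqSync {
public:
    std::optional<std::pair<Frame, Detections>> addFrame(const Frame& f) {
        frames_[f.seq] = f;
        return tryMatch(f.seq);
    }
    std::optional<std::pair<Frame, Detections>> addDetections(const Detections& d) {
        dets_[d.seq] = d;
        return tryMatch(d.seq);
    }
    std::size_t pending() const { return frames_.size() + dets_.size(); }

private:
    std::optional<std::pair<Frame, Detections>> tryMatch(int64_t seq) {
        auto fi = frames_.find(seq);
        auto di = dets_.find(seq);
        if (fi == frames_.end() || di == dets_.end()) return std::nullopt;
        std::pair<Frame, Detections> out{fi->second, di->second};
        // Assuming in-order delivery, anything older than the matched
        // sequence number can never be matched, so drop it with the pair.
        frames_.erase(frames_.begin(), std::next(fi));
        dets_.erase(dets_.begin(), std::next(di));
        return out;
    }
    std::map<int64_t, Frame> frames_;      // unmatched frames, keyed by seq
    std::map<int64_t, Detections> dets_;   // unmatched detections, keyed by seq
};
```

Frames that the NN never processed (for example, when it runs slower than the camera) are discarded the next time a later pair matches, so the buffers do not grow without bound.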

I don't see any examples of streaming video from the device and then displaying the frames on the host.

Would frames pulled from the video output stay aligned with the preview frames when both are sent downstream to the host? Should I link the video feed to an XLinkOut node in order to send compressed frames?

I used ImageManip to downscale the frames (resizing them to fewer pixels), but it seems to have lowered the FPS even further.
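For reference, a small sketch of how much raw data the 408x240 resize from the pipeline below puts on the link per frame and per second. It assumes uncompressed planar BGR888p (three 8-bit planes, so 3 bytes per pixel, no stride padding) and an illustrative 30 FPS; the function names are hypothetical. This arithmetic only covers transfer size and says nothing about how much compute ImageManip itself costs.

```cpp
#include <cstdint>

// Raw size of one planar BGR888p / RGB888p frame:
// three 8-bit planes, i.e. 3 bytes per pixel (no padding assumed).
constexpr uint64_t planarRgbFrameBytes(uint64_t width, uint64_t height) {
    return width * height * 3;
}

// Raw payload per second for a stream of such frames at a given FPS.
constexpr uint64_t rawBytesPerSecond(uint64_t width, uint64_t height, uint64_t fps) {
    return planarRgbFrameBytes(width, height) * fps;
}

// 408x240 at an assumed 30 FPS: 293,760 bytes/frame, about 8.8 MB/s.
static_assert(planarRgbFrameBytes(408, 240) == 293760, "408*240*3");
static_assert(rawBytesPerSecond(408, 240, 30) == 8812800, "293760*30");
// A hypothetical 1920x1080 frame, for comparison: 6,220,800 bytes.
static_assert(planarRgbFrameBytes(1920, 1080) == 6220800, "1920*1080*3");
```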

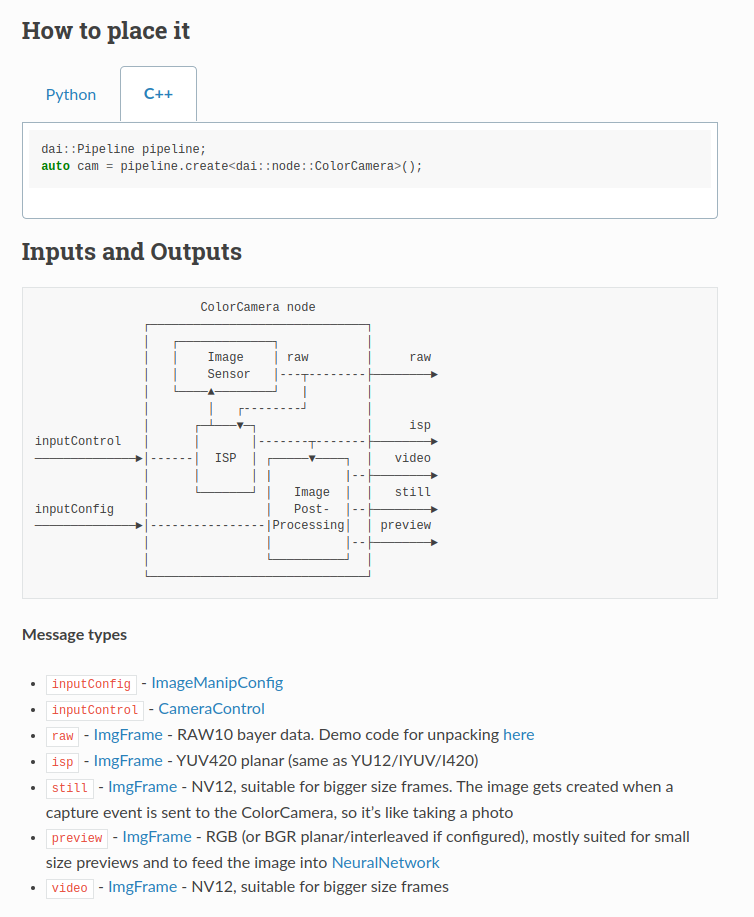

```cpp
// ColorCamera setup
camRgb = pipeline->create<dai::node::ColorCamera>();
camRgb->setPreviewSize(st->rgb_dai_preview_x, st->rgb_dai_preview_y);
camRgb->setResolution(st->rgb_dai_res);
camRgb->setInterleaved(false);
camRgb->setColorOrder(dai::ColorCameraProperties::ColorOrder::RGB);
camRgb->setBoardSocket(dai::CameraBoardSocket::RGB);

// Here I will take the camRgb preview frames and downscale them before sending them out
camRgbManip = pipeline->create<dai::node::ImageManip>();
camRgbManip->initialConfig.setResize(408, 240);
camRgbManip->initialConfig.setFrameType(dai::ImgFrame::Type::BGR888p);
camRgb->preview.link(camRgbManip->inputImage);

// Send the downscaled frames to the host on the "rgb" stream
rgbOut = pipeline->create<dai::node::XLinkOut>();
rgbOut->setStreamName("rgb");
camRgbManip->out.link(rgbOut->input);
```
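To draw detections on the downscaled 408x240 frames, the box coordinates have to be scaled to that frame size. A minimal sketch, assuming the box fields are normalized to [0, 1] (as the xmin/ymin/xmax/ymax fields of depthai detections are) and that the displayed frame shows the same field of view and aspect ratio as the NN input; `NormBox`, `PixelRect`, and `toPixels` are hypothetical names, not depthai types.

```cpp
#include <algorithm>

// Hypothetical mirror of a detection's normalized box (values in [0, 1]).
struct NormBox {
    float xmin, ymin, xmax, ymax;
};

// Pixel-space rectangle on the displayed frame.
struct PixelRect {
    int x, y, width, height;
};

// Scales a normalized box to a frame of frameW x frameH pixels, clamping
// each coordinate to [0, 1] first. Assumes the frame has the same field of
// view as the NN input (no extra crop or letterboxing).
PixelRect toPixels(const NormBox& b, int frameW, int frameH) {
    auto clamp01 = [](float v) { return std::clamp(v, 0.0f, 1.0f); };
    int x1 = static_cast<int>(clamp01(b.xmin) * frameW);
    int y1 = static_cast<int>(clamp01(b.ymin) * frameH);
    int x2 = static_cast<int>(clamp01(b.xmax) * frameW);
    int y2 = static_cast<int>(clamp01(b.ymax) * frameH);
    return {x1, y1, x2 - x1, y2 - y1};
}
```

Because the coordinates are normalized, the same detection can be drawn on the full-resolution preview or on the 408x240 ImageManip output by passing a different frame size.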

Does ImageManip take processing power away from the NNs?