Hi RyanLee

I'm not sure what you mean by "id does not match x, y value for me", could you please elaborate?

The demo works by calculating approximate spatial coordinates for a given region of interest (ROI). I'll use it to explain.

We run a pipeline and acquire a depth frame, which stores depth data (distance from the camera along the Z axis) for each pixel in the frame. This depth frame then gets passed to the HostSpatialsCalc class, which estimates the spatial position for a given pixel or ROI.

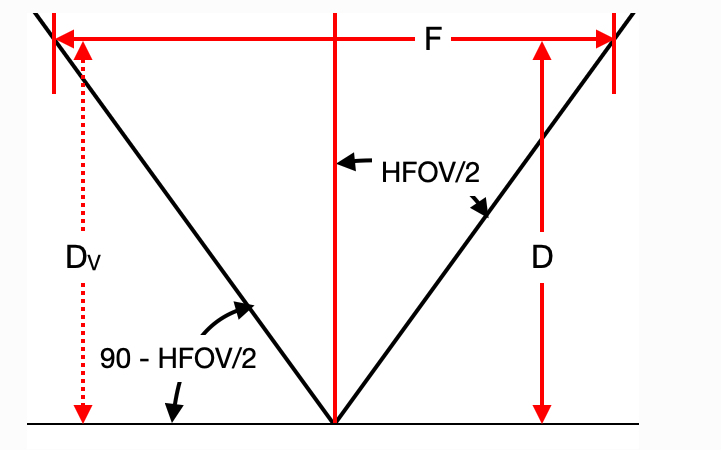

First it sets the center of the frame as the origin of the coordinate frame. The center pixel therefore gets the values (x=0, y=0, z=depth). The x and y values of pixels away from the origin are calculated from the camera FOV, their pixel offset from the center, and their depth, using basic trigonometry (x and y scale with depth).

In short, we calculate the angle from the center to the desired pixel as seen by the camera (taking FOV into account) and multiply the tangent of that angle by the depth.
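Here is a minimal, self-contained sketch of that calculation with made-up numbers. The function name `pixel_to_spatial` and the example values are only for illustration and are not part of the demo. The result is in the same unit as the depth you pass in.

```python
import math

def pixel_to_spatial(px, py, width, height, hfov_deg, depth):
    """Approximate (x, y, z) for pixel (px, py) at the given depth.

    Hypothetical helper that mirrors the demo's math; not part of the demo.
    """
    hfov = math.radians(hfov_deg)
    # Offset of the pixel from the image center, in pixels
    off_x = px - width // 2
    off_y = py - height // 2
    # Angle between the optical axis and the ray through the pixel.
    # Like the demo, HFOV and half the image width are used for both axes.
    half_w = width / 2.0
    angle_x = math.atan(math.tan(hfov / 2.0) * off_x / half_w)
    angle_y = math.atan(math.tan(hfov / 2.0) * off_y / half_w)
    return {
        'x': depth * math.tan(angle_x),
        'y': -depth * math.tan(angle_y),  # image y grows downward
        'z': depth,
    }

# 640x400 frame, 90 degree HFOV, depth of 1000 at both pixels
center = pixel_to_spatial(320, 200, 640, 400, hfov_deg=90.0, depth=1000.0)
right_edge = pixel_to_spatial(640, 200, 640, 400, hfov_deg=90.0, depth=1000.0)
print(center, right_edge)
```

With a 90° HFOV, the center pixel gets x=0 and y=0, while a pixel at the right edge of the image comes out with x roughly equal to the depth, since tan(45°) = 1.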

Source code:

    import math

    import depthai as dai
    import numpy as np

    # Methods of the HostSpatialsCalc class (excerpt)

    def _calc_angle(self, frame, offset, HFOV):
        # Angle between the optical axis and the ray through a pixel
        # that lies `offset` pixels away from the image center
        return math.atan(math.tan(HFOV / 2.0) * offset / (frame.shape[1] / 2.0))

    def calc_spatials(self, depthData, roi, averaging_method=np.mean):
        depthFrame = depthData.getFrame()

        roi = self._check_input(roi, depthFrame)  # If point was passed, convert it to ROI
        xmin, ymin, xmax, ymax = roi

        # Calculate the average depth in the ROI.
        depthROI = depthFrame[ymin:ymax, xmin:xmax]
        inRange = (self.THRESH_LOW <= depthROI) & (depthROI <= self.THRESH_HIGH)

        # Required information for calculating spatial coordinates on the host
        HFOV = np.deg2rad(self.calibData.getFov(dai.CameraBoardSocket(depthData.getInstanceNum())))

        averageDepth = averaging_method(depthROI[inRange])

        centroid = {  # Get centroid of the ROI
            'x': int((xmax + xmin) / 2),
            'y': int((ymax + ymin) / 2)
        }

        midW = int(depthFrame.shape[1] / 2)  # middle of the depth img width
        midH = int(depthFrame.shape[0] / 2)  # middle of the depth img height
        bb_x_pos = centroid['x'] - midW
        bb_y_pos = centroid['y'] - midH

        angle_x = self._calc_angle(depthFrame, bb_x_pos, HFOV)
        angle_y = self._calc_angle(depthFrame, bb_y_pos, HFOV)

        spatials = {
            'z': averageDepth,
            'x': averageDepth * math.tan(angle_x),
            'y': -averageDepth * math.tan(angle_y)
        }
        return spatials, centroid
Averaging (np.mean() by default) is used because it gives a more stable result. The depth of a single pixel is often very noisy and tends to jump around a lot, but this can be avoided (or at least reduced) by averaging the depth of the pixels inside the region we are interested in. Pixels whose depth falls outside the THRESH_LOW to THRESH_HIGH range are left out of the average.
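To illustrate why averaging helps, here is a small sketch on a toy depth frame. It uses only the standard library so it runs anywhere (the demo itself works on NumPy arrays), and the threshold values, the frame contents, and the helper name `average_roi_depth` are assumptions made up for this example.

```python
import statistics

THRESH_LOW, THRESH_HIGH = 200, 30000  # assumed valid depth range for this example

def average_roi_depth(depth_rows, roi, averaging_method=statistics.mean):
    """Average the in-range depth values inside roi = (xmin, ymin, xmax, ymax)."""
    xmin, ymin, xmax, ymax = roi
    values = [
        d
        for row in depth_rows[ymin:ymax]
        for d in row[xmin:xmax]
        if THRESH_LOW <= d <= THRESH_HIGH  # drop invalid readings such as 0
    ]
    return averaging_method(values)

# 4x4 toy depth frame; 0 marks pixels with no valid depth
frame = [
    [1000, 1010,  990, 1000],
    [1005,    0, 1200,  995],
    [ 998, 1002, 1001, 1003],
    [1000,  999, 1004,    0],
]

single_pixel = frame[1][2]  # a noisy outlier
roi_mean = average_roi_depth(frame, (1, 1, 3, 3))
roi_median = average_roi_depth(frame, (1, 1, 3, 3), statistics.median)
print(single_pixel, roi_mean, roi_median)
```

The single pixel reads 1200, while the ROI mean is pulled back toward its neighbors and the median lands on 1002. Passing a different function as `averaging_method` (for example a median) is the same idea as the `averaging_method` parameter of `calc_spatials`.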

Hope this clears things up a bit. Let me know if you have any more questions.

Thanks,

Jaka